Camera Aided Reconfigurable Intelligent Surfaces: Computer Vision Based Fast Beam Selection

Nov 14, 2022

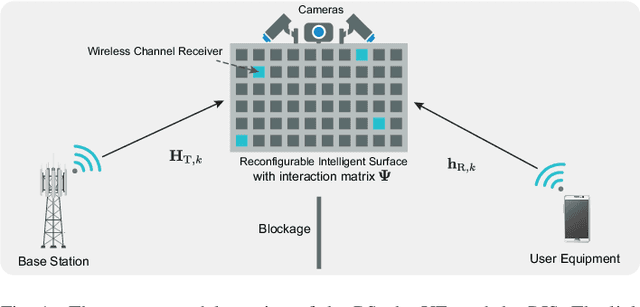

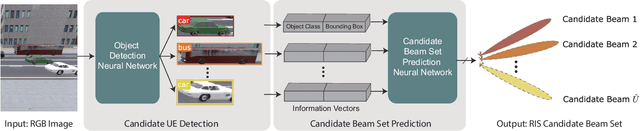

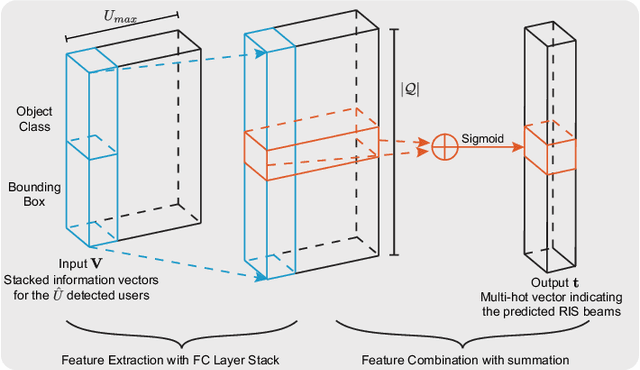

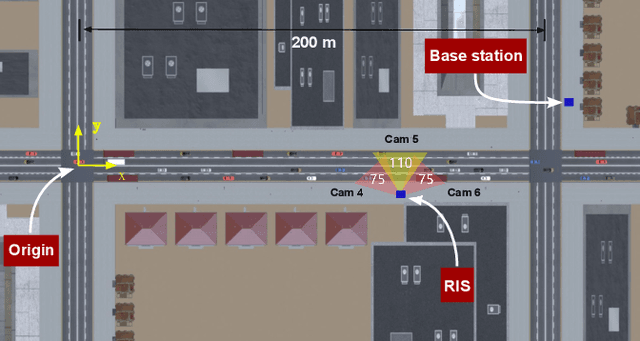

Reconfigurable intelligent surfaces (RISs) have attracted increasing interest due to their ability to improve the coverage, reliability, and energy efficiency of millimeter wave (mmWave) communication systems. However, designing the RIS beamforming typically requires a large channel estimation or beam training overhead, which degrades the efficiency of these systems. In this paper, we propose to equip RISs with visual sensors (cameras) that obtain sensing information about the surroundings and the user/basestation locations, guide the RIS beam selection, and reduce the beam training overhead. We develop a machine learning (ML) framework that leverages this visual sensing information to efficiently select the optimal RIS reflection beams that reflect the signals between the basestation and mobile users. To evaluate the developed approach, we build a high-fidelity synthetic dataset that comprises co-existing wireless and visual data. Based on this dataset, the results show that the proposed vision-aided machine learning solution can accurately predict the RIS beams and achieve a near-optimal achievable rate while significantly reducing the beam training overhead.
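To make the notion of beam training overhead concrete, the following is a minimal sketch, not the authors' implementation. It assumes a DFT-style RIS codebook and idealized line-of-sight channels, and it replaces the vision-based ML model with a hard-coded score vector. All function names, parameters, and numeric values (`dft_codebook`, `beam_gains`, `top_k_search`, 64 elements, `k = 5`) are hypothetical choices made for illustration. The sketch compares an exhaustive sweep over the whole codebook with measuring only the few beams that a predictor ranks highest.

```python
import numpy as np


def dft_codebook(num_elements: int, num_beams: int) -> np.ndarray:
    """Return a (num_beams, num_elements) array of unit-modulus phase-shift vectors.

    Assumption: a DFT-style codebook; the paper's abstract does not specify one.
    """
    n = np.arange(num_elements)
    spatial_freqs = np.arange(num_beams) / num_beams
    return np.exp(-2j * np.pi * np.outer(spatial_freqs, n))


def beam_gains(codebook: np.ndarray, h_bs: np.ndarray, h_ue: np.ndarray) -> np.ndarray:
    """Received power of every beam for the cascaded basestation-RIS-user channel."""
    cascaded = h_bs * h_ue  # element-wise product of the two RIS-side channels
    return np.abs(codebook @ cascaded) ** 2


def exhaustive_search(gains: np.ndarray) -> tuple[int, int]:
    """Measure every beam; return (best beam index, number of measurements)."""
    return int(np.argmax(gains)), int(gains.size)


def top_k_search(gains: np.ndarray, scores: np.ndarray, k: int) -> tuple[int, int]:
    """Measure only the k beams with the highest predicted scores."""
    candidates = np.argsort(scores)[-k:]
    best = candidates[np.argmax(gains[candidates])]
    return int(best), k


# Hypothetical setup: 64 RIS elements, 64 beams, ideal line-of-sight channels.
num_elements = num_beams = 64
n = np.arange(num_elements)
h_bs = np.exp(2j * np.pi * (3 / 64) * n)  # basestation-to-RIS steering vector
h_ue = np.exp(2j * np.pi * (7 / 64) * n)  # RIS-to-user steering vector

codebook = dft_codebook(num_elements, num_beams)
gains = beam_gains(codebook, h_bs, h_ue)

# Placeholder for a vision model's output: a guess centred two beams away
# from the true optimum. A real system would compute scores from camera images.
scores = -np.abs(np.arange(num_beams) - 12).astype(float)

best_full, cost_full = exhaustive_search(gains)
best_topk, cost_topk = top_k_search(gains, scores, k=5)
print(f"exhaustive: beam {best_full} after {cost_full} measurements")
print(f"top-k:      beam {best_topk} after {cost_topk} measurements")
```

In this toy setting the predictor only needs to place the optimal beam among its top-k candidates, so a moderately accurate guess is enough to recover the same beam as the full sweep with far fewer measurements. How well this carries over to realistic channels and a trained vision model is what the paper's evaluation addresses.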
