CAKD: A Correlation-Aware Knowledge Distillation Framework Based on Decoupling Kullback-Leibler Divergence

Oct 17, 2024

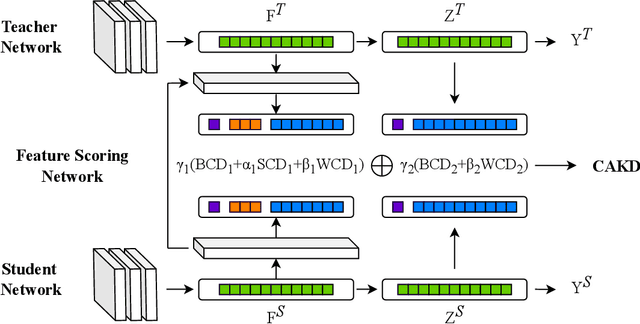

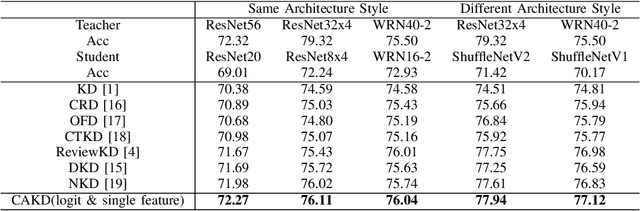

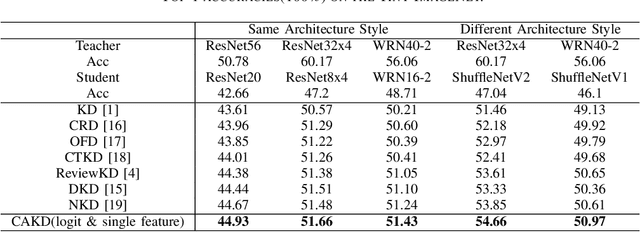

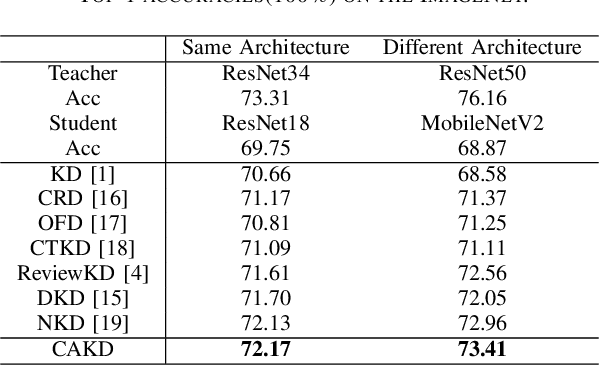

In knowledge distillation, a primary focus has been on transforming and balancing multiple distillation components. In this work, we emphasize the importance of thoroughly examining each distillation component, as we observe that not all elements are equally crucial. From this perspective, we decouple the Kullback-Leibler (KL) divergence into three distinct elements: Binary Classification Divergence (BCD), Strong Correlation Divergence (SCD), and Weak Correlation Divergence (WCD). Each of these elements influences predictions to a different degree. Leveraging these insights, we present the Correlation-Aware Knowledge Distillation (CAKD) framework. CAKD is designed to prioritize the parts of the distillation components that have the most substantial influence on predictions, thereby optimizing knowledge transfer from teacher to student models. Our experiments demonstrate that adjusting the effect of each element makes knowledge transfer more effective. Furthermore, our CAKD framework consistently outperforms the baseline across diverse models and datasets. Our work further highlights the importance and effectiveness of closely examining the impact of different parts of the distillation process.
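
To make the idea of decoupling concrete, the sketch below splits the KL divergence between teacher and student class distributions into three additive terms and checks that they sum back to the full KL. It is an illustration only, not the paper's formulation: the abstract does not define how BCD, SCD, and WCD are computed, so the top-`k` split by teacher probability, the function names `decoupled_kl` and `weighted_loss`, and the weights `alpha`, `beta`, `gamma` are all assumptions introduced here.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array of logits."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def decoupled_kl(teacher_logits, student_logits, target, k=2):
    """Split KL(teacher || student) into three additive terms.

    ASSUMPTION: "strong" correlation = the k non-target classes with the
    highest teacher probability; "weak" = the remaining non-target classes.
    This grouping is hypothetical and chosen only for illustration.
    """
    p = softmax(np.asarray(teacher_logits, dtype=float))
    q = softmax(np.asarray(student_logits, dtype=float))
    pt, qt = p[target], q[target]

    # Binary term: target class versus all other classes combined.
    bcd = pt * np.log(pt / qt) + (1 - pt) * np.log((1 - pt) / (1 - qt))

    # Renormalize the non-target classes so each side sums to 1.
    idx = np.flatnonzero(np.arange(len(p)) != target)
    p_hat = p[idx] / (1 - pt)
    q_hat = q[idx] / (1 - qt)
    terms = p_hat * np.log(p_hat / q_hat)

    # Rank non-target classes by teacher probability, then split at k.
    order = np.argsort(-p_hat)
    scd = (1 - pt) * terms[order[:k]].sum()
    wcd = (1 - pt) * terms[order[k:]].sum()

    total = np.sum(p * np.log(p / q))  # full KL, for comparison
    return bcd, scd, wcd, total


def weighted_loss(bcd, scd, wcd, alpha=1.0, beta=1.0, gamma=1.0):
    """Hypothetical re-weighting of the three terms (weights are assumptions)."""
    return alpha * bcd + beta * scd + gamma * wcd


if __name__ == "__main__":
    teacher = [4.0, 2.5, 2.0, 0.1, -1.0]
    student = [2.0, 1.0, 2.2, 0.5, 0.0]
    bcd, scd, wcd, total = decoupled_kl(teacher, student, target=0, k=2)
    print(np.isclose(bcd + scd + wcd, total))
```

With all three weights set to 1, `weighted_loss` reduces to the ordinary KL divergence; moving the weights away from 1 is one simple way to emphasize or suppress an individual term. Note that under this particular split the second and third terms are partial sums and can be negative on their own, even though their total with the first term is not.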
