C-SENN: Contrastive Self-Explaining Neural Network


Jun 27, 2022

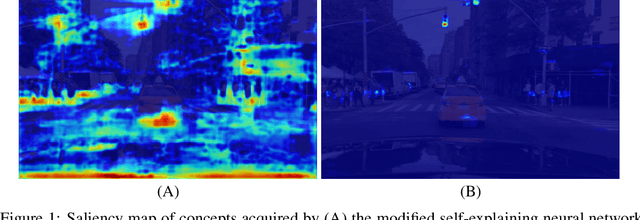

In this study, we use a self-explaining neural network (SENN), which learns concepts without supervision, to automatically acquire concepts that are easy for people to understand. In concept learning, the hidden layer retains verbalizable features relevant to the output, which is crucial when deploying models in real-world environments where explanations are required. However, the interpretability of the concepts produced by SENN is known to degrade in general settings such as autonomous driving scenarios. This study therefore combines contrastive learning with concept learning to improve both the readability of the concepts and task accuracy. We call the resulting model the Contrastive Self-Explaining Neural Network (C-SENN).
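As a rough illustration of how a contrastive term might be attached to a SENN-style concept layer, the NumPy sketch below computes concepts, relevances, and their aggregated output, then scores two views of the same inputs with an InfoNCE-style loss. The abstract does not specify the contrastive objective, the encoders, or the loss weighting, so the function names `senn_forward` and `info_nce`, the linear maps `W_h` and `W_theta`, the temperature, and the weight `lam` are all assumptions made for this example and not the authors' implementation.

```python
import numpy as np

def senn_forward(x, W_h, W_theta):
    """Toy SENN-style forward pass (hypothetical linear encoders).

    Returns concepts h(x), relevances theta(x), and the output
    formed by summing theta_k(x) * h_k(x) over the k concepts.
    """
    h = np.tanh(x @ W_h)             # (batch, k) concept activations
    theta = x @ W_theta              # (batch, k) per-concept relevance
    y = np.sum(theta * h, axis=1)    # (batch,) aggregated prediction
    return h, theta, y

def info_nce(z1, z2, temperature=0.5, eps=1e-12):
    """InfoNCE-style loss: row i of z1 should match row i of z2.

    This particular loss is an assumption; the source only says that
    contrastive learning is combined with concept learning.
    """
    z1 = z1 / (np.linalg.norm(z1, axis=1, keepdims=True) + eps)
    z2 = z2 / (np.linalg.norm(z2, axis=1, keepdims=True) + eps)
    logits = (z1 @ z2.T) / temperature                 # (batch, batch)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(8, 16))
    x_aug = x + 0.05 * rng.normal(size=x.shape)  # stand-in for an augmentation
    W_h = rng.normal(size=(16, 5))
    W_theta = rng.normal(size=(16, 5))

    h1, _, y1 = senn_forward(x, W_h, W_theta)
    h2, _, _ = senn_forward(x_aug, W_h, W_theta)

    targets = rng.normal(size=8)       # placeholder regression targets
    task_loss = np.mean((y1 - targets) ** 2)
    lam = 0.1                          # hypothetical weighting
    total = task_loss + lam * info_nce(h1, h2)
    print("total loss:", total)
```

In this sketch the contrastive term acts on the concept vectors themselves, so two views of the same input are pushed toward similar concept activations; whether C-SENN applies the term at this layer is not stated in the abstract.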

* 10 pages
