By Fair Means or Foul: Quantifying Collusion in a Market Simulation with Deep Reinforcement Learning


Jun 04, 2024

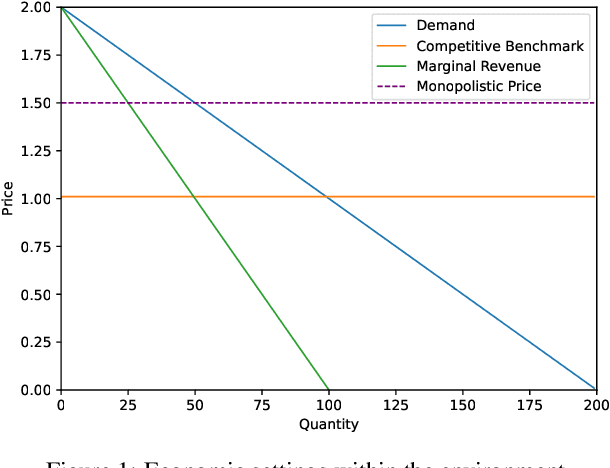

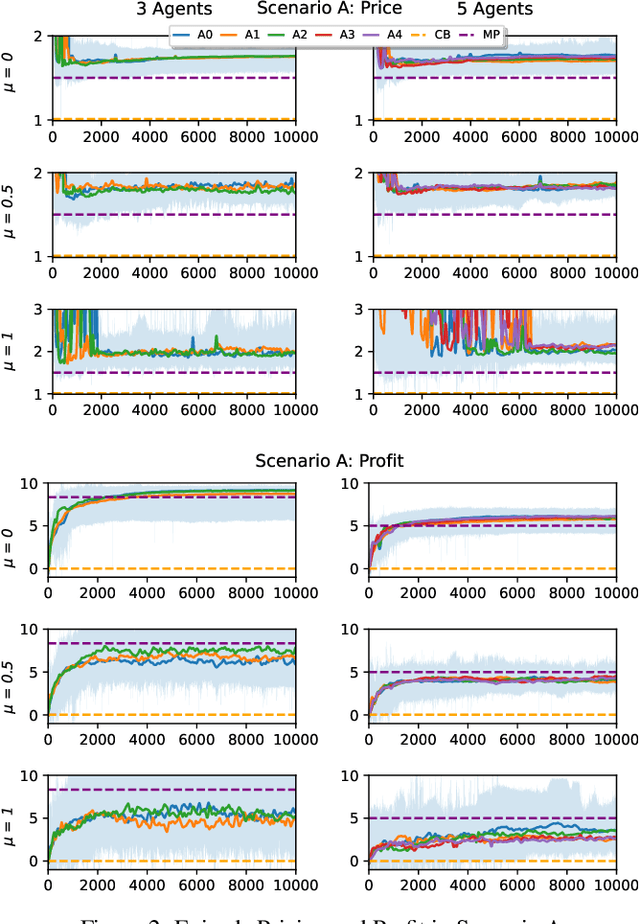

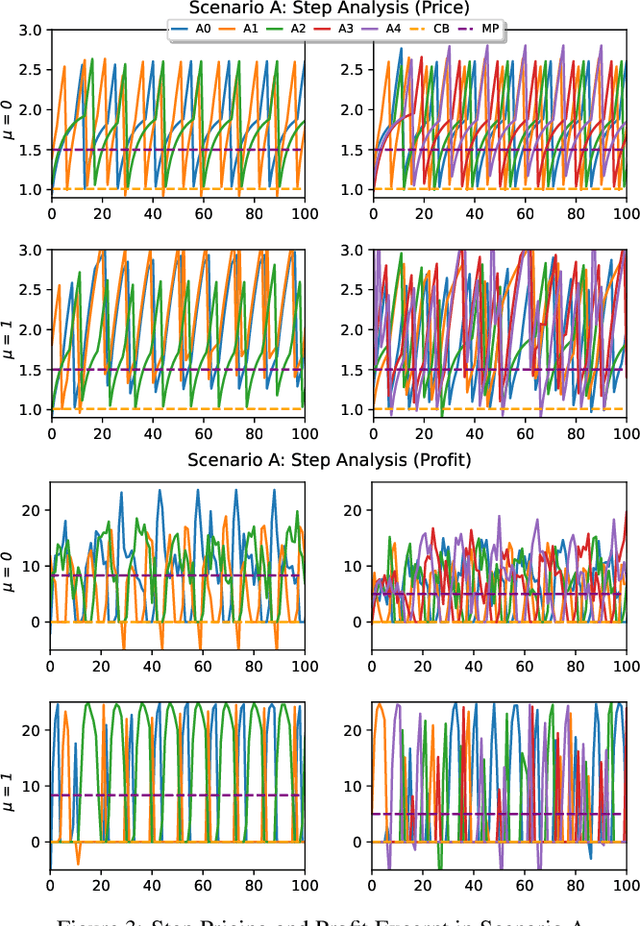

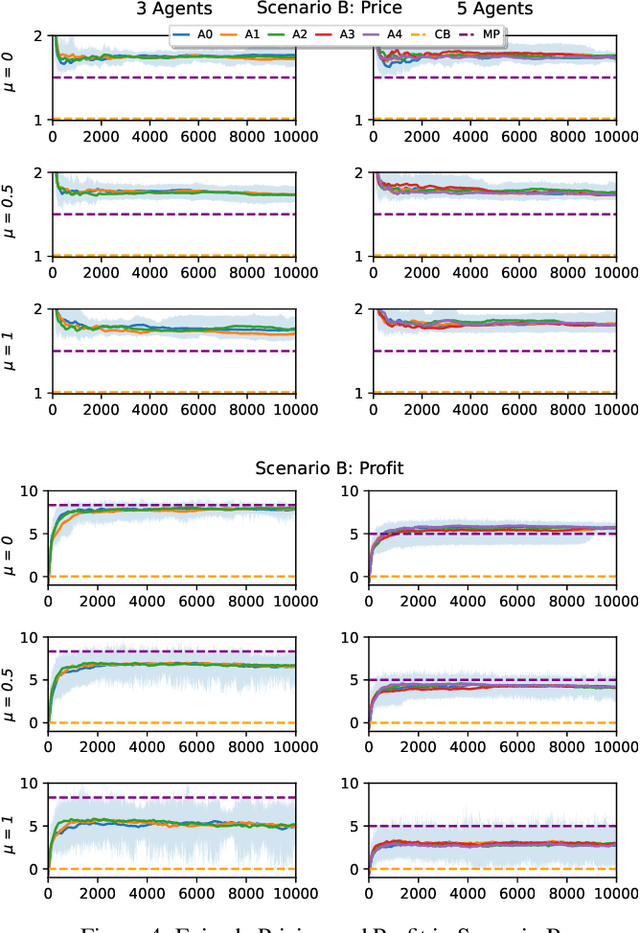

In the rapidly evolving landscape of eCommerce, pricing algorithms based on Artificial Intelligence (AI), particularly those using Reinforcement Learning (RL), are becoming increasingly prevalent. This rise has created an entangled pricing situation with the potential for market collusion. Our research employs an experimental oligopoly model of repeated price competition, systematically varying the environment to cover scenarios ranging from basic economic theory to subjective consumer demand preferences. We also introduce a novel demand framework that supports the implementation of various demand models and allows them to be blended with weights. In contrast to existing research in this domain, we investigate the strategies and emerging pricing patterns that the agents develop and that may lead to a collusive outcome. Furthermore, we investigate a scenario in which agents cannot observe their competitors' prices. Finally, we provide a comprehensive legal analysis across all scenarios. Our findings indicate that RL-based AI agents converge to a collusive state characterized by supracompetitive prices, without necessarily requiring inter-agent communication. Implementing alternative RL algorithms, altering the number of agents or simulation settings, and restricting the scope of the agents' observation space do not significantly change the collusive market outcome.
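The weighted blending of demand models mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the two component models (a winner-takes-all Bertrand rule and a logit rule without an outside option), the function names, and the `quality` and `sensitivity` parameters are all assumptions chosen for illustration.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical type: a demand model maps a price vector to market shares.
DemandModel = Callable[[Sequence[float]], List[float]]


def bertrand_demand(prices: Sequence[float]) -> List[float]:
    """Homogeneous-good rule: the cheapest sellers split the whole market."""
    lowest = min(prices)
    winners = [p == lowest for p in prices]
    share = 1.0 / sum(winners)
    return [share if is_winner else 0.0 for is_winner in winners]


def logit_demand(prices: Sequence[float],
                 quality: float = 2.0,
                 sensitivity: float = 4.0) -> List[float]:
    """Logit shares without an outside option (illustrative parameters)."""
    utilities = [math.exp((quality - p) * sensitivity) for p in prices]
    total = sum(utilities)
    return [u / total for u in utilities]


def blended_demand(prices: Sequence[float],
                   models: Sequence[DemandModel],
                   weights: Sequence[float]) -> List[float]:
    """Weighted average of the shares produced by each demand model."""
    if len(models) != len(weights):
        raise ValueError("need exactly one weight per demand model")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    shares = [0.0] * len(prices)
    for model, weight in zip(models, weights):
        for i, share in enumerate(model(prices)):
            shares[i] += (weight / total_weight) * share
    return shares


if __name__ == "__main__":
    # Two sellers; half the demand follows each rule.
    print(blended_demand([1.0, 1.5], [bertrand_demand, logit_demand], [0.5, 0.5]))
```

Because each component returns shares that sum to one and the weights are normalised, the blended shares also sum to one. Moving weight toward the Bertrand rule makes demand react more sharply to undercutting, while moving it toward the logit rule softens that reaction.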
