Building a Video-and-Language Dataset with Human Actions for Multimodal Logical Inference

Jun 27, 2021

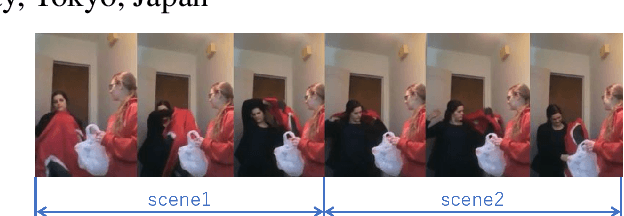

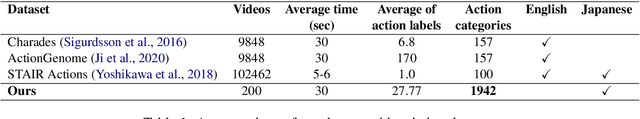

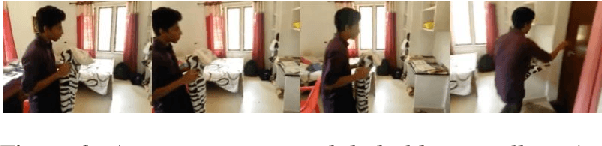

This paper introduces a new video-and-language dataset with human actions for multimodal logical inference, which focuses on intentional and aspectual expressions that describe dynamic human actions. The dataset consists of 200 videos, 5,554 action labels, and 1,942 action triplets of the form <subject, predicate, object> that can be translated into logical semantic representations. The dataset is expected to be useful for evaluating systems that perform multimodal inference between videos and semantically complex sentences, including sentences with negation and quantification.
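The abstract does not spell out how a triplet is translated into a logical representation. As a minimal sketch, assuming a neo-Davidsonian event encoding (an assumption for illustration, not necessarily the paper's scheme), each <subject, predicate, object> triplet can be rendered as an existentially quantified formula, and a video's triplets can serve as a model against which simple existential and negated queries are checked. The names `ActionTriplet`, `to_logic`, and `holds`, and the example labels, are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ActionTriplet:
    """A <subject, predicate, object> action label; the object may be absent."""
    subject: str
    predicate: str
    obj: Optional[str] = None


def to_logic(t: ActionTriplet) -> str:
    """Render a triplet as an event-based first-order formula.

    ASSUMPTION: neo-Davidsonian encoding with subj/obj role predicates;
    the paper's actual semantic representation may differ.
    """
    conjuncts = [f"{t.predicate}(e)", f"subj(e,{t.subject})"]
    if t.obj is not None:
        conjuncts.append(f"obj(e,{t.obj})")
    return "exists e.(" + " & ".join(conjuncts) + ")"


def holds(triplets: Iterable[ActionTriplet], predicate: str,
          subject: Optional[str] = None, obj: Optional[str] = None) -> bool:
    """Existential check: is there an action with this predicate (and roles, if given)?"""
    return any(
        t.predicate == predicate
        and (subject is None or t.subject == subject)
        and (obj is None or t.obj == obj)
        for t in triplets
    )


# Hypothetical labels for one video clip (not taken from the dataset).
video = [
    ActionTriplet("man", "open", "door"),
    ActionTriplet("man", "walk"),
]

print(to_logic(video[0]))                 # exists e.(open(e) & subj(e,man) & obj(e,door))
print(holds(video, "open", obj="door"))   # "Someone opens the door" -> True
print(not holds(video, "run"))            # "No one is running" (negation) -> True
```

Treating the labeled triplets as a closed model is what makes the negated query decidable here; a real inference system would also have to handle the gap between what a video shows and what its labels record.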

* Accepted to MMSR I