Brain-inspired Multilayer Perceptron with Spiking Neurons

Paper and Code

Mar 28, 2022

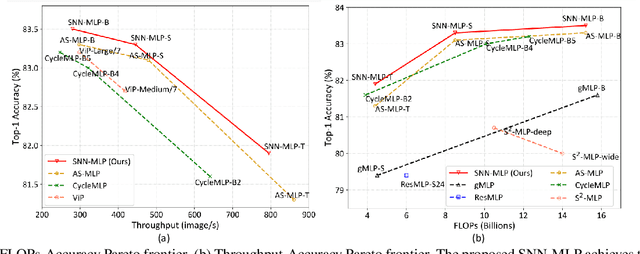

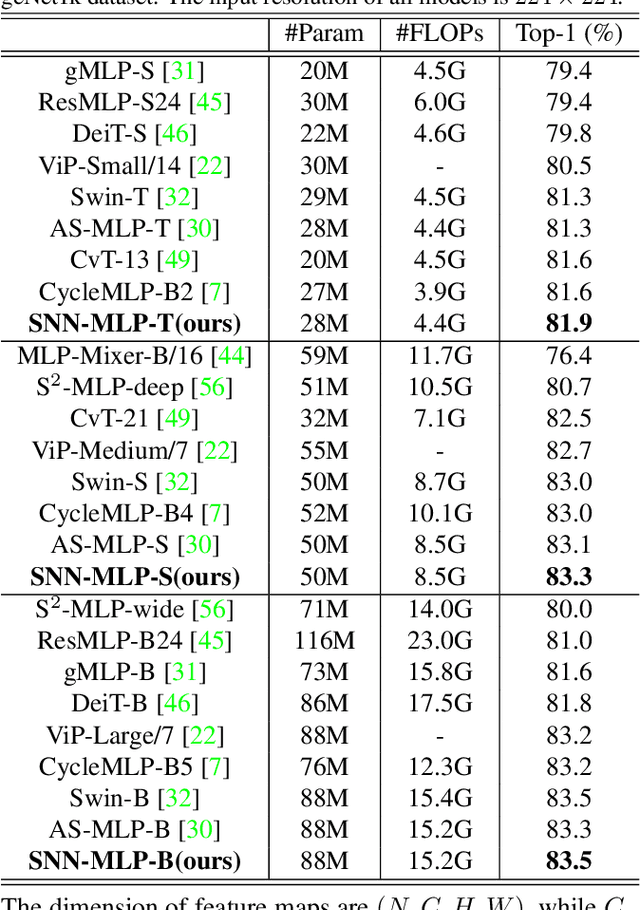

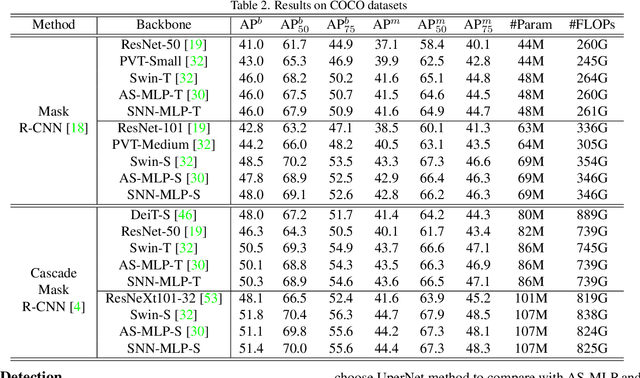

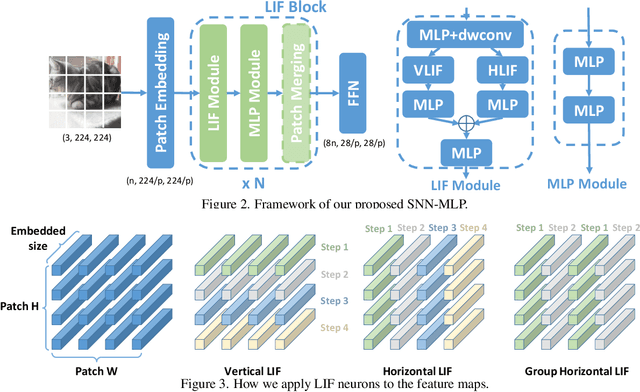

Recently, the Multilayer Perceptron (MLP) has become a hotspot in computer vision. Without inductive bias, MLPs perform well on feature extraction and achieve amazing results. However, due to the simplicity of their structures, their performance depends heavily on the mechanism used to communicate local features. To further improve the performance of MLPs, we introduce information communication mechanisms from brain-inspired neural networks. The Spiking Neural Network (SNN) is the most famous brain-inspired neural network and has achieved great success in dealing with sparse data. Leaky Integrate and Fire (LIF) neurons in SNNs are used to communicate between different time steps. In this paper, we incorporate the mechanism of LIF neurons into MLP models to achieve better accuracy without extra FLOPs. We propose a full-precision LIF operation to communicate between patches, including horizontal LIF and vertical LIF in different directions. We also propose to use group LIF to extract better local features. With LIF modules, our SNN-MLP model achieves 81.9%, 83.3% and 83.5% top-1 accuracy on the ImageNet dataset with only 4.4G, 8.5G and 15.2G FLOPs, respectively, which are state-of-the-art results as far as we know.
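To make the LIF mechanism mentioned in the abstract concrete, here is a minimal sketch of a textbook discrete-time LIF neuron scanned along one axis of a feature map, so that positions along a row (or column) play the role of time steps. This is not the paper's full-precision LIF operation: the function name `lif_scan`, the `decay` and `threshold` values, the binary spike output, and the hard reset are all assumptions chosen for illustration.

```python
import numpy as np

def lif_scan(x, decay=0.5, threshold=1.0, axis=-1):
    """Run a generic discrete LIF neuron along `axis` (hypothetical helper).

    Each position along `axis` is treated as one time step:
    the membrane potential leaks, integrates the input, fires when it
    reaches the threshold, and is then reset to zero.
    """
    # Move the scanned axis to the front so we can iterate over it.
    x = np.moveaxis(np.asarray(x, dtype=np.float64), axis, 0)
    u = np.zeros_like(x[0])        # membrane potential, one per neuron
    spikes = np.zeros_like(x)      # binary spike output (assumed here)
    for t in range(x.shape[0]):
        u = decay * u + x[t]       # leak, then integrate the new input
        fired = u >= threshold     # fire where the threshold is reached
        spikes[t] = fired
        u = np.where(fired, 0.0, u)  # hard reset after a spike
    return np.moveaxis(spikes, 0, axis)

# A constant sub-threshold input accumulates until the neuron fires.
row = np.array([[0.6, 0.6, 0.6, 0.6]])
horizontal = lif_scan(row, axis=1)   # scan left to right
vertical = lif_scan(row.T, axis=0)   # scan top to bottom
```

Under these assumptions, scanning with `axis=1` corresponds loosely to a horizontal direction and `axis=0` to a vertical one; how the paper defines its horizontal, vertical and group LIF operations is not specified in the abstract.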
