BP-STDP: Approximating Backpropagation using Spike Timing Dependent Plasticity


Mar 09, 2018

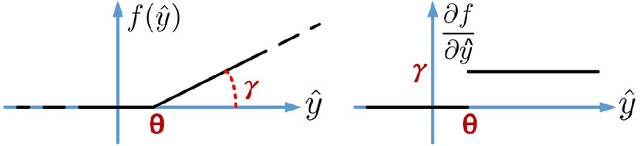

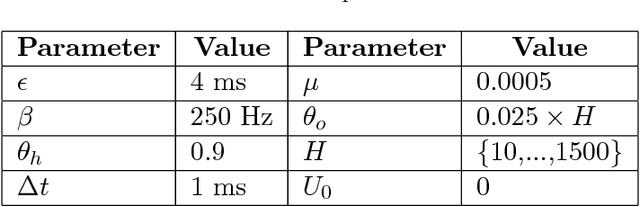

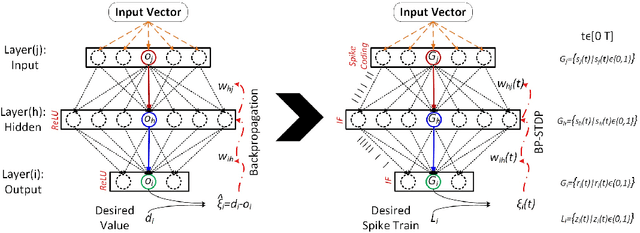

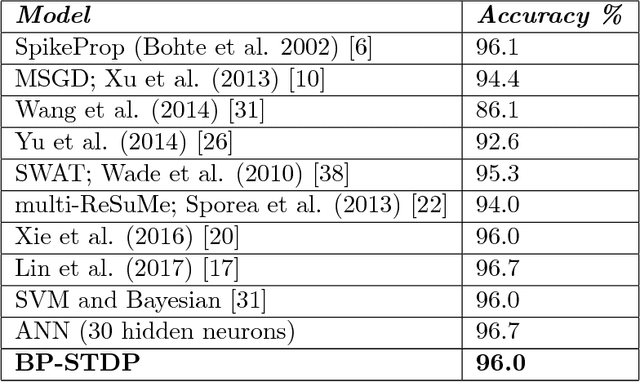

Training spiking neural networks (SNNs) is a necessary step toward understanding computation in the brain, a field still in its infancy. Previous work has shown that supervised learning in multi-layer SNNs enables bio-inspired networks to recognize patterns of stimuli through hierarchical feature acquisition. Although gradient descent has shown impressive performance in multi-layer (and deep) SNNs, it is generally not considered biologically plausible and is also computationally expensive. This paper proposes a novel supervised learning approach based on an event-based spike-timing-dependent plasticity (STDP) rule embedded in a network of integrate-and-fire (IF) neurons. The proposed temporally local learning rule follows the backpropagation weight updates applied at each time step. The approach thus combines the accuracy of gradient descent with the temporal locality and efficiency of STDP, and so can address some open questions about how accurate and efficient computation occurs in the brain. Experimental results on the XOR problem, the Iris data, and the MNIST dataset demonstrate that the proposed SNN performs as successfully as traditional NNs. The approach also compares favorably with state-of-the-art multi-layer SNNs.
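To make the idea of a temporally local, error-driven STDP rule on IF neurons more concrete, here is a minimal single-layer sketch in plain Python. It is an illustration under stated assumptions, not the paper's formulation: the function names `if_step` and `error_driven_stdp_update`, the reset-to-zero behaviour, the `pre_trace` window of recent presynaptic spike counts, and the default threshold and learning rate are all hypothetical choices made for this example. The sign of the weight change is taken from the difference between a target spike and the actual output spike at the current time step, which is one simple way to mirror a gradient-style update with spike events.

```python
def if_step(weights, potentials, in_spikes, threshold=1.0):
    """Advance non-leaky integrate-and-fire neurons by one time step.

    weights[j][i] is the synapse from input i to output neuron j.
    potentials is updated in place; a neuron that fires is reset to 0
    (the reset rule is an assumption of this sketch).
    Returns a list of 0/1 output spikes.
    """
    out_spikes = []
    for j, row in enumerate(weights):
        # Integrate the weighted input spikes arriving at this step.
        potentials[j] += sum(w * s for w, s in zip(row, in_spikes))
        fired = 1 if potentials[j] >= threshold else 0
        if fired:
            potentials[j] = 0.0
        out_spikes.append(fired)
    return out_spikes


def error_driven_stdp_update(weights, pre_trace, out_spikes, target_spikes, lr=0.01):
    """Apply an event-based, error-gated STDP-style update in place.

    pre_trace[i] is the number of spikes input i emitted in a short
    recent window (hypothetical bookkeeping for this sketch).
    The error is +1 when a target spike is missing, -1 when the neuron
    fired without a target spike, and 0 otherwise.
    """
    for j, row in enumerate(weights):
        err = target_spikes[j] - out_spikes[j]
        if err == 0:
            continue  # no event, no weight change
        for i in range(len(row)):
            # Potentiate or depress only synapses whose input was recently active.
            row[i] += lr * err * pre_trace[i]
    return weights
```

In a training loop one would call `if_step` at every time step, keep `pre_trace` updated from the input spike train, and then call `error_driven_stdp_update` with the target spikes for that step. Extending the sketch to hidden layers would require propagating the error signal backward through the weights, which is not shown here.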
