BoxMap: Efficient Structural Mapping and Navigation

Paper and Code

Oct 08, 2024

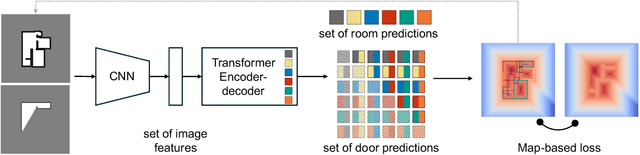

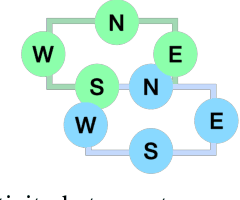

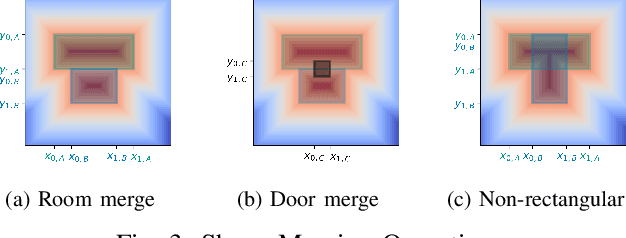

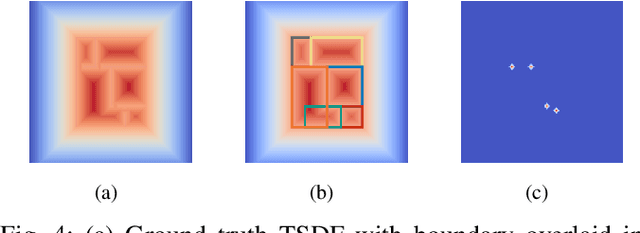

Humans navigate successfully using abstractions, ignoring details that are irrelevant to the task at hand. Most existing robotic applications, by contrast, maintain a detailed environment representation that consumes significant sensing, computing, and storage resources. This cost matters most in resource-constrained settings with a limited power budget. Deep learning methods can learn from prior experience to abstract knowledge of unknown environments and use it to execute tasks (e.g., frontier exploration, object search, or scene understanding) more efficiently. We propose BoxMap, a Detection-Transformer-based architecture that exploits the structure of the partially sensed environment to update a topological graph of the environment, represented as a set of semantic entities (e.g., rooms and doors) and their relations (e.g., connectivity). These predictions from low-level measurements can then be leveraged to achieve high-level goals at lower computational cost than methods based on detailed representations. As an example application, we consider a robot equipped with a 2-D laser scanner tasked with exploring a residential building. Our BoxMap representation scales quadratically with the number of rooms (with a small constant), resulting in significant savings over a full geometric map. Moreover, our high-level topological representation yields 30.9% shorter trajectories in the exploration task than a standard method.
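To make the idea of a room-and-door topological graph concrete, the following is a minimal sketch and not the BoxMap implementation. It assumes rooms are stored as axis-aligned boxes and connectivity as a dense room-by-room matrix, which is one simple way to obtain storage that grows quadratically with the number of rooms. The names `Room`, `TopoMap`, `route`, `num_entries`, and `grid_cells` are hypothetical, as are the example dimensions and the 0.05 m grid resolution.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Room:
    # Hypothetical axis-aligned box in metres: (x_min, y_min, x_max, y_max).
    name: str
    box: tuple[float, float, float, float]


@dataclass
class TopoMap:
    rooms: list[Room] = field(default_factory=list)
    # Dense connectivity matrix: doors[i][j] is True if a door joins rooms i and j.
    doors: list[list[bool]] = field(default_factory=list)

    def add_room(self, room: Room) -> int:
        """Append a room, grow the matrix by one row and column, return its index."""
        for row in self.doors:
            row.append(False)
        self.rooms.append(room)
        self.doors.append([False] * len(self.rooms))
        return len(self.rooms) - 1

    def add_door(self, i: int, j: int) -> None:
        """Mark rooms i and j as connected (doors are undirected)."""
        self.doors[i][j] = self.doors[j][i] = True

    def route(self, start: int, goal: int) -> list[int]:
        """Breadth-first search; returns room indices from start to goal, or []."""
        parent = {start: None}
        queue = deque([start])
        while queue:
            cur = queue.popleft()
            if cur == goal:
                path = []
                while cur is not None:
                    path.append(cur)
                    cur = parent[cur]
                return path[::-1]
            for nxt, connected in enumerate(self.doors[cur]):
                if connected and nxt not in parent:
                    parent[nxt] = cur
                    queue.append(nxt)
        return []

    def num_entries(self) -> int:
        """Stored values: 4 box coordinates per room plus an n-by-n matrix."""
        n = len(self.rooms)
        return 4 * n + n * n


def grid_cells(width_m: float, height_m: float, resolution_m: float) -> int:
    """Cell count of an occupancy grid covering the same area (for comparison)."""
    return round(width_m / resolution_m) * round(height_m / resolution_m)


# Illustrative three-room layout (made-up dimensions).
topo = TopoMap()
hall = topo.add_room(Room("hall", (0.0, 0.0, 10.0, 2.0)))
kitchen = topo.add_room(Room("kitchen", (0.0, 2.0, 5.0, 10.0)))
bedroom = topo.add_room(Room("bedroom", (5.0, 2.0, 10.0, 10.0)))
topo.add_door(hall, kitchen)
topo.add_door(hall, bedroom)

path = topo.route(kitchen, bedroom)
print([topo.rooms[i].name for i in path])
print(topo.num_entries(), "values vs", grid_cells(10.0, 10.0, 0.05), "grid cells")
```

In this toy layout the planner searches over three nodes rather than a grid of cells, which illustrates why a high-level representation can reduce both storage and planning cost. The actual savings reported in the paper depend on its own representation and experiments, not on this sketch.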
