Boundary Attack++: Query-Efficient Decision-Based Adversarial Attack

Apr 03, 2019

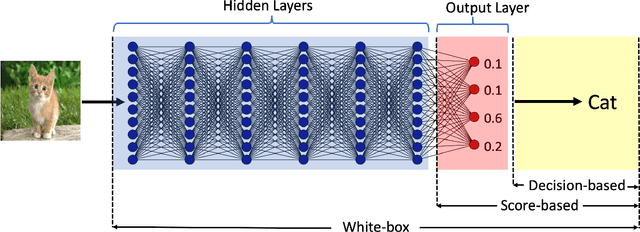

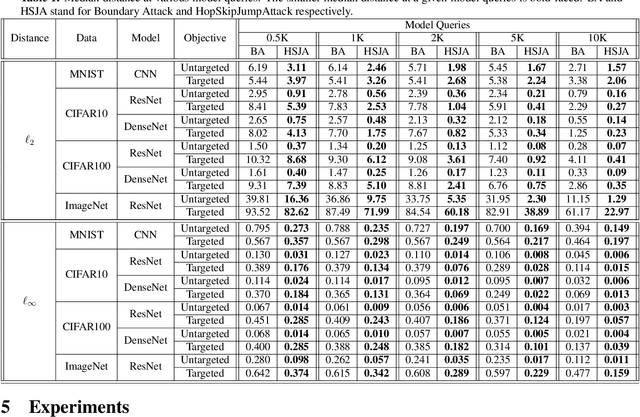

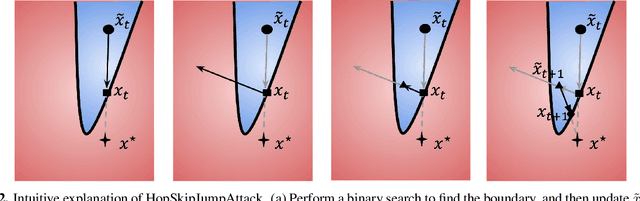

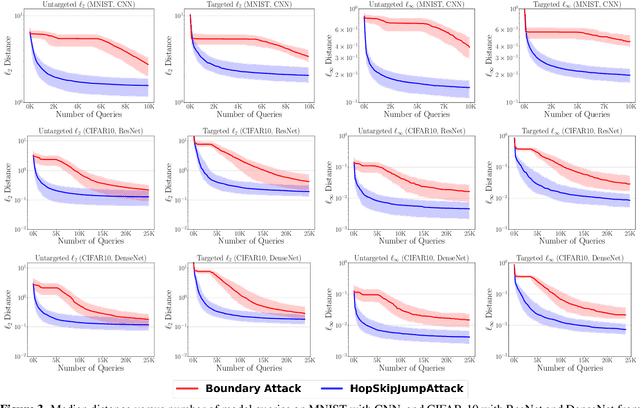

Decision-based adversarial attacks study the generation of adversarial examples that rely solely on the output labels of a target model. In this paper, we formulate the decision-based adversarial attack as an optimization problem. Motivated by zeroth-order optimization, we develop Boundary Attack++, a family of algorithms based on a novel estimate of the gradient direction that uses binary information at the decision boundary. By switching between two types of projection operators, our algorithms can optimize $L_2$ and $L_\infty$ distances, respectively. Experiments show that Boundary Attack++ requires significantly fewer model queries than Boundary Attack. We also show that our algorithm outperforms state-of-the-art white-box algorithms when attacking adversarially trained models on MNIST.
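The two ingredients named in the abstract can be illustrated with a short Python/NumPy sketch. The first is estimating a gradient direction at the decision boundary from label-only (binary) feedback. The second is moving toward the original input with either an $L_2$ or an $L_\infty$ projection. This is a minimal illustration under stated assumptions, not the authors' implementation. The binary oracle `phi`, the helper names `project`, `binary_search_boundary` and `estimate_grad_direction`, the mean-sign baseline, and the toy linear "model" are all hypothetical choices made here for illustration.

```python
import numpy as np


def project(x_orig, x_adv, alpha, norm):
    """Hypothetical projection step: pull x_adv toward x_orig by an amount set by alpha."""
    if norm == "l2":
        # Point on the segment between the two inputs; alpha=1 returns x_adv.
        return (1.0 - alpha) * x_orig + alpha * x_adv
    if norm == "linf":
        # Clip x_adv into an L-infinity ball of radius alpha around x_orig.
        return np.clip(x_adv, x_orig - alpha, x_orig + alpha)
    raise ValueError(f"unsupported norm: {norm}")


def binary_search_boundary(phi, x_orig, x_adv, norm, tol=1e-6):
    """Shrink x_adv toward x_orig until it lies just on the adversarial side of the boundary.

    Assumes phi(x) returns +1 for adversarial inputs and -1 otherwise,
    with phi(x_adv) == +1 and phi(x_orig) == -1.
    """
    low = 0.0
    high = 1.0 if norm == "l2" else float(np.max(np.abs(x_adv - x_orig)))
    while high - low > tol:
        mid = 0.5 * (low + high)
        if phi(project(x_orig, x_adv, mid, norm)) == 1:
            high = mid  # still adversarial: move closer to x_orig
        else:
            low = mid   # crossed the boundary: back off
    return project(x_orig, x_adv, high, norm)


def estimate_grad_direction(phi, x_boundary, delta, num_samples, rng):
    """Monte Carlo estimate of the gradient direction using only binary labels."""
    u = rng.standard_normal((num_samples, x_boundary.size))
    u /= np.linalg.norm(u, axis=1, keepdims=True)  # random unit directions
    signs = np.array([phi(x_boundary + delta * ub) for ub in u], dtype=float)
    mean = signs.mean()
    if abs(mean) < 1.0:
        signs -= mean  # assumed variance-reduction baseline (skipped if all labels agree)
    g = (signs[:, None] * u).mean(axis=0)
    norm = np.linalg.norm(g)
    return g / norm if norm > 0 else g


# Usage on a toy linear "model" (hypothetical): adversarial iff w . x > 0.
rng = np.random.default_rng(0)
w = rng.standard_normal(10)


def phi(x):
    return 1 if w @ x > 0 else -1


x_orig, x_adv = -w, 3 * w
x_b = binary_search_boundary(phi, x_orig, x_adv, "l2")
g = estimate_grad_direction(phi, x_b, delta=1e-2, num_samples=1000, rng=rng)
# Cosine between the estimate and the true boundary normal; expected to be close to 1 here.
print(g @ (w / np.linalg.norm(w)))
```

For a linear model, the boundary normal is known exactly, which makes it a convenient sanity check. Against a real classifier, `phi` would wrap a label query. The sign-weighted average of random directions then serves only as a direction estimate at a single boundary point.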