BODAME: Bilevel Optimization for Defense Against Model Extraction

Mar 11, 2021

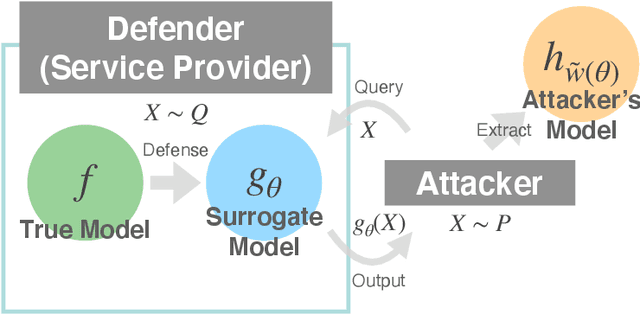

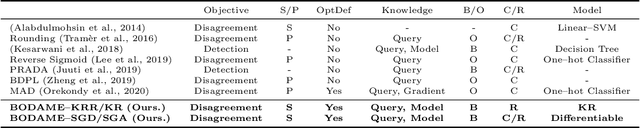

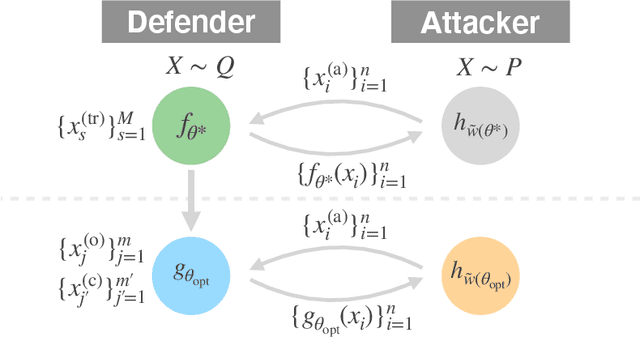

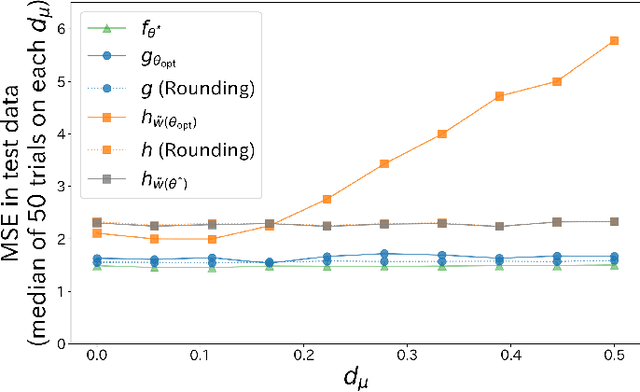

Model extraction attacks have become a serious issue for service providers that use machine learning. We consider an adversarial setting for preventing model extraction, under the assumption that attackers will make their best guess at the service provider's model using query access, and propose building a surrogate model that keeps the predictions of the attacker's model far from those of the true model. We formulate the problem as a non-convex constrained bilevel optimization problem and show that, for kernel models, it can be transformed into a non-convex quadratically constrained quadratic program with a single quadratic constraint, for which a polynomial-time algorithm finds the global optimum. Moreover, we give a tractable transformation and an algorithm for more complicated models that are learned with stochastic gradient descent-based algorithms. Numerical experiments show that the surrogate model performs well compared with existing defense models when the difference between the attacker's and the service provider's distributions is large. We also empirically confirm the generalization ability of the surrogate model.
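To give some intuition for why the kernel case can reduce to a quadratic program, the sketch below assumes the attacker fits kernel ridge regression to the responses returned for its queries. This is an illustrative assumption, since the abstract only says "kernel models". Under that assumption the attacker's fit has a closed form that is linear in the responses, so a squared distance between the attacker's predictions and any fixed target is quadratic in the responses the provider chooses to return. All names here (`rbf_kernel`, `attacker_fit_predict`, `lam`, `gamma`, the toy `sin` target) are hypothetical and not taken from the paper.

```python
import numpy as np

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-gamma * sq)

def attacker_fit_predict(x_query, y_response, x_eval, lam=1e-2, gamma=1.0):
    """Assumed attacker: kernel ridge regression on (query, response) pairs.

    The closed form alpha = (K + lam * I)^{-1} y is linear in y_response.
    """
    k_qq = rbf_kernel(x_query, x_query, gamma)
    alpha = np.linalg.solve(k_qq + lam * np.eye(len(x_query)), y_response)
    return rbf_kernel(x_eval, x_query, gamma) @ alpha

rng = np.random.default_rng(0)
x_query = rng.uniform(-1, 1, size=(30, 1))      # attacker's queries (toy data)
x_eval = np.linspace(-1, 1, 50)[:, None]        # points where models are compared
true_response = np.sin(3 * x_query[:, 0])       # stand-in for the true model's outputs
perturbation = 0.3 * rng.standard_normal(30)    # stand-in for a surrogate's deviation

pred_true = attacker_fit_predict(x_query, true_response, x_eval)
pred_pert = attacker_fit_predict(x_query, true_response + perturbation, x_eval)
pred_delta = attacker_fit_predict(x_query, perturbation, x_eval)

# Linearity check: fitting on (y + d) equals fitting on y plus fitting on d.
linearity_gap = np.max(np.abs(pred_pert - (pred_true + pred_delta)))

# How far the perturbed responses move the attacker's model from the unperturbed fit.
attacker_shift = np.mean((pred_pert - pred_true) ** 2)
```

Here `linearity_gap` should be at the level of floating-point error, which is the property that makes the attacker's best response easy to substitute into an outer objective. The random perturbation is only a placeholder: the paper optimizes the surrogate, whereas this sketch just shows the structure such an optimization could exploit.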
