Blurring Fools the Network -- Adversarial Attacks by Feature Peak Suppression and Gaussian Blurring


Dec 21, 2020

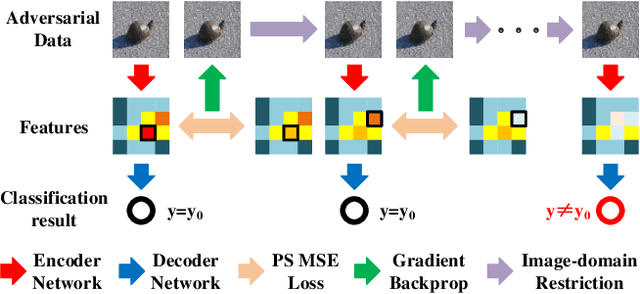

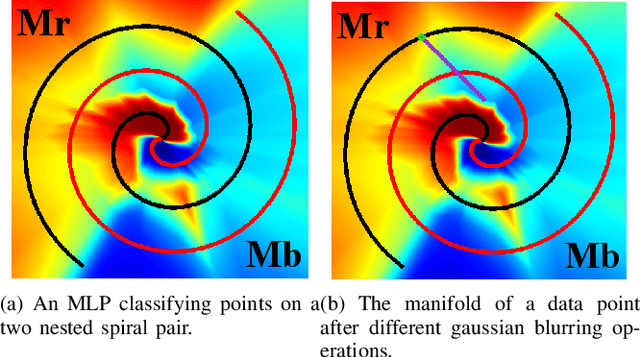

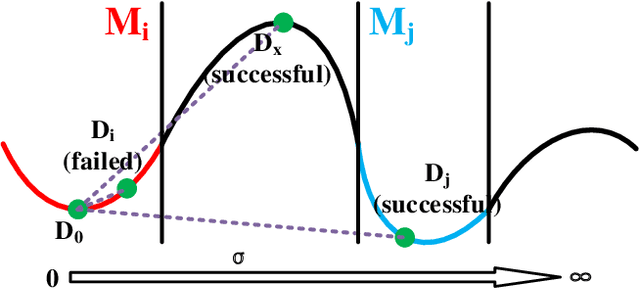

Existing pixel-level adversarial attacks on neural networks may fall short in real-world scenarios, because pixel-level changes to the data cannot be fully delivered to the network after camera capture and multiple image preprocessing steps. In this paper, we argue from a different perspective: Gaussian blurring, a common image preprocessing technique, can itself act as an attack in specific cases, thus exposing the network to real-world adversarial attacks. We first propose a demonstration attack named peak suppression (PS), which suppresses the values of peak elements in the features of the data. Building on the blurring nature of PS, we then apply Gaussian blurring to the data to investigate its potential influence on, and threat to, the performance of the network. Experimental results show that PS and well-designed Gaussian blurring can form adversarial attacks that completely change the classification results of a well-trained target network. Given the strong physical significance and wide use of Gaussian blurring, the proposed approach will also be capable of conducting real-world attacks.
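The two operations named in the abstract can be illustrated with a minimal sketch in plain Python. This is not the authors' implementation: the abstract does not specify how PS selects or scales peak elements, so `suppress_peaks` and its `cap_fraction` parameter are hypothetical stand-ins that simply clamp values to a fraction of the maximum. The blur is a standard separable Gaussian filter with replicated borders; the kernel radius of three standard deviations is likewise an assumption.

```python
import math


def gaussian_kernel_1d(sigma, radius=None):
    """Return a normalized 1-D Gaussian kernel as a list of weights."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    if radius is None:
        # Assumption: truncate the kernel at about three standard deviations.
        radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _convolve_rows(image, kernel):
    """Convolve every row with the kernel, replicating edge pixels."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                # Clamp the source index so borders reuse the edge pixel.
                src = min(max(x + k - radius, 0), width - 1)
                acc += w * row[src]
            new_row.append(acc)
        out.append(new_row)
    return out


def _transpose(image):
    return [list(col) for col in zip(*image)]


def gaussian_blur(image, sigma):
    """Blur a 2-D grayscale image (list of rows of floats) separably."""
    kernel = gaussian_kernel_1d(sigma)
    horizontal = _convolve_rows(image, kernel)
    # Blur the columns by transposing, filtering rows, and transposing back.
    vertical = _convolve_rows(_transpose(horizontal), kernel)
    return _transpose(vertical)


def suppress_peaks(features, cap_fraction=0.5):
    """Hypothetical peak suppression: clamp values to a fraction of the maximum.

    Assumes non-negative features (for example, post-ReLU activations).
    """
    cap = cap_fraction * max(features)
    return [min(v, cap) for v in features]


# Usage: blur a 5x5 image containing a single bright pixel.
impulse = [[0.0] * 5 for _ in range(5)]
impulse[2][2] = 1.0
blurred = gaussian_blur(impulse, sigma=1.0)
```

Both functions flatten the largest values while leaving the overall layout of the data intact, which is the shared "blurring" behavior the abstract refers to. Whether such a perturbation actually changes a classifier's output depends on the target network and on the chosen `sigma`, which this sketch does not model.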
