Blockchain-based Optimized Client Selection and Privacy Preserved Framework for Federated Learning

Paper and Code

Jul 25, 2023

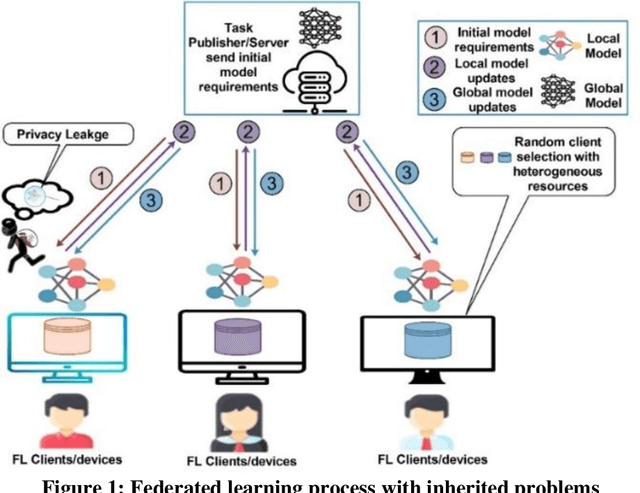

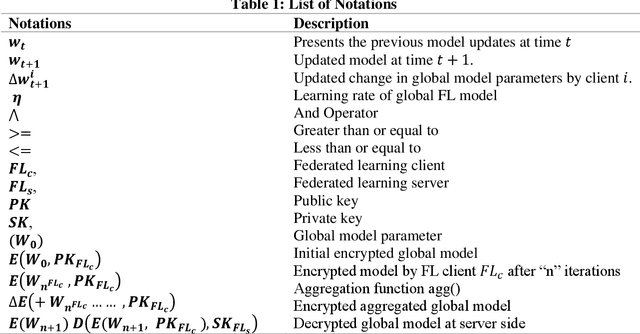

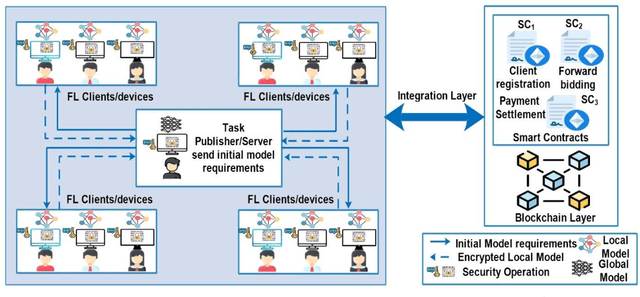

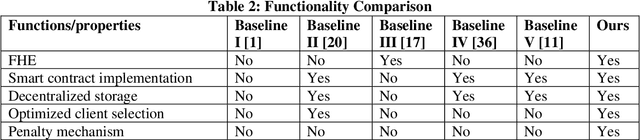

Federated learning (FL) is a distributed mechanism that trains large-scale neural network models with the participation of multiple clients. The data remains on the clients' devices, and only the local model updates are shared. Because of this feature, federated learning is considered a secure solution to data privacy issues. However, the typical FL structure relies on a client-server architecture, which creates a single point of failure (SPoF), and the random selection of clients for model training compromises model accuracy. Furthermore, adversaries may mount inference attacks on privacy, such as gradient leakage attacks. In this context, we propose a blockchain-based optimized client selection and privacy-preserved framework. We design three kinds of smart contracts: 1) registration of clients, 2) forward bidding to select optimized clients for FL model training, and 3) payment settlement and reward. Moreover, fully homomorphic encryption with the Cheon, Kim, Kim, and Song (CKKS) method is applied to the local model updates before they are transmitted to the server. Finally, we evaluate the proposed method on a benchmark dataset and compare it with state-of-the-art studies. As a result, we achieve a higher accuracy rate and a privacy-preserved FL framework that is decentralized in nature.
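The flow across the three smart contracts can be pictured with a minimal off-chain sketch in Python. This is not the authors' contract code. The abstract does not state the bidding rule, so the ranking used here (local data size per unit of asked price) is an assumption, and the names `Bid`, `FLMarket`, `register`, `select`, `settle`, and `run_round` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Bid:
    client_id: str
    price: float      # hypothetical field: reward the client asks for
    data_size: int    # hypothetical field: number of local training samples


@dataclass
class FLMarket:
    """In-memory stand-in for the three contracts; not on-chain code."""
    registered: set = field(default_factory=set)
    balances: dict = field(default_factory=dict)

    def register(self, client_id: str) -> None:
        # Contract 1: registration of clients.
        self.registered.add(client_id)
        self.balances.setdefault(client_id, 0.0)

    def select(self, bids: list, k: int) -> list:
        # Contract 2: forward bidding.
        # Assumed rule: keep registered bidders with a positive price,
        # rank them by data_size / price, and return the top k.
        valid = [b for b in bids if b.client_id in self.registered and b.price > 0]
        ranked = sorted(valid, key=lambda b: b.data_size / b.price, reverse=True)
        return ranked[:k]

    def settle(self, winners: list) -> None:
        # Contract 3: payment settlement and reward.
        # Each selected client is credited the price it bid.
        for b in winners:
            self.balances[b.client_id] += b.price


def run_round():
    market = FLMarket()
    for cid in ("a", "b", "c"):
        market.register(cid)
    bids = [
        Bid("a", 2.0, 1000),
        Bid("b", 1.0, 800),
        Bid("c", 4.0, 1200),
        Bid("d", 0.5, 5000),  # never registered, so the bid is ignored
    ]
    winners = market.select(bids, k=2)
    market.settle(winners)
    return [b.client_id for b in winners], market.balances
```

Calling `run_round()` selects two of the three registered bidders and credits only their balances. In the framework described above, the selected clients would then train locally and encrypt their updates with CKKS before transmission, a step this sketch leaves out.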
