Blissful Ignorance: Anti-Transfer Learning for Task Invariance

Paper and Code

Jun 11, 2020

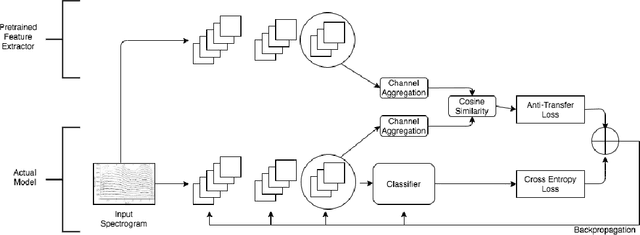

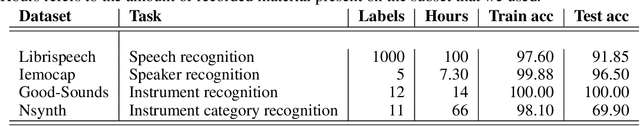

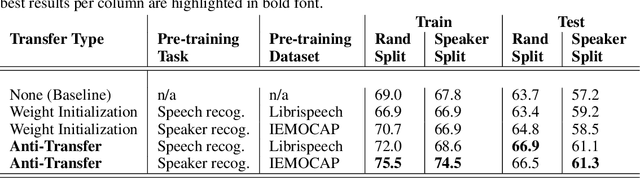

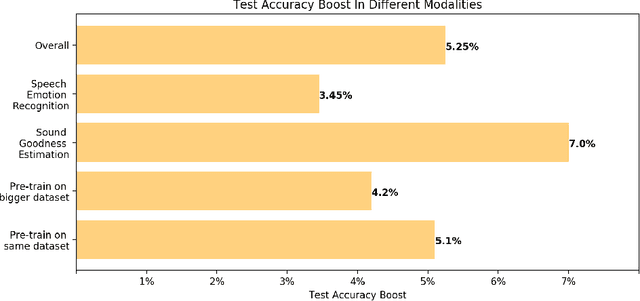

We introduce the novel concept of anti-transfer learning for neural networks. Standard transfer learning assumes that representations learned for one task will be useful for another. Anti-transfer learning instead avoids learning representations that were already learned for a different task, when that task is irrelevant and potentially misleading for the new one and should therefore be ignored. Examples of such task pairs are style versus content recognition, or pitch versus timbre recognition from audio. By penalizing similarity between the features of the network being trained on the new task and the previously learned features, coincidental correlations between the target task and the unrelated task can be avoided, yielding more reliable representations and better performance on the target task. We implemented anti-transfer learning with different similarity metrics and aggregation functions, and evaluated the approach in the audio domain on different tasks and setups, using four datasets in total. The results show that anti-transfer learning consistently improves accuracy in all test cases, indicating that it can push the network to learn more representative features for the task at hand.
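The similarity penalty described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the Gram-matrix aggregation, the squared cosine similarity, and the weight `beta` are all assumptions chosen for illustration, since the abstract only states that several similarity metrics and aggregation functions were tried.

```python
import numpy as np

def gram_matrix(features):
    """Aggregate a (channels, ...) feature map into a (channels, channels)
    Gram matrix. Assumed aggregation, chosen for illustration only."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)
    return flat @ flat.T / flat.shape[1]

def cosine_similarity(a, b, eps=1e-8):
    """Cosine similarity between two arrays, flattened to vectors."""
    a = a.ravel()
    b = b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

def anti_transfer_loss(task_loss, target_feats, pretrained_feats, beta=1.0):
    """Task loss plus a penalty that grows as the target network's features
    resemble those of a frozen network trained on the unrelated task.
    `beta` is a hypothetical weighting hyperparameter."""
    sim = cosine_similarity(gram_matrix(target_feats),
                            gram_matrix(pretrained_feats))
    # Squaring keeps the penalty non-negative (an assumption of this sketch).
    return task_loss + beta * sim ** 2
```

In a training loop, `pretrained_feats` would come from a frozen network trained on the task to be ignored, and `target_feats` from the corresponding layer of the network being trained. Minimizing the combined loss then pushes the two sets of features apart while still fitting the target task.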
