Bisimulation-based Approximate Lifted Inference

May 09, 2012

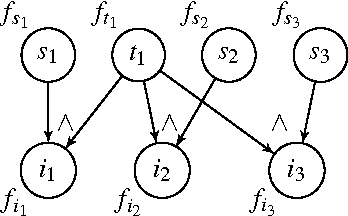

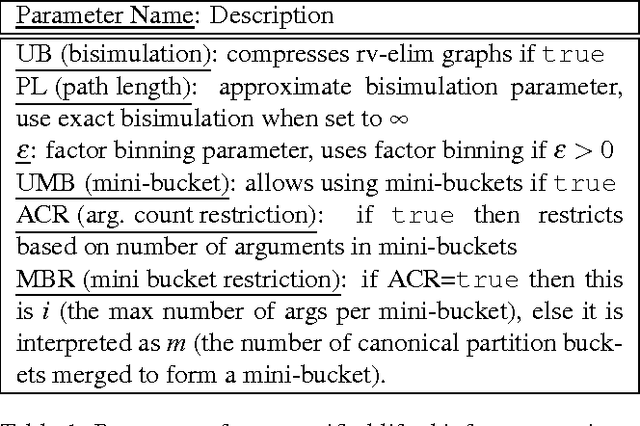

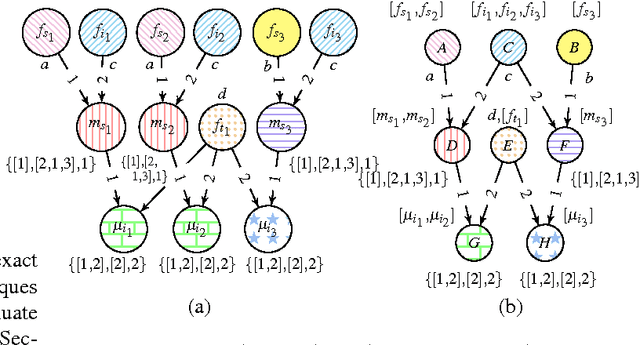

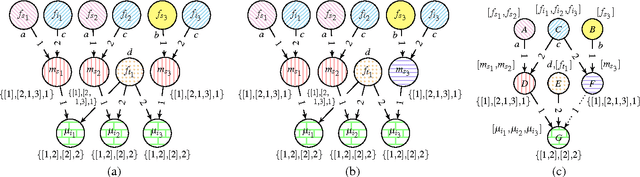

There has been a great deal of recent interest in methods for performing lifted inference; however, most of this work assumes that the first-order model is given as input to the system. Here, we describe lifted inference algorithms that determine symmetries and automatically lift the probabilistic model to speed up inference. In particular, we describe approximate lifted inference techniques that allow the user to trade off inference accuracy for computational efficiency by using a handful of tunable parameters, while keeping the error bounded. Our algorithms are closely related to the graph-theoretic concept of bisimulation. We report experiments on both synthetic and real data to show that, in the presence of symmetries, run-times for inference can be improved significantly, with approximate lifted inference providing orders-of-magnitude speedups over ground inference.
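
To make the bisimulation idea concrete, here is a minimal sketch of generic partition refinement on a labeled directed graph. It is not the paper's algorithm: the function name `bisimulation_partition`, the toy graph, and the `max_rounds` parameter are all assumptions introduced for illustration. Nodes that end up in the same block are indistinguishable by label and by the blocks of their successors, which is the kind of symmetry a lifted model could exploit. Capping the number of refinement rounds gives a coarser partition, which only loosely mirrors the accuracy-for-efficiency trade-off the abstract describes.

```python
from collections import defaultdict


def bisimulation_partition(nodes, edges, labels, max_rounds=None):
    """Group nodes into blocks by iterated signature refinement.

    nodes:      iterable of hashable node ids
    edges:      iterable of (src, dst) pairs
    labels:     dict mapping each node to an initial label
    max_rounds: hypothetical knob; None refines until the partition is stable,
                a small integer stops early and returns a coarser partition
    """
    nodes = list(nodes)
    succ = defaultdict(list)
    for src, dst in edges:
        succ[src].append(dst)

    # Initial partition: nodes with the same label share a block.
    block = {n: labels[n] for n in nodes}
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        # A node's signature is its current block plus the set of blocks
        # reachable in one step.
        sig = {n: (block[n], frozenset(block[m] for m in succ[n])) for n in nodes}
        ids = {}
        new_block = {n: ids.setdefault(sig[n], len(ids)) for n in nodes}
        rounds += 1
        # Refinement only ever splits blocks, so an unchanged block count
        # means the partition is stable.
        if len(set(new_block.values())) == len(set(block.values())):
            break
        block = new_block

    groups = defaultdict(list)
    for n in nodes:
        groups[block[n]].append(n)
    return sorted(sorted(g) for g in groups.values())


# Toy graph (made up for this example): a1 and a2 both point to b, b points to c.
toy_nodes = ["a1", "a2", "b", "c"]
toy_edges = [("a1", "b"), ("a2", "b"), ("b", "c")]
toy_labels = {"a1": "x", "a2": "x", "b": "x", "c": "y"}

exact = bisimulation_partition(toy_nodes, toy_edges, toy_labels)
coarse = bisimulation_partition(toy_nodes, toy_edges, toy_labels, max_rounds=0)
print(exact)   # [['a1', 'a2'], ['b'], ['c']]
print(coarse)  # [['a1', 'a2', 'b'], ['c']]
```

In the toy graph, `a1` and `a2` are merged because they carry the same label and reach the same block, so an inference procedure could in principle treat them as one representative. With `max_rounds=0` no refinement happens and `b` is lumped in with them, trading precision for a smaller model.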
