BiQUE: Biquaternionic Embeddings of Knowledge Graphs

Sep 29, 2021

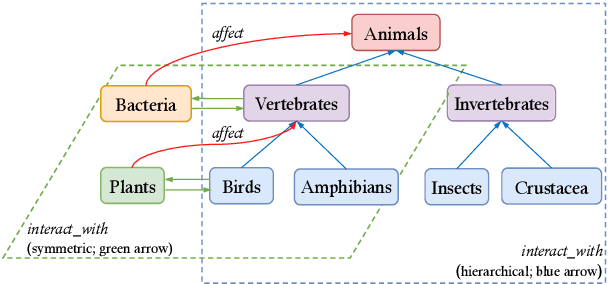

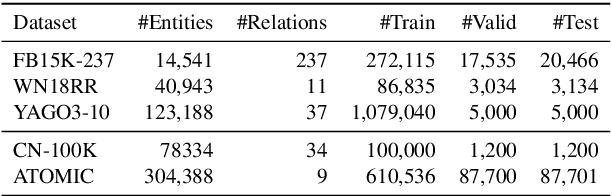

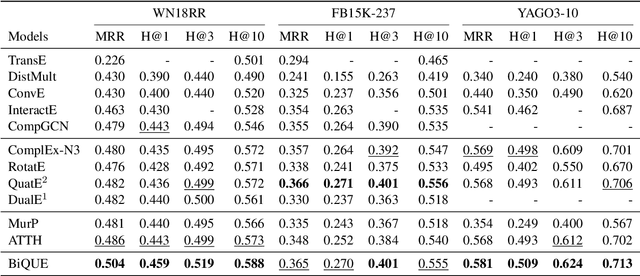

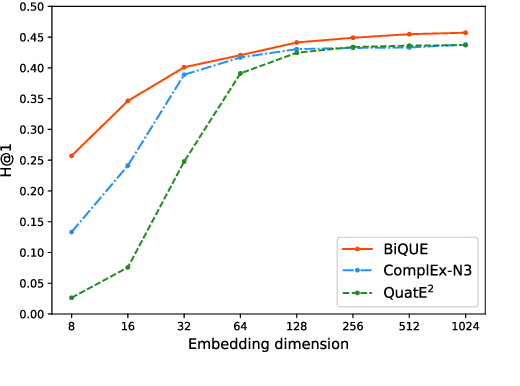

Knowledge graph embeddings (KGEs) compactly encode multi-relational knowledge graphs (KGs). Existing KGE models rely on geometric operations to model relational patterns. Euclidean (circular) rotation is useful for modeling patterns such as symmetry, but cannot represent hierarchical semantics. In contrast, hyperbolic models are effective at modeling hierarchical relations, but perform less well on the patterns at which circular rotation excels. It is therefore crucial for KGE models to unify multiple geometric transformations so as to fully cover the varied relations in KGs. To do so, we propose BiQUE, a novel model that employs biquaternions to integrate multiple geometric transformations, viz., scaling, translation, Euclidean rotation, and hyperbolic rotation. BiQUE makes the best trade-offs among geometric operators during training, picking the best one (or their best combination) for each relation. Experiments on five datasets show BiQUE's effectiveness.
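
To make the role of biquaternions more concrete, the following is a minimal sketch, not the authors' implementation. It assumes a biquaternion is stored as four complex coefficients (w, x, y, z) and uses the standard Hamilton product. The helper names `bq_mul`, `rotor`, and `quad_norm` are hypothetical and chosen only for illustration. The sketch shows that one and the same product covers a circular rotation (real angle) and a hyperbolic rotation (purely imaginary angle), which is the kind of unification the abstract describes.

```python
import numpy as np

def bq_mul(p, q):
    """Hamilton product of two biquaternions.

    Each argument is a length-4 sequence of complex coefficients
    (w, x, y, z) for the basis 1, i, j, k. (Illustrative helper.)
    """
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return np.array([
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    ], dtype=complex)

def rotor(theta):
    """Return cos(theta) + sin(theta) * i as a biquaternion.

    A real theta gives a circular rotor. A purely imaginary theta = 1j * phi
    gives cosh(phi) + 1j * sinh(phi) * i, a hyperbolic rotor, because
    cos(1j * phi) = cosh(phi) and sin(1j * phi) = 1j * sinh(phi).
    """
    return np.array([np.cos(theta), np.sin(theta), 0, 0], dtype=complex)

def quad_norm(q):
    """Quadratic form w^2 + x^2 + y^2 + z^2 (no complex conjugation)."""
    return np.sum(np.asarray(q, dtype=complex) ** 2)

if __name__ == "__main__":
    circular = rotor(0.3)        # real angle
    hyperbolic = rotor(0.4j)     # imaginary angle
    combined = bq_mul(circular, hyperbolic)
    # Both rotors share the axis i, so the product is the rotor of the summed angle.
    print(np.allclose(combined, rotor(0.3 + 0.4j)))
    print(quad_norm(circular), quad_norm(hyperbolic))
```

The sketch covers only the two rotations. Scaling and translation, which the abstract also lists, and any scoring function or training procedure are left out because the abstract does not specify them.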
