Bi-stochastically normalized graph Laplacian: convergence to manifold Laplacian and robustness to outlier noise


Jun 22, 2022

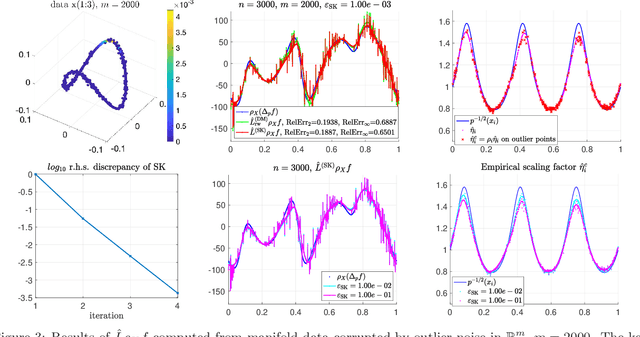

Bi-stochastic normalization of a kernelized graph affinity matrix provides an alternative normalization scheme for graph Laplacian methods in graph-based data analysis, and it can be computed efficiently in practice by Sinkhorn-Knopp (SK) iterations. This paper proves the convergence, with rates, of the bi-stochastically normalized graph Laplacian to the manifold (weighted) Laplacian when $n$ data points are sampled i.i.d. from a general $d$-dimensional manifold embedded in a possibly high-dimensional space. Under a certain joint limit of $n \to \infty$ and kernel bandwidth $\epsilon \to 0$, the point-wise convergence rate of the graph Laplacian operator (under the 2-norm) is proved to be $O(n^{-1/(d/2+3)})$ at finite large $n$, up to log factors, achieved at the scaling $\epsilon \sim n^{-1/(d/2+3)}$. When the manifold data are corrupted by outlier noise, we theoretically prove point-wise consistency of the graph Laplacian, with a rate that matches the one for clean manifold data up to an additional error term proportional to the bound on the mutual inner products of the noise vectors. Our analysis suggests that, in the setting considered in this paper, an approximate rather than exact bi-stochastic normalization achieves the same consistency rate. Motivated by the analysis, we propose an approximate and constrained matrix scaling problem that can be solved by SK iterations with early termination, and we apply it to simulated manifold data, both clean and with outlier noise. Numerical experiments support our theoretical results and show the robustness of the bi-stochastically normalized graph Laplacian to outlier noise.
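To make the idea of approximate bi-stochastic normalization concrete, the following is a minimal Python sketch and not the authors' implementation. It assumes a Gaussian kernel with a zeroed diagonal, a damped symmetric Sinkhorn-type update, and a simple row-sum tolerance as the early-termination rule; the function names (`gaussian_affinity`, `symmetric_sinkhorn`), the kernel scaling `exp(-d^2 / (4 * eps))`, and the parameter values are all illustrative assumptions. The constrained scaling problem proposed in the paper may differ in its details.

```python
import numpy as np


def gaussian_affinity(X, eps):
    """Gaussian kernel affinity with zero diagonal (assumed kernel form)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    d2 = np.maximum(d2, 0.0)           # guard against tiny negative round-off
    W = np.exp(-d2 / (4.0 * eps))      # bandwidth convention is an assumption
    np.fill_diagonal(W, 0.0)           # remove self-affinities
    return W


def symmetric_sinkhorn(W, tol=1e-3, max_iter=1000):
    """Find eta > 0 so that diag(eta) @ W @ diag(eta) has row sums close to 1.

    Uses the damped symmetric update eta <- sqrt(eta / (W @ eta)), whose
    fixed points satisfy eta * (W @ eta) = 1. The loop stops early once
    every row sum is within `tol` of 1 (approximate scaling).
    """
    eta = np.ones(W.shape[0])
    n_iter = 0
    for n_iter in range(max_iter):
        row_sums = eta * (W @ eta)
        if np.max(np.abs(row_sums - 1.0)) < tol:
            break
        eta = np.sqrt(eta / (W @ eta))
    return eta, n_iter


# Usage: noisy-free samples from a unit circle embedded in R^2 (d = 1).
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=100)
X = np.column_stack([np.cos(theta), np.sin(theta)])

W = gaussian_affinity(X, eps=0.05)
eta, n_iter = symmetric_sinkhorn(W, tol=1e-3)
P = eta[:, None] * W * eta[None, :]    # approximately bi-stochastic, symmetric
max_row_error = np.max(np.abs(P.sum(axis=1) - 1.0))
```

Because the scaling is symmetric, a single vector `eta` suffices, and loosening `tol` trades exactness of the row sums for fewer iterations, which is the kind of early termination the abstract refers to.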
