Beyond Voice Identity Conversion: Manipulating Voice Attributes by Adversarial Learning of Structured Disentangled Representations


Jul 27, 2021

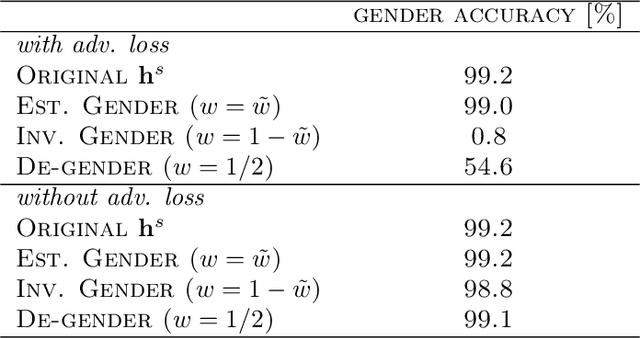

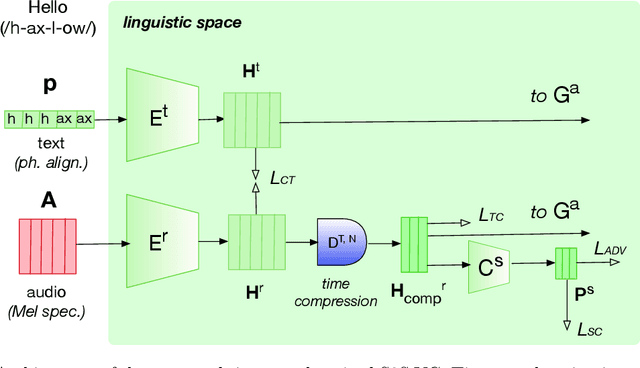

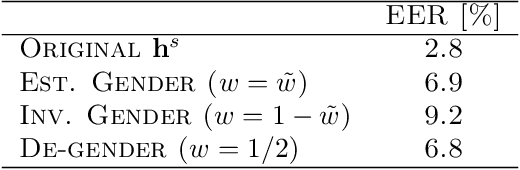

Voice conversion (VC) consists of digitally altering the voice of an individual to manipulate part of its content, primarily its identity, while leaving the rest unchanged. Research in neural VC has achieved considerable breakthroughs, with the capacity to falsify a voice identity from a small amount of data and with highly realistic rendering. This paper goes beyond voice identity and presents a neural architecture that allows the manipulation of voice attributes (e.g., gender and age). Leveraging the latest advances in adversarial learning of structured speech representations, a novel structured neural network is proposed in which multiple auto-encoders are used to encode speech as a set of ideally independent linguistic and extra-linguistic representations, which are learned adversarially and can be manipulated during VC. Moreover, the proposed architecture is time-synchronized, so that the original voice timing is preserved during conversion, which allows lip-sync applications. Applied to voice gender conversion on the real-world VCTK dataset, the proposed architecture can successfully learn a gender-independent representation and convert the voice gender with very high efficiency and naturalness.
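The conversion interface described in the abstract (encode speech into separate codes, replace one attribute code, decode with the original timing) can be illustrated with a toy sketch. This is not the paper's model: the learned, adversarially trained auto-encoders are replaced here by a hand-written mean removal, and the names `Encoded`, `encode`, `decode`, and `convert` are hypothetical. The sketch only shows that swapping an utterance-level attribute code while keeping one content code per frame preserves the number of frames.

```python
from dataclasses import dataclass
from typing import List

Frame = List[float]  # one feature vector per time step (toy stand-in for a spectral frame)


@dataclass
class Encoded:
    content: List[Frame]  # one code per input frame, so timing is preserved
    attribute: float      # single utterance-level code (toy stand-in for e.g. gender)


def encode(frames: List[Frame]) -> Encoded:
    # ASSUMPTION: the attribute is modelled as the global mean of all features.
    # A real system would learn this split with adversarial training instead.
    values = [v for frame in frames for v in frame]
    attribute = sum(values) / len(values)
    content = [[v - attribute for v in frame] for frame in frames]
    return Encoded(content=content, attribute=attribute)


def decode(code: Encoded) -> List[Frame]:
    # Recombine the per-frame content codes with the attribute code.
    return [[v + code.attribute for v in frame] for frame in code.content]


def convert(frames: List[Frame], target_attribute: float) -> List[Frame]:
    # Keep the content codes, replace only the attribute code.
    code = encode(frames)
    return decode(Encoded(content=code.content, attribute=target_attribute))


source = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
converted = convert(source, target_attribute=10.0)
```

In this toy setting the converted utterance has the same number of frames as the source, and re-encoding it recovers the same content codes with the new attribute value. The paper's contribution lies in learning such a separation from real speech, which this sketch does not attempt.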
