Beyond NDCG: behavioral testing of recommender systems with RecList

Nov 18, 2021

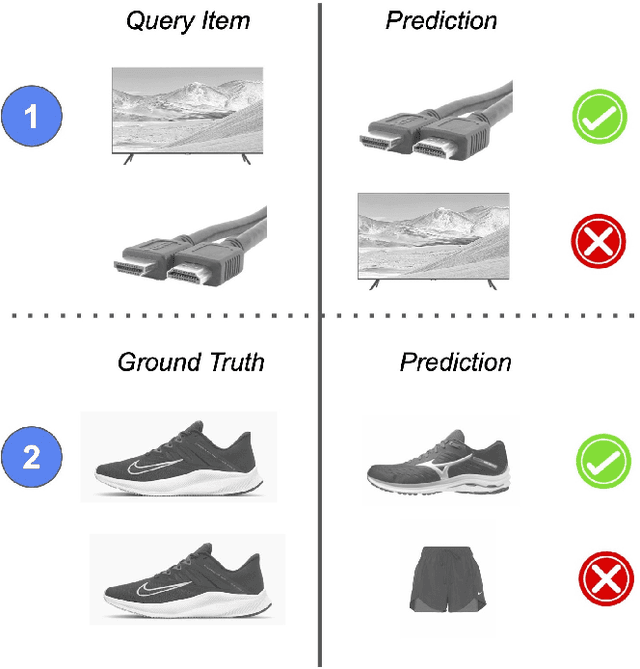

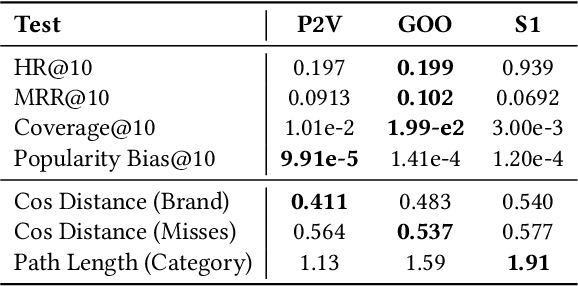

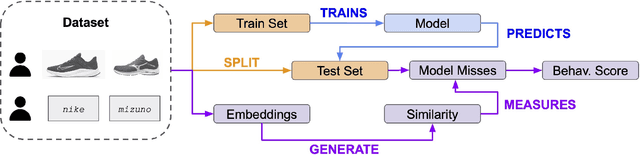

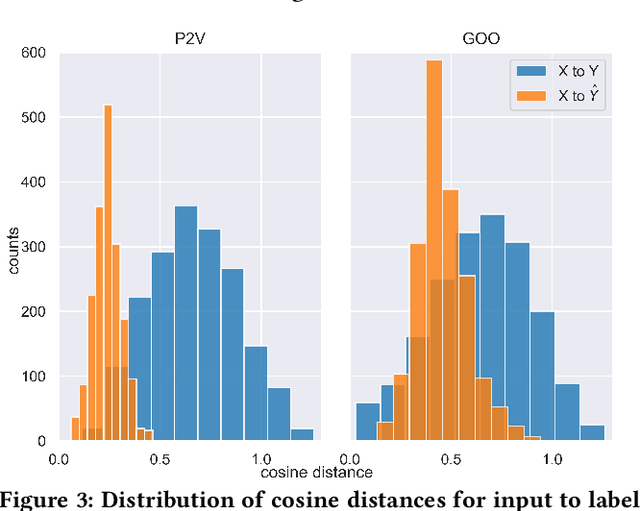

As with most machine learning systems, recommender systems are typically evaluated through performance metrics computed over held-out data points. However, real-world behavior is undoubtedly nuanced: ad hoc error analysis and deployment-specific tests must be employed to ensure the desired quality in actual deployments. In this paper, we propose RecList, a behavioral testing methodology. RecList organizes recommender systems by use case and introduces a general plug-and-play procedure to scale up behavioral testing. We demonstrate its capabilities by analyzing known algorithms and black-box commercial systems, and we release RecList as an open-source, extensible package for the community.
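To make the contrast between an aggregate held-out metric and a behavioral check concrete, here is a minimal sketch in plain Python. It does not use the RecList package or its API; the function names (`hit_rate`, `hit_rate_by_slice`), the toy data, and the "head"/"tail" popularity slices are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence


def hit_rate(predictions: Sequence[Sequence[str]], targets: Sequence[str], k: int = 3) -> float:
    """Fraction of test cases whose target item appears in the top-k predictions."""
    if not targets:
        return 0.0
    hits = sum(1 for preds, target in zip(predictions, targets) if target in preds[:k])
    return hits / len(targets)


def hit_rate_by_slice(
    predictions: Sequence[Sequence[str]],
    targets: Sequence[str],
    slice_of: Callable[[str], str],
    k: int = 3,
) -> Dict[str, float]:
    """Behavioral-style check: the same metric, broken down by a slice of the data."""
    grouped: Dict[str, List[List]] = defaultdict(lambda: [[], []])
    for preds, target in zip(predictions, targets):
        bucket = grouped[slice_of(target)]
        bucket[0].append(preds)
        bucket[1].append(target)
    return {name: hit_rate(p, t, k) for name, (p, t) in grouped.items()}


# Toy data (hypothetical): four test cases with top-3 recommendations each.
predictions = [["a", "b", "c"], ["a", "b", "d"], ["a", "c", "b"], ["b", "a", "c"]]
targets = ["a", "b", "x", "y"]

# Assumed slicing rule: items "a" and "b" are popular ("head"), the rest are rare ("tail").
popular_items = {"a", "b"}


def popularity_slice(item: str) -> str:
    return "head" if item in popular_items else "tail"


overall = hit_rate(predictions, targets)
per_slice = hit_rate_by_slice(predictions, targets, popularity_slice)

print(f"overall hit rate: {overall}")
print(f"hit rate by slice: {per_slice}")
```

In this toy case the single aggregate number hides the fact that every hit comes from popular items, which is the kind of deployment-specific behavior the abstract argues a held-out metric alone can miss.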

* Alpha draft