Beyond Heisenberg Limit Quantum Metrology through Quantum Signal Processing


Sep 22, 2022

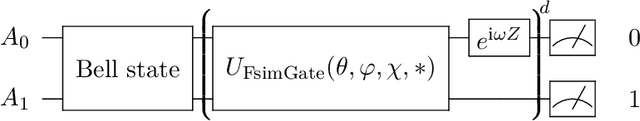

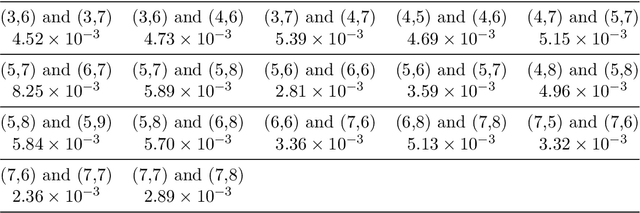

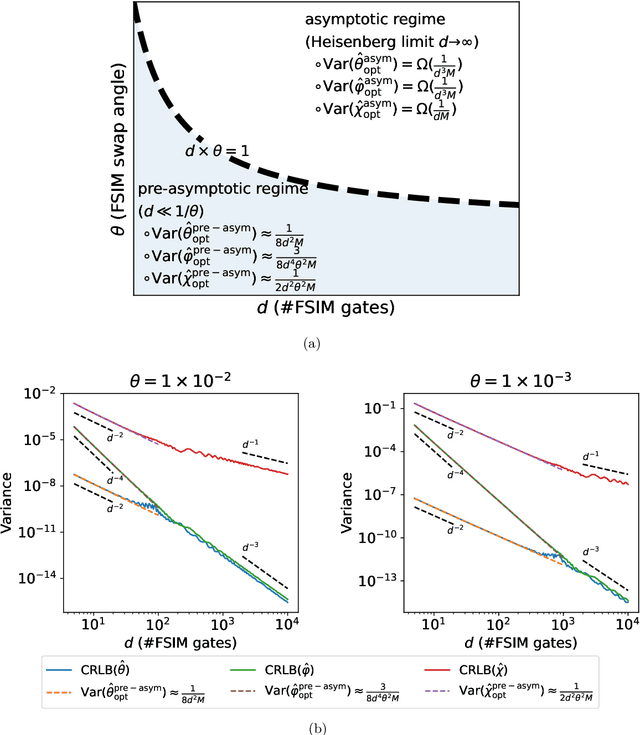

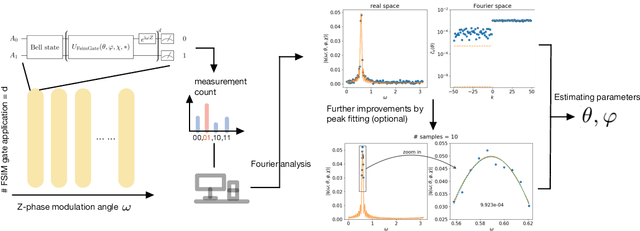

Leveraging quantum effects such as entanglement and coherence in metrology allows one to measure parameters with enhanced sensitivity. However, time-dependent noise can disrupt such Heisenberg-limited amplification. We propose a quantum-metrology method based on the quantum-signal-processing framework to overcome these realistic noise-induced limitations in practical quantum metrology. Our algorithm separates the gate parameter $\varphi$ (single-qubit Z phase), which is susceptible to time-dependent error, from the target gate parameter $\theta$ (swap angle between the $|10\rangle$ and $|01\rangle$ states), which is largely free of time-dependent error. Our method achieves an accuracy of $10^{-4}$ radians in standard deviation for learning $\theta$ in superconducting-qubit experiments, outperforming existing alternative schemes by two orders of magnitude. We also demonstrate increased robustness in learning time-dependent gate parameters through the fast Fourier transform and sequential phase difference. We show both theoretically and numerically that the optimal metrology variance scaling undergoes a transition as a function of circuit depth $d$, from the pre-asymptotic regime $d \ll 1/\theta$ to the Heisenberg limit as $d \to \infty$. Remarkably, in the pre-asymptotic regime our method's estimation variance on the time-sensitive parameter $\varphi$ scales faster than the asymptotic Heisenberg limit as a function of depth, $\text{Var}(\hat{\varphi}) \approx 1/d^4$. Our work is the first quantum-signal-processing algorithm that demonstrates practical application in laboratory quantum computers.
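To make the scaling claim concrete, the following minimal sketch compares a Heisenberg-limited variance, assumed here to be $1/d^2$, with the pre-asymptotic $1/d^4$ scaling quoted in the abstract. It is not the paper's estimator. The prefactors are set to 1, the depth values are arbitrary, the condition $d \ll 1/\theta$ is not modeled, and the helper `loglog_slope` is a hypothetical name introduced only for this illustration.

```python
import math


def loglog_slope(depths, variances):
    """Least-squares slope of log(variance) versus log(depth).

    For a power law Var = c / d**k the slope is -k.
    """
    xs = [math.log(d) for d in depths]
    ys = [math.log(v) for v in variances]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sum((x - mean_x) ** 2 for x in xs)
    return numerator / denominator


# Illustrative circuit depths (assumed values, not taken from the paper).
depths = [2, 4, 8, 16, 32, 64]

# Assumed idealized variance models with unit prefactors.
var_heisenberg = [1.0 / d**2 for d in depths]      # Heisenberg-limit scaling
var_pre_asymptotic = [1.0 / d**4 for d in depths]  # scaling quoted for phi

slope_hl = loglog_slope(depths, var_heisenberg)
slope_pre = loglog_slope(depths, var_pre_asymptotic)

print(f"Heisenberg-limit slope: {slope_hl:.3f}")
print(f"Pre-asymptotic slope:   {slope_pre:.3f}")
```

On a log-log plot the fitted slope is the negative of the scaling exponent, so a steeper slope means the variance falls faster with each increase in depth. The same fit could in principle be applied to measured variances to check which regime an experiment is in.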
