Beyond Class-Conditional Assumption: A Primary Attempt to Combat Instance-Dependent Label Noise


Dec 10, 2020

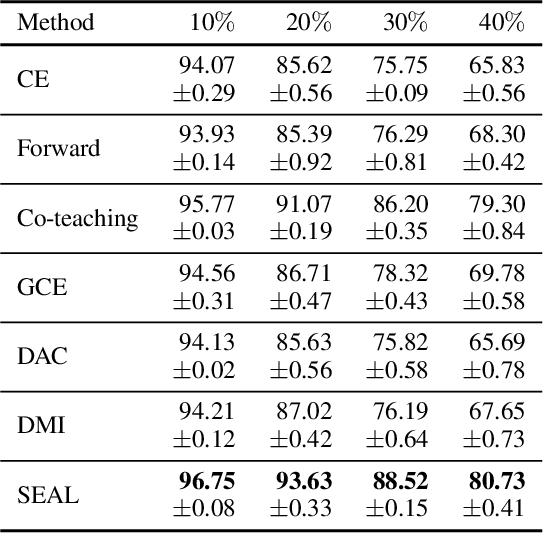

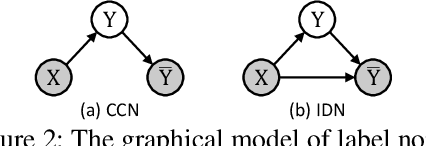

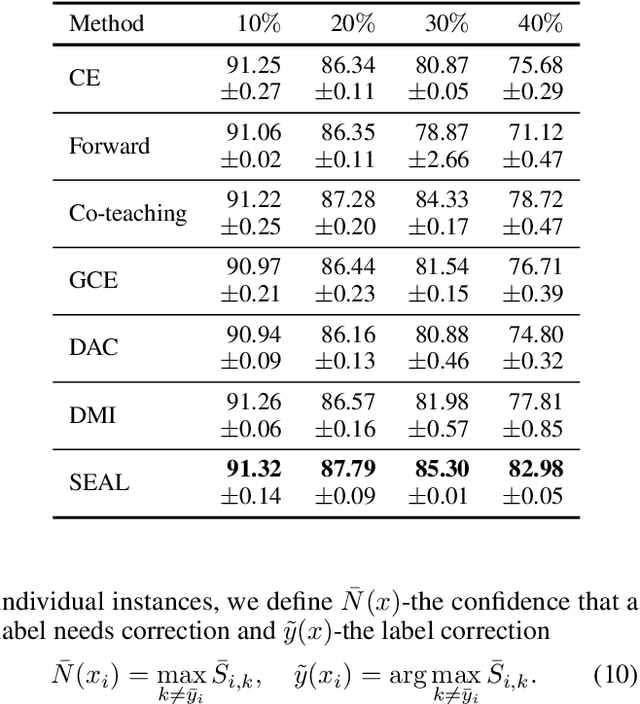

Supervised learning under label noise has seen numerous advances recently, yet existing theoretical findings and empirical results broadly build on the class-conditional noise (CCN) assumption that the noise is independent of the input features given the true label. In this work, we present a theoretical hypothesis test and prove that noise in real-world datasets is unlikely to be CCN, which confirms that label noise should depend on the instance and justifies the urgent need to go beyond the CCN assumption. The theoretical results motivate us to study the more general and practically relevant instance-dependent noise (IDN). To stimulate the development of theory and methodology on IDN, we formalize an algorithm to generate controllable IDN and present both theoretical and empirical evidence to show that IDN is semantically meaningful and challenging. As a primary attempt to combat IDN, we present a tiny algorithm termed self-evolution average label (SEAL), which not only stands out under IDN with various noise fractions, but also improves generalization on the real-world noise benchmark Clothing1M. Our code is released. Notably, our theoretical analysis in Section 2 provides rigorous motivation for studying IDN, an important topic that deserves more research attention in the future.
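To make the distinction between CCN and IDN concrete, the following is a minimal sketch, not the paper's IDN generation algorithm or SEAL. The function names `flip_ccn` and `flip_idn` and the feature-based flip rule are hypothetical, chosen only for illustration. Under CCN the noisy label is drawn from a transition matrix row indexed by the true label alone. Under IDN the flip probability is allowed to depend on the instance's features.

```python
import random


def flip_ccn(true_label, transition, rng):
    """Class-conditional noise: the noisy label depends only on the true label.

    transition[i][j] is the probability of observing label j when the
    true label is i. The input features are never consulted.
    """
    probs = transition[true_label]
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def flip_idn(true_label, features, num_classes, rng):
    """Toy instance-dependent noise (hypothetical rule, for illustration only).

    The flip rate is the mean feature value clipped to [0, 1], so two
    instances of the same class can have different noise rates.
    """
    flip_rate = min(max(sum(features) / len(features), 0.0), 1.0)
    if rng.random() < flip_rate:
        # Flip uniformly to one of the other classes.
        others = [c for c in range(num_classes) if c != true_label]
        return rng.choice(others)
    return true_label


if __name__ == "__main__":
    rng = random.Random(0)
    T = [[0.8, 0.2], [0.3, 0.7]]  # assumed 2-class transition matrix
    print("CCN sample:", flip_ccn(0, T, rng))
    print("IDN sample:", flip_idn(0, [0.1, 0.9, 0.5], 2, rng))
```

In this sketch, `flip_ccn` ignores the features entirely, which is exactly the independence that the CCN assumption encodes, while `flip_idn` can assign a different noise rate to every instance.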
