Beyond accuracy: quantifying trial-by-trial behaviour of CNNs and humans by measuring error consistency

Jun 30, 2020

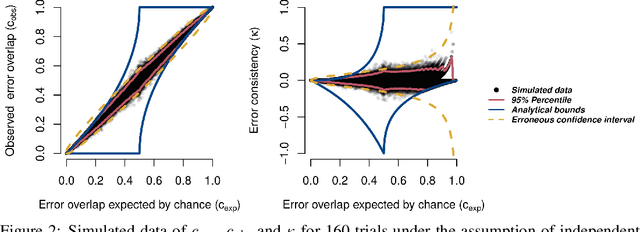

A central problem in cognitive science and behavioural neuroscience, as well as in machine learning and artificial intelligence research, is to ascertain whether two or more decision makers (e.g. brains or algorithms) use the same strategy. Accuracy alone cannot distinguish between strategies: two systems may achieve similar accuracy with very different strategies. The need to differentiate beyond accuracy is particularly pressing if two systems are at or near ceiling performance, like Convolutional Neural Networks (CNNs) and humans on visual object recognition. Here we introduce trial-by-trial error consistency, a quantitative analysis for measuring whether two decision-making systems systematically make errors on the same inputs. Making consistent errors on a trial-by-trial basis is a necessary condition if we want to ascertain similar processing strategies between decision makers. Our analysis can be used to compare algorithms with algorithms, humans with humans, and algorithms with humans. When applying error consistency to visual object recognition we obtain three main findings: (1) irrespective of architecture, CNNs are remarkably consistent with one another; (2) the consistency between CNNs and human observers, however, is little above what can be expected by chance alone, indicating that humans and CNNs are likely implementing very different strategies; (3) CORnet-S, a recurrent model termed the "current best model of the primate ventral visual stream", fails to capture essential characteristics of human behavioural data and behaves essentially like a ResNet-50 in our analysis, that is, just like a standard feedforward network. Taken together, error consistency analysis suggests that the strategies used by human and machine vision are still very different, but we envision our general-purpose error consistency analysis to serve as a fruitful tool for quantifying future progress.
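To make the idea of consistency "above what can be expected by chance" concrete, the following is a minimal sketch of a chance-corrected agreement score in the form of Cohen's kappa, computed over per-trial correctness. The abstract does not give a formula, so the use of kappa here, the function name `error_consistency`, and the boolean input format are assumptions made for illustration. They are not taken from the text above.

```python
import numpy as np


def error_consistency(correct_a, correct_b):
    """Chance-corrected trial-by-trial agreement (Cohen's kappa).

    correct_a, correct_b: equal-length sequences of booleans (or 0/1),
    one entry per trial, True where that decision maker was correct.
    The name and input format are hypothetical choices for this sketch.
    """
    a = np.asarray(correct_a, dtype=bool)
    b = np.asarray(correct_b, dtype=bool)
    if a.shape != b.shape or a.size == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    # Accuracy of each decision maker.
    p_a = a.mean()
    p_b = b.mean()

    # Observed consistency: fraction of trials on which both are
    # correct or both are wrong.
    c_obs = np.mean(a == b)

    # Consistency expected by chance for two independent observers
    # with accuracies p_a and p_b.
    c_exp = p_a * p_b + (1.0 - p_a) * (1.0 - p_b)

    # If both observers are always correct (or always wrong), chance
    # agreement is already 1 and kappa is undefined.
    if np.isclose(c_exp, 1.0):
        return float("nan")

    return float((c_obs - c_exp) / (1.0 - c_exp))


if __name__ == "__main__":
    same = [1, 0, 1, 0]
    flipped = [0, 1, 0, 1]
    unrelated = [1, 1, 0, 0]
    print(error_consistency(same, same))       # identical error patterns
    print(error_consistency(same, flipped))    # errors on opposite trials
    print(error_consistency(same, unrelated))  # agreement at chance level
```

Under this definition a score of 1 means the two decision makers err on exactly the same trials, 0 means their overlap is what independent observers with the same accuracies would produce, and negative values mean they systematically err on different trials. The correction matters near ceiling: two systems that are each correct on almost every trial agree on most trials by chance alone, so raw overlap would overstate their similarity.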