Between steps: Intermediate relaxations between big-M and convex hull formulations

Paper and Code

Jan 29, 2021

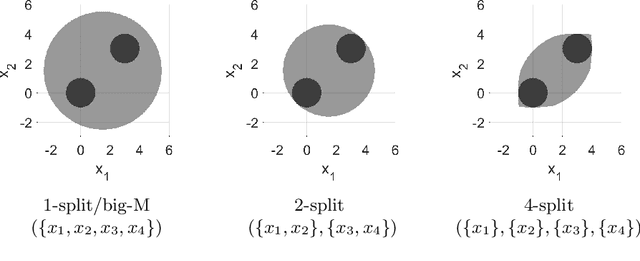

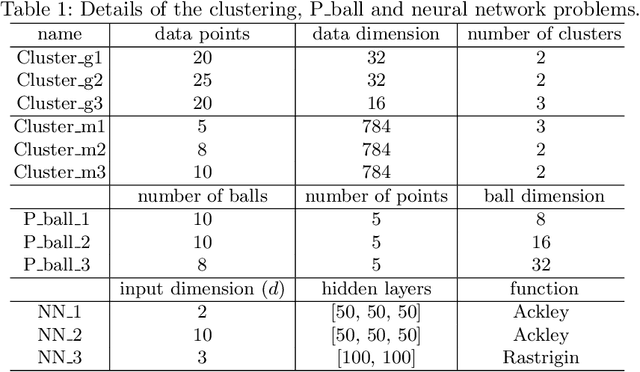

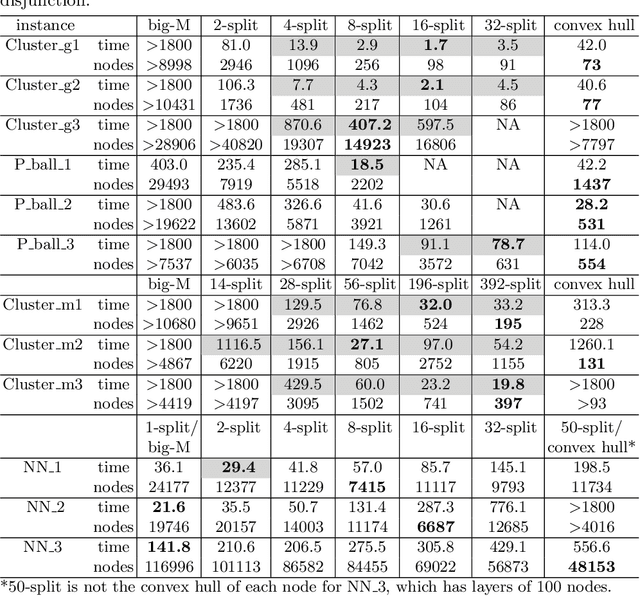

This work develops a class of relaxations in between the big-M and convex hull formulations of disjunctions, drawing advantages from both. The proposed "P-split" formulations split convex, additively separable constraints into P partitions and form the convex hull of the partitioned disjuncts. The parameter P controls the trade-off between model size and relaxation strength. We examine the novel formulations and prove that, under certain assumptions, the relaxations form a hierarchy that starts from a big-M equivalent and converges to the convex hull. We computationally compare the proposed formulations to big-M and convex hull formulations on a test set that includes K-means clustering, P_ball problems, and ReLU neural networks. The computational results show that the intermediate P-split formulations can form strong outer approximations of the convex hull with fewer variables and constraints than the extended convex hull formulations, giving significant computational advantages over both the big-M and convex hull formulations.
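
The splitting step described in the abstract can be sketched in symbols. This is a rough illustration, not the paper's exact statement. The notation is assumed here for illustration only: disjunct index l in a set D, convex terms h_{i,l}, right-hand sides b_l, big-M constants M_l, binary indicators λ_l, a partition I_1, ..., I_P of the variable indices {1, ..., n}, and auxiliary variables α_s^l.

```latex
% Illustrative notation (assumed, not quoted from the page):
% l indexes disjuncts, h_{i,l} are convex terms, I_1..I_P partition {1..n}.
\begin{align*}
&\text{Disjunction:} &&
  \bigvee_{l \in D} \Big[ \sum_{i=1}^{n} h_{i,l}(x_i) \le b_l \Big] \\
&\text{Big-M:} &&
  \sum_{i=1}^{n} h_{i,l}(x_i) \le b_l + M_l (1 - \lambda_l) \;\; \forall l \in D,
  \qquad \sum_{l \in D} \lambda_l = 1, \quad \lambda_l \in \{0, 1\} \\
&\text{Split into } P \text{ parts:} &&
  \sum_{i \in I_s} h_{i,l}(x_i) \le \alpha_s^{l} \;\; (s = 1, \dots, P),
  \qquad \bigvee_{l \in D} \Big[ \sum_{s=1}^{P} \alpha_s^{l} \le b_l \Big]
\end{align*}
```

After splitting, each disjunct is a single linear constraint in the auxiliary variables, so its convex hull can be written with a modest number of extra variables, provided the α_s^l are bounded. In this sketch the number of auxiliary variables grows with P, which is the model-size side of the trade-off the abstract mentions. The abstract states that, under certain assumptions, the resulting relaxations range from a big-M equivalent to the convex hull as P increases.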
