Benchmarking Long-tail Generalization with Likelihood Splits

Paper and Code

Oct 13, 2022

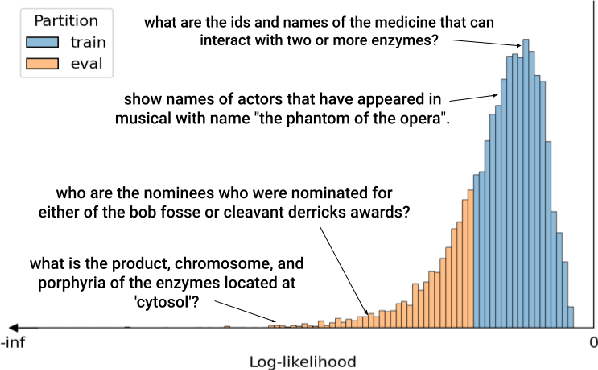

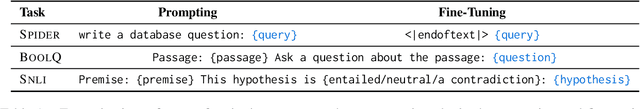

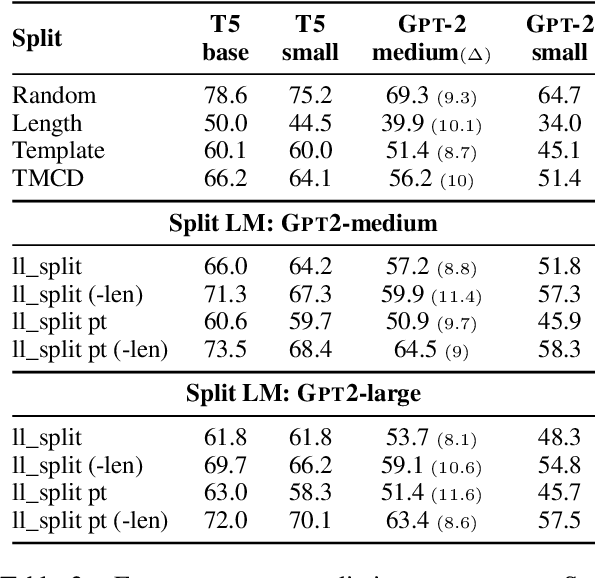

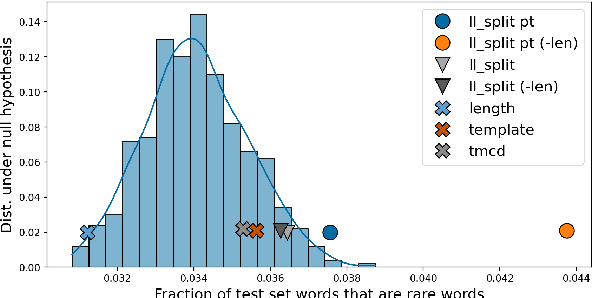

In order to reliably process natural language, NLP systems must generalize to the long tail of rare utterances. We propose a method to create challenging benchmarks that require generalizing to the tail of the distribution by re-splitting existing datasets. We create 'Likelihood splits' where examples that are assigned lower likelihood by a pre-trained language model (LM) are placed in the test set, and more likely examples are in the training set. This simple approach can be customized to construct meaningful train-test splits for a wide range of tasks. Likelihood splits are more challenging than random splits: relative error rates of state-of-the-art models on our splits increase by 59% for semantic parsing on Spider, 77% for natural language inference on SNLI, and 38% for yes/no question answering on BoolQ compared with the corresponding random splits. Moreover, Likelihood splits create fairer benchmarks than adversarial filtering; when the LM used to create the splits is used as the task model, our splits do not adversely penalize the LM.
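The splitting step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `likelihood_split`, `make_unigram_scorer`, and the `test_fraction` parameter are hypothetical names, and the add-one-smoothed unigram model is only a toy stand-in for the pre-trained LM the abstract refers to. Any function that maps an example to a log-likelihood could be passed in its place.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence, Tuple


def likelihood_split(
    examples: Sequence[str],
    score: Callable[[str], float],
    test_fraction: float = 0.2,
) -> Tuple[List[str], List[str]]:
    """Put the least likely examples in the test set, the rest in train.

    `score` should return a log-likelihood (higher means more likely).
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    # Sort ascending so the lowest-likelihood examples come first.
    ranked = sorted(examples, key=score)
    n_test = max(1, round(len(ranked) * test_fraction))
    return ranked[n_test:], ranked[:n_test]


def make_unigram_scorer(corpus: Sequence[str]) -> Callable[[str], float]:
    """Toy stand-in for a pre-trained LM (an assumption for this sketch):
    an add-one-smoothed unigram model over whitespace tokens."""
    counts = Counter(tok for text in corpus for tok in text.lower().split())
    total = sum(counts.values())
    vocab = len(counts)

    def score(text: str) -> float:
        # Summed log-probability of the tokens; unseen tokens get count 0.
        return sum(
            math.log((counts[tok] + 1) / (total + vocab + 1))
            for tok in text.lower().split()
        )

    return score


# Illustrative data only; not drawn from Spider, SNLI, or BoolQ.
data = ["the cat sat", "the dog sat", "the cat ran", "a zebra yodels"]
scorer = make_unigram_scorer(data)
train, test = likelihood_split(data, scorer, test_fraction=0.25)
```

Here the sentence made entirely of rare words lands in `test`, while the three sentences built from frequent words stay in `train`. Note that a summed log-probability tends to rank longer texts as less likely, so a practical scorer may need to account for length; how to do so is a design choice this sketch leaves open.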
