Benchmarking Differentially Private Residual Networks for Medical Imagery

Jun 28, 2020

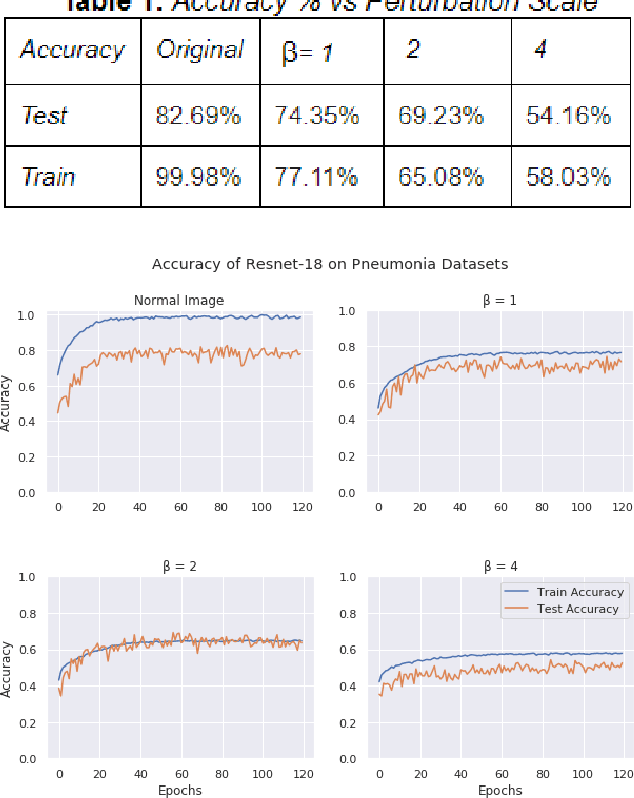

Hospitals and other medical institutions often hold vast amounts of medical data that could provide significant value for research. However, this data is sensitive and, because of privacy concerns, is not readily available in a research setting. In this paper, we measure the performance of a deep neural network on differentially private image datasets pertaining to pneumonia. We analyze the trade-off between the model's accuracy and the scale of perturbation applied to the images. Knowing how accuracy varies across perturbation levels in differentially private medical images can be a useful measure for hospitals. Furthermore, we seek to measure the usefulness of local differential privacy for such medical imagery tasks and to see whether there is room for improvement.
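
To make the notion of a "scale of perturbation" concrete, the following is a minimal sketch of one common way to perturb an image locally: adding Laplace noise to each pixel, with noise scale `sensitivity / epsilon`. The abstract does not state which mechanism the paper uses, so the Laplace mechanism, the function names (`laplace_noise`, `perturb_image`, `mean_abs_distortion`), the `[0, 1]` pixel range, and the default `sensitivity=1.0` are all assumptions made for illustration. The sketch also treats each pixel independently and does not account for the privacy budget of a whole image.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def perturb_image(pixels, epsilon, sensitivity=1.0, seed=None):
    """Add per-pixel Laplace noise to a 2D list of pixels assumed to lie in [0, 1].

    Smaller epsilon means a larger noise scale (stronger perturbation).
    Results are clipped back to [0, 1]; clipping is post-processing only.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    scale = sensitivity / epsilon  # assumed per-pixel noise scale
    return [
        [min(1.0, max(0.0, p + laplace_noise(scale, rng))) for p in row]
        for row in pixels
    ]


def mean_abs_distortion(original, perturbed):
    """Average absolute per-pixel difference between two same-shaped images."""
    total = sum(
        abs(a - b)
        for row_a, row_b in zip(original, perturbed)
        for a, b in zip(row_a, row_b)
    )
    count = sum(len(row) for row in original)
    return total / count


if __name__ == "__main__":
    # Hypothetical 8x8 mid-gray image standing in for a real scan.
    image = [[0.5] * 8 for _ in range(8)]
    for eps in (0.1, 1.0, 10.0):
        noisy = perturb_image(image, epsilon=eps, seed=0)
        print(f"epsilon={eps}: distortion={mean_abs_distortion(image, noisy):.3f}")
```

Sweeping `epsilon` in this way and retraining or re-evaluating a classifier on each perturbed dataset is one way to trace the accuracy-versus-perturbation trade-off the abstract describes.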
