Benchmarking Deep Learning Classifiers: Beyond Accuracy

Mar 02, 2021

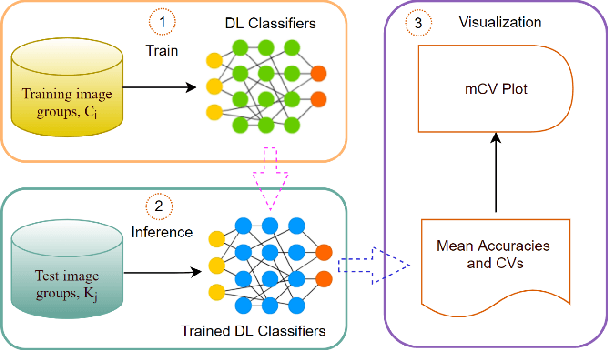

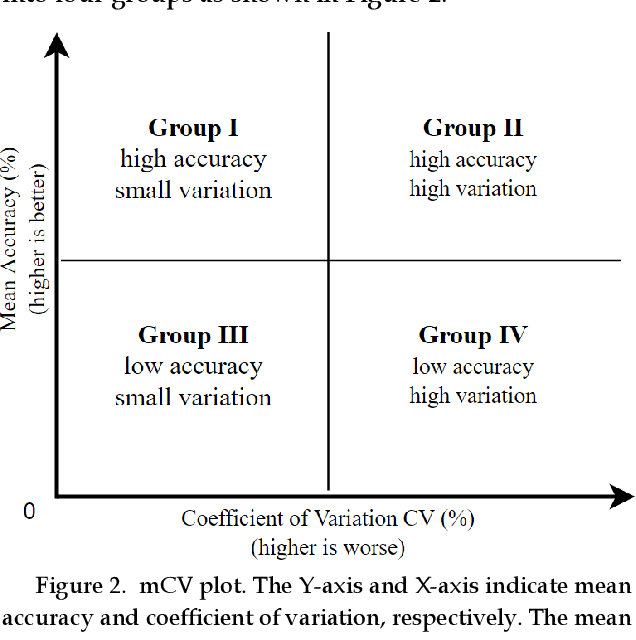

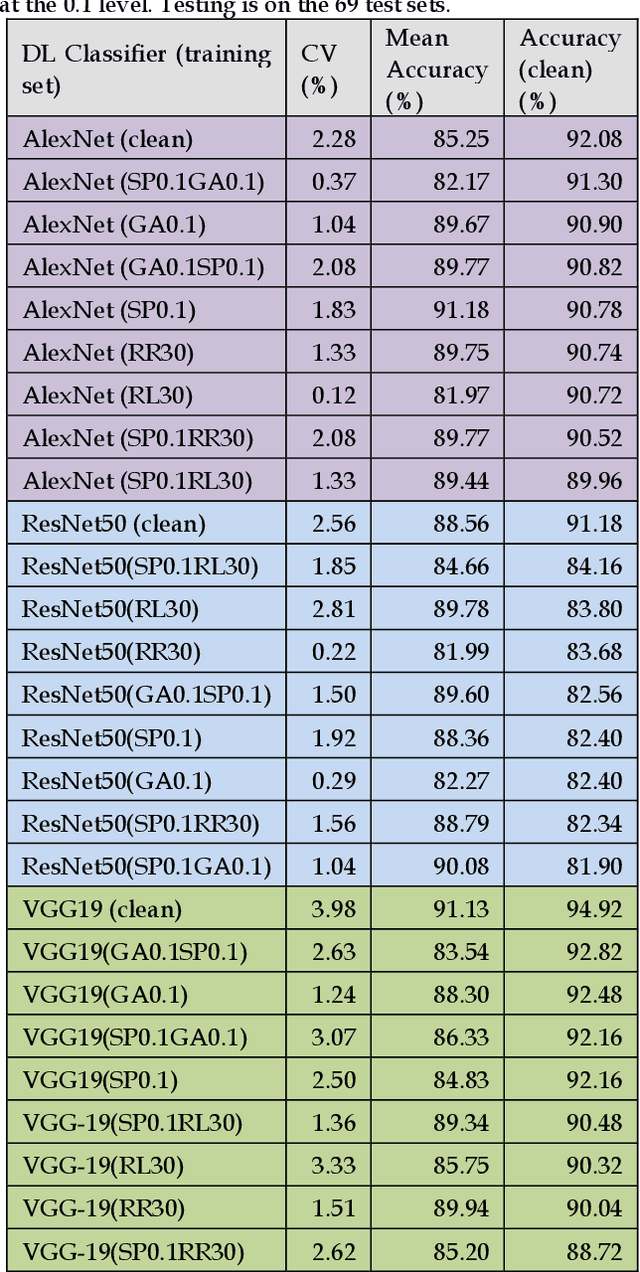

Previous research evaluating deep learning (DL) classifiers has often relied on top-1/top-5 accuracy. However, the accuracy of DL classifiers is unstable: it often changes significantly when the classifiers are retested on imperfect or adversarial images. This paper adds to the small but fundamental body of work on benchmarking the robustness of DL classifiers on imperfect images by proposing a two-dimensional metric consisting of mean accuracy and coefficient of variation. Spearman's rank correlation coefficient and Pearson's correlation coefficient are used, and their independence is evaluated. We present a statistical plot, which we call mCV, that aims to help visualize how robust the performance of DL classifiers is across varying amounts of imperfection in the tested images. Finally, we demonstrate that defective images corrupted by two-factor corruption could be used to improve the robustness of DL classifiers. All source code and related image sets are shared on a website (http://www.animpala.com) to support future research projects.
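The two-dimensional metric described above can be illustrated with a minimal sketch. It assumes that the coefficient of variation is the standard deviation of a classifier's accuracies across corruption levels divided by their mean, and that the population standard deviation is used; the abstract does not state the paper's exact definition. The function name `robustness_metric` and the accuracy values are hypothetical and do not come from the paper.

```python
from statistics import mean, pstdev


def robustness_metric(accuracies):
    """Return (mean accuracy, coefficient of variation) for one classifier.

    `accuracies` holds one accuracy value per corruption level.
    Assumption: CV = population standard deviation / mean.
    """
    mu = mean(accuracies)
    if mu == 0:
        raise ValueError("mean accuracy is zero; CV is undefined")
    return mu, pstdev(accuracies) / mu


# Hypothetical top-1 accuracies on image sets with increasing corruption.
stable = [0.80, 0.78, 0.76, 0.74]
unstable = [0.90, 0.80, 0.60, 0.40]

stable_mean, stable_cv = robustness_metric(stable)
unstable_mean, unstable_cv = robustness_metric(unstable)

print(f"stable:   mean={stable_mean:.3f}  CV={stable_cv:.3f}")
print(f"unstable: mean={unstable_mean:.3f}  CV={unstable_cv:.3f}")
```

Under these assumptions, a lower coefficient of variation at a comparable mean accuracy indicates that a classifier's performance changes less as image quality degrades. This is the kind of comparison the two dimensions are meant to support.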
