Behavior Planning of Autonomous Cars with Social Perception

Paper and Code

May 02, 2019

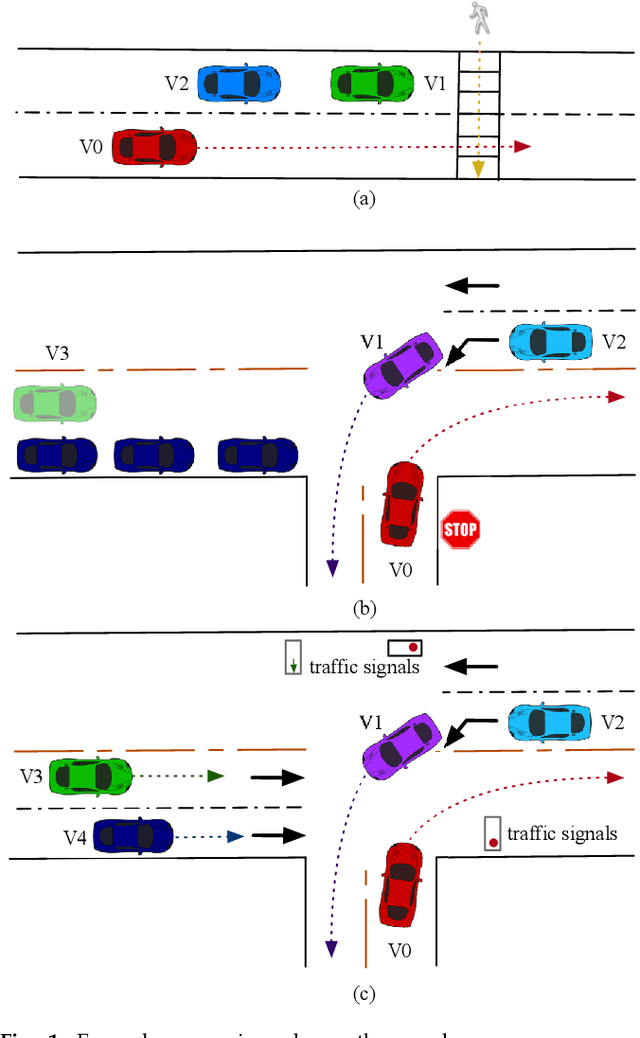

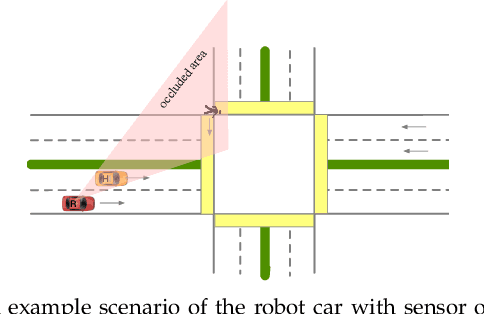

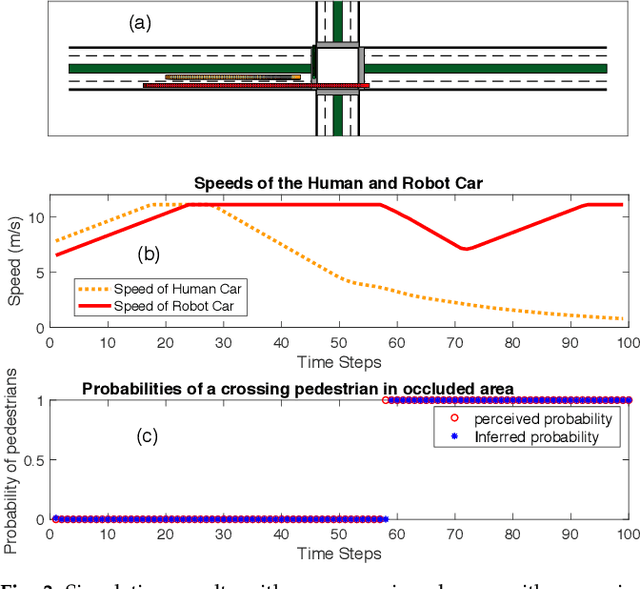

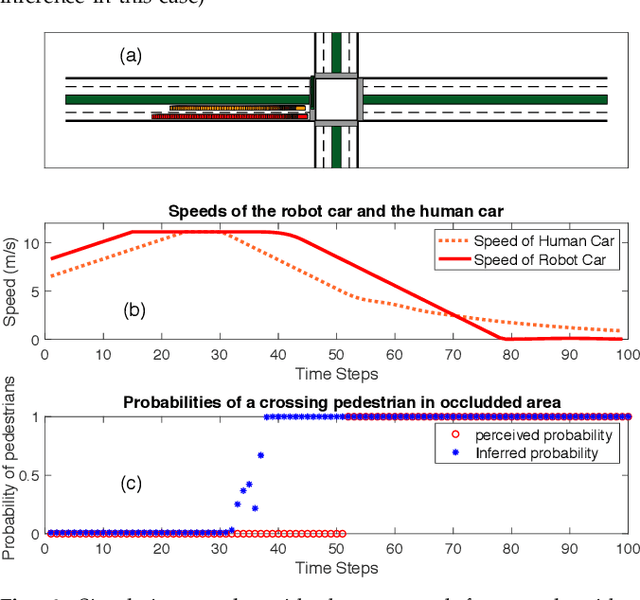

Autonomous cars have to navigate in dynamic environment which can be full of uncertainties. The uncertainties can come either from sensor limitations such as occlusions and limited sensor range, or from probabilistic prediction of other road participants, or from unknown social behavior in a new area. To safely and efficiently drive in the presence of these uncertainties, the decision-making and planning modules of autonomous cars should intelligently utilize all available information and appropriately tackle the uncertainties so that proper driving strategies can be generated. In this paper, we propose a social perception scheme which treats all road participants as distributed sensors in a sensor network. By observing the individual behaviors as well as the group behaviors, uncertainties of the three types can be updated uniformly in a belief space. The updated beliefs from the social perception are then explicitly incorporated into a probabilistic planning framework based on Model Predictive Control (MPC). The cost function of the MPC is learned via inverse reinforcement learning (IRL). Such an integrated probabilistic planning module with socially enhanced perception enables the autonomous vehicles to generate behaviors which are defensive but not overly conservative, and socially compatible. The effectiveness of the proposed framework is verified in simulation on an representative scenario with sensor occlusions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge