Beam Search for Feature Selection


Mar 08, 2022

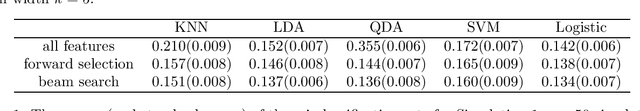

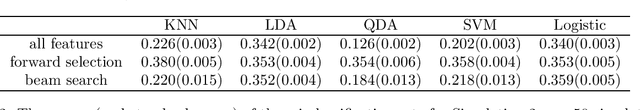

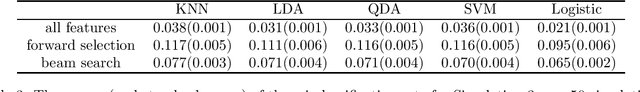

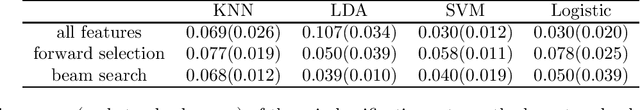

In this paper, we present and prove consistency results on the performance of classification models that use a subset of features. In addition, we propose using beam search for feature selection, which can be viewed as a generalization of forward selection. We apply beam search to both simulated and real-world data, and evaluate and compare the performance of different classification models on different sets of features. The results demonstrate that beam search can outperform forward selection, especially when the features are correlated, so that they have more discriminative power when considered jointly than individually. Moreover, in some cases classification models achieve comparable performance using only ten features selected by beam search instead of hundreds of original features.
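To make the relationship between beam search and forward selection concrete, here is a minimal Python sketch. It is not the authors' implementation: the function name `beam_search_select`, its parameters, and the `toy_score` function are assumptions made for illustration. In practice the score would be something like the cross-validated accuracy of a classifier trained on the candidate subset. With a beam width of 1 the procedure reduces to greedy forward selection.

```python
from typing import Callable, FrozenSet, List, Tuple


def beam_search_select(
    n_features: int,
    score: Callable[[FrozenSet[int]], float],
    k: int,
    beam_width: int,
) -> Tuple[FrozenSet[int], float]:
    """Return the best size-k feature subset found by beam search, with its score."""
    if not 1 <= k <= n_features or beam_width < 1:
        raise ValueError("need 1 <= k <= n_features and beam_width >= 1")
    beam: List[FrozenSet[int]] = [frozenset()]
    for _ in range(k):
        # Expand every subset in the beam by one feature it does not yet contain.
        candidates = {s | {j} for s in beam for j in range(n_features) if j not in s}
        # Rank by score (highest first); break ties by feature indices for determinism.
        ranked = sorted(candidates, key=lambda s: (-score(s), sorted(s)))
        # Keep only the beam_width best subsets for the next round.
        beam = ranked[:beam_width]
    best = beam[0]
    return best, score(best)


def toy_score(subset: FrozenSet[int]) -> float:
    """Hypothetical score: features 0 and 1 are weak alone but strong together."""
    individual = {0: 0.55, 1: 0.55, 2: 0.70, 3: 0.60}
    if {0, 1} <= subset:
        return 0.95
    return max((individual[j] for j in subset), default=0.5)


# Beam width 1 is forward selection: it commits to feature 2 and misses the pair {0, 1}.
greedy_subset, greedy_score = beam_search_select(4, toy_score, k=2, beam_width=1)
# A wider beam keeps weaker single features alive long enough to find the pair.
beam_subset, beam_score = beam_search_select(4, toy_score, k=2, beam_width=4)
print(sorted(greedy_subset), greedy_score)
print(sorted(beam_subset), beam_score)
```

The toy score is built so that the greedy path gets stuck on the individually strongest feature, which mirrors the case described above where features are more discriminative jointly than individually. The cost of the wider beam is more score evaluations per step, roughly proportional to the beam width.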
