Bayesian Convolutional Neural Networks

Paper and Code

Sep 10, 2018

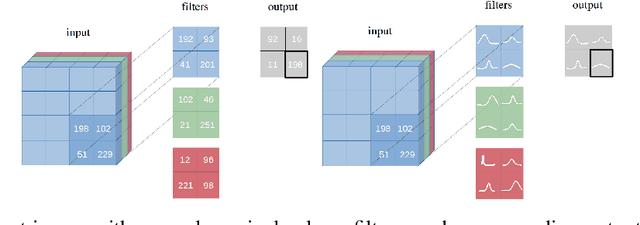

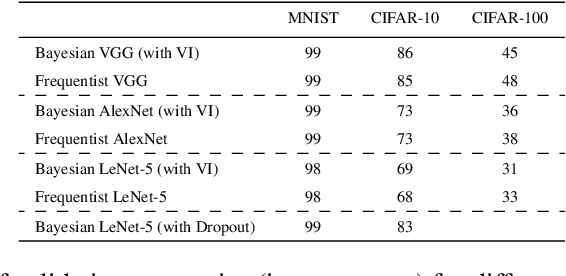

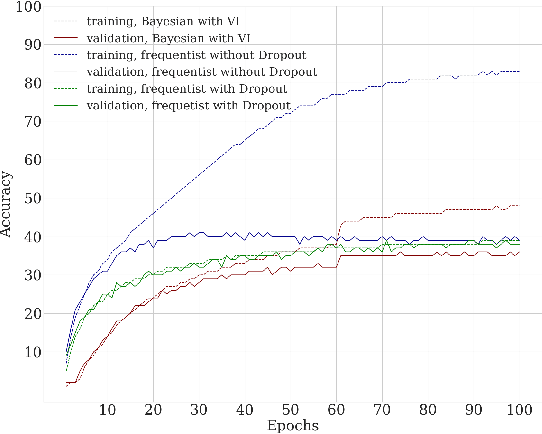

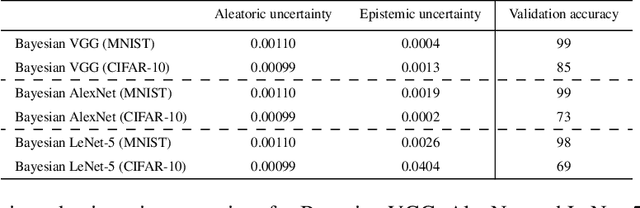

We introduce Bayesian Convolutional Neural Networks (BayesCNNs), a variant of Convolutional Neural Networks (CNNs) built upon Bayes by Backprop. We demonstrate how this novel and reliable variational inference method can serve as a fundamental construct for various network architectures. On multiple datasets in supervised learning settings (MNIST, CIFAR-10, CIFAR-100, and STL-10), our proposed variational inference method achieves performance equivalent to frequentist inference in identical architectures, while naturally incorporating a measure of uncertainty and regularisation. Bayes by Backprop has previously been implemented successfully in feedforward and recurrent neural networks, but not in convolutional ones. This work extends Bayesian neural networks so that they now encompass all three of these network architectures.
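To make the idea concrete, here is a minimal sketch of how Bayes by Backprop might be applied to a single convolutional kernel. It is not the paper's implementation. It assumes a factorised Gaussian posterior over the kernel weights, parameterised by `mu` and `rho` with standard deviation `softplus(rho)`, and a zero-mean Gaussian prior, so the KL regulariser has a closed form. The function names (`sample_kernel`, `kl_to_prior`, `conv1d`, `predict`) and the 1D setting are illustrative choices, and the training loop that would update `mu` and `rho` by gradient descent is omitted.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)); keeps the standard deviation positive.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sample_kernel(mu, rho, rng):
    # Reparameterisation: w = mu + softplus(rho) * eps, with eps ~ N(0, 1).
    return [m + softplus(r) * rng.gauss(0.0, 1.0) for m, r in zip(mu, rho)]


def kl_to_prior(mu, rho, prior_sigma=1.0):
    # Closed-form KL(N(mu, sigma^2) || N(0, prior_sigma^2)), summed over weights.
    # Assumption: a plain Gaussian prior, chosen here for simplicity.
    total = 0.0
    for m, r in zip(mu, rho):
        s = softplus(r)
        total += math.log(prior_sigma / s) + (s * s + m * m) / (2 * prior_sigma ** 2) - 0.5
    return total


def conv1d(signal, kernel):
    # "Valid" cross-correlation, the operation CNN layers usually call convolution.
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def predict(signal, mu, rho, n_samples=100, seed=0):
    # Monte Carlo prediction: run the layer with several sampled kernels and
    # report the per-position mean and variance of the outputs.
    rng = random.Random(seed)
    outs = [conv1d(signal, sample_kernel(mu, rho, rng)) for _ in range(n_samples)]
    n = len(outs[0])
    mean = [sum(o[i] for o in outs) / n_samples for i in range(n)]
    var = [sum((o[i] - mean[i]) ** 2 for o in outs) / n_samples for i in range(n)]
    return mean, var
```

In this sketch, the variance returned by `predict` plays the role of the uncertainty measure mentioned above, and `kl_to_prior` is the term that would act as a regulariser when added to the data loss during training.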
