AX-DBN: An Approximate Computing Framework for the Design of Low-Power Discriminative Deep Belief Networks


Mar 26, 2019

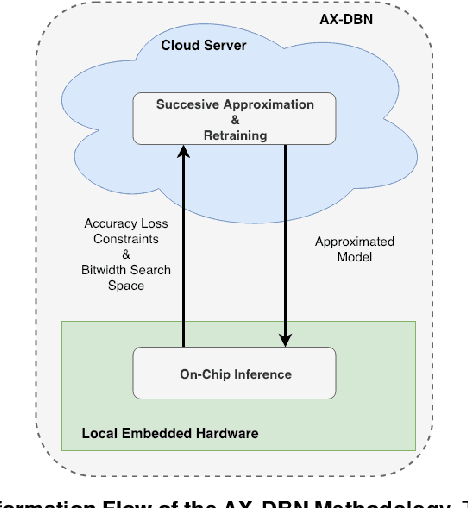

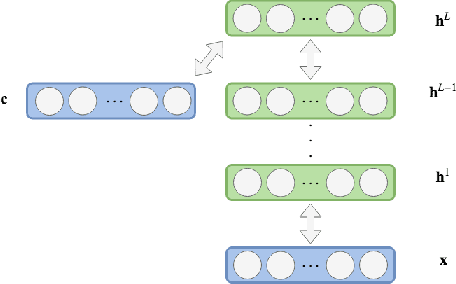

The power budget for embedded hardware implementations of Deep Learning algorithms can be extremely tight. To address implementation challenges in such domains, new design paradigms, like Approximate Computing, have drawn significant attention. Approximate Computing exploits the innate error-resilience of Deep Learning algorithms, a property that makes them amenable to deployment on low-power computing platforms. This paper describes an Approximate Computing design methodology, AX-DBN, for an architecture belonging to the class of stochastic Deep Learning algorithms known as Deep Belief Networks (DBNs). Specifically, we consider procedures for efficiently implementing the Discriminative Deep Belief Network (DDBN), a stochastic neural network that is used for classification tasks, extending Approximate Computing from the analysis of deterministic neural networks to stochastic ones. To optimize the DDBN for hardware implementations, we explore the use of: (a) limited precision of neurons and functional approximations of activation functions; (b) criticality analysis to identify nodes in the network that can operate at reduced precision while allowing the network to maintain target accuracy levels; and (c) a greedy search methodology with incremental retraining to determine the optimal reduction in precision for all neurons to maximize power savings. Using the AX-DBN methodology proposed in this paper, we present experimental results across several network architectures that show significant power savings under a user-specified accuracy loss constraint with respect to ideal full-precision implementations.
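To make items (a) and (c) concrete, the following is a minimal Python sketch, not the paper's implementation. It assumes a simple signed fixed-point format, a common piecewise-linear stand-in for the sigmoid, and a greedy loop that lowers one neuron's bit width at a time while a caller-supplied accuracy function stays within a loss budget. The names `quantize`, `pwl_sigmoid`, `greedy_bit_reduction`, and `toy_evaluate` are hypothetical, and both the criticality analysis and the incremental retraining described in the abstract are omitted.

```python
import numpy as np


def quantize(x, frac_bits, int_bits=1):
    """Round x onto a signed fixed-point grid (assumed format, not from the paper)."""
    scale = 2.0 ** frac_bits
    limit = 2.0 ** int_bits
    q = np.round(np.asarray(x, dtype=float) * scale) / scale
    # Saturate to the representable range [-limit, limit - 1/scale].
    return np.clip(q, -limit, limit - 1.0 / scale)


def pwl_sigmoid(x):
    """Piecewise-linear approximation of the logistic sigmoid: clip(0.25*x + 0.5, 0, 1)."""
    return np.clip(0.25 * np.asarray(x, dtype=float) + 0.5, 0.0, 1.0)


def greedy_bit_reduction(evaluate, n_neurons, start_bits=8, min_bits=1, max_loss=0.01):
    """Greedily lower per-neuron bit widths while accuracy loss stays within max_loss.

    `evaluate` is a caller-supplied function mapping a list of bit widths to an
    accuracy in [0, 1]. No retraining is performed in this sketch.
    """
    bits = [start_bits] * n_neurons
    baseline = evaluate(bits)
    changed = True
    while changed:
        changed = False
        for i in range(n_neurons):
            if bits[i] <= min_bits:
                continue
            bits[i] -= 1  # tentatively drop one bit from neuron i
            if baseline - evaluate(bits) <= max_loss:
                changed = True  # keep the reduction
            else:
                bits[i] += 1  # revert: the accuracy loss exceeds the budget
    return bits


def toy_evaluate(bits, needed=(3, 5, 2)):
    """Toy accuracy model: each bit missing below `needed` costs 0.1 accuracy."""
    missing = sum(max(0, n - b) for n, b in zip(needed, bits))
    return 1.0 - 0.1 * missing


if __name__ == "__main__":
    print(quantize([0.3, -0.7], frac_bits=2))
    print(pwl_sigmoid([-10.0, 0.0, 10.0]))
    print(greedy_bit_reduction(toy_evaluate, n_neurons=3))
```

In a real flow, `evaluate` would run the quantized network on a validation set, and a retraining step would typically sit between a tentative reduction and the accept-or-revert decision.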
