Automatic Synthesis of Diverse Weak Supervision Sources for Behavior Analysis

Paper and Code

Nov 30, 2021

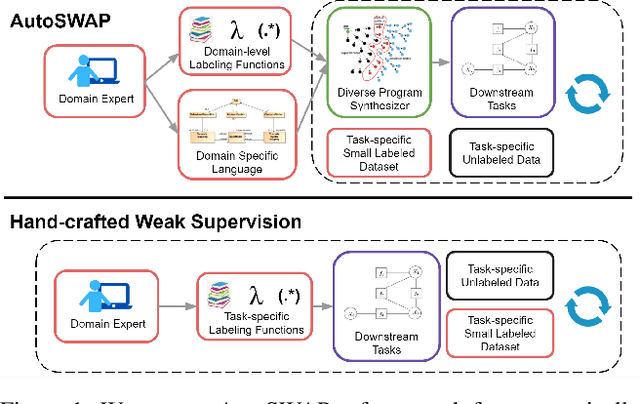

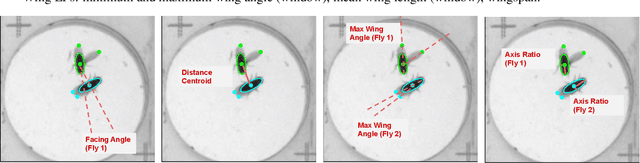

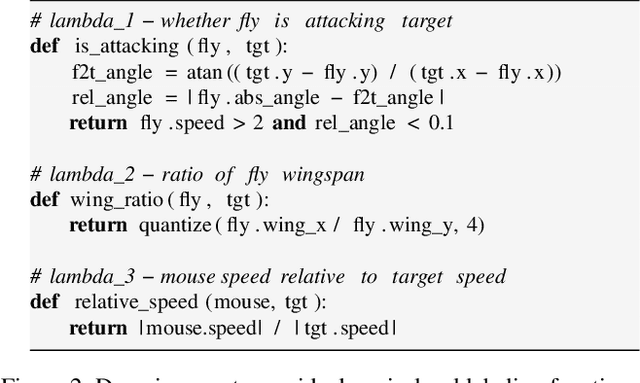

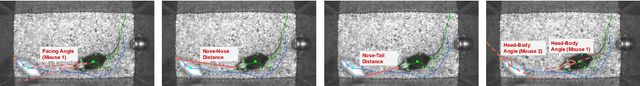

Obtaining annotations for large training sets is expensive, especially in behavior analysis settings where domain knowledge is required for accurate annotations. Weak supervision has been studied to reduce annotation costs by using weak labels from task-level labeling functions to augment ground truth labels. However, domain experts are still needed to hand-craft labeling functions for every studied task. To reduce expert effort, we present AutoSWAP: a framework for automatically synthesizing data-efficient task-level labeling functions. The key to our approach is to efficiently represent expert knowledge in a reusable domain specific language and domain-level labeling functions, with which we use state-of-the-art program synthesis techniques and a small labeled dataset to generate labeling functions. Additionally, we propose a novel structural diversity cost that allows for direct synthesis of diverse sets of labeling functions with minimal overhead, further improving labeling function data efficiency. We evaluate AutoSWAP in three behavior analysis domains and demonstrate that AutoSWAP outperforms existing approaches using only a fraction of the data. Our results suggest that AutoSWAP is an effective way to automatically generate labeling functions that can significantly reduce expert effort for behavior analysis.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge