Automated Synchronization of Driving Data Using Vibration and Steering Events


Mar 01, 2016

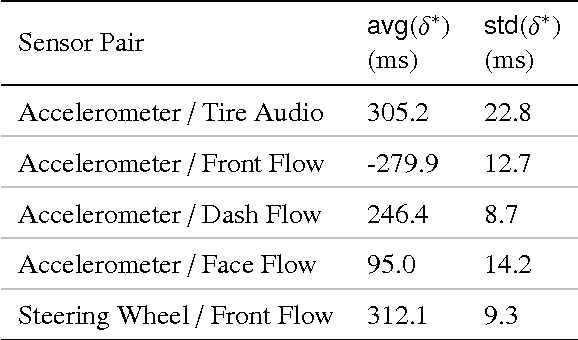

We propose a method for automated synchronization of vehicle sensors, useful for the study of multi-modal driver behavior and for the design of advanced driver assistance systems. Multi-sensor decision fusion relies on synchronized data streams in (1) the offline supervised learning context and (2) the online prediction context. In practice, such data streams are often out of sync due to the absence of a real-time clock, the use of multiple recording devices, or improper thread scheduling and data buffer management. Cross-correlation of accelerometer, telemetry, audio, and dense optical flow from three video sensors is used to achieve an average synchronization error of 13 milliseconds. The insight underlying the effectiveness of the proposed approach is that these sensors capture overlapping aspects of vehicle vibrations and vehicle steering, which allows the cross-correlation function to be used to compute the delay shift in each sensor. Furthermore, we show how the synchronization error decreases as a function of the duration of the data stream.
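The core idea of using cross-correlation to compute a delay shift can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `estimate_delay` and its parameters are hypothetical, and the sketch assumes both streams have already been reduced to one-dimensional signals resampled to a common rate.

```python
import numpy as np


def estimate_delay(reference, delayed, sample_rate_hz):
    """Estimate how far `delayed` lags behind `reference`, in seconds.

    Hypothetical helper for illustration only. Assumes both inputs are
    1-D signals sampled at the same rate (sample_rate_hz).
    A negative result means `delayed` actually leads `reference`.
    """
    ref = np.asarray(reference, dtype=float)
    sig = np.asarray(delayed, dtype=float)

    # Remove the mean so a constant offset does not dominate the correlation.
    ref = ref - ref.mean()
    sig = sig - sig.mean()

    # Full cross-correlation: one value for every possible integer lag.
    xcorr = np.correlate(sig, ref, mode="full")

    # Lag (in samples) corresponding to each entry of xcorr.
    lags = np.arange(-(len(ref) - 1), len(sig))

    # The lag with the highest correlation is the estimated shift.
    best_lag = lags[np.argmax(xcorr)]
    return best_lag / sample_rate_hz
```

Applied to, say, an accelerometer trace and a vibration signal derived from another sensor, the returned value would be subtracted from the second stream's timestamps to align it with the first. The resolution of this sketch is limited to one sample period, so any finer alignment would need interpolation around the correlation peak.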
