AutoEG: Automated Experience Grafting for Off-Policy Deep Reinforcement Learning

Paper and Code

Apr 23, 2020

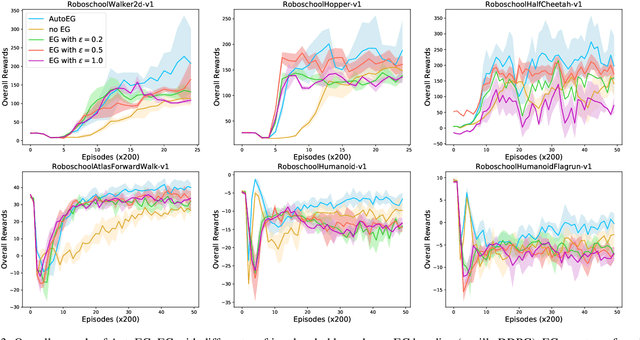

Deep reinforcement learning (RL) algorithms frequently require prohibitive interaction experience to ensure the quality of learned policies. The limitation is partly because the agent cannot learn much from the many low-quality trials in early learning phase, which results in low learning rate. Focusing on addressing this limitation, this paper makes a twofold contribution. First, we develop an algorithm, called Experience Grafting (EG), to enable RL agents to reorganize segments of the few high-quality trajectories from the experience pool to generate many synthetic trajectories while retaining the quality. Second, building on EG, we further develop an AutoEG agent that automatically learns to adjust the grafting-based learning strategy. Results collected from a set of six robotic control environments show that, in comparison to a standard deep RL algorithm (DDPG), AutoEG increases the speed of learning process by at least 30%.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge