AutoDIME: Automatic Design of Interesting Multi-Agent Environments


Mar 04, 2022

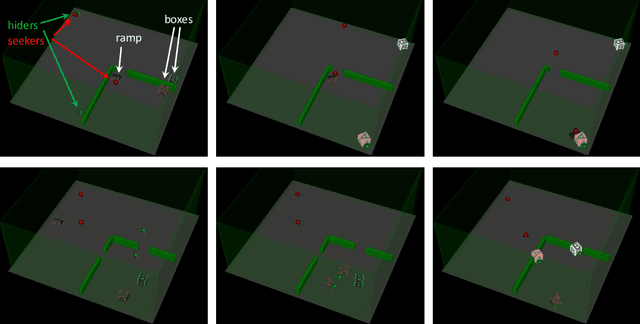

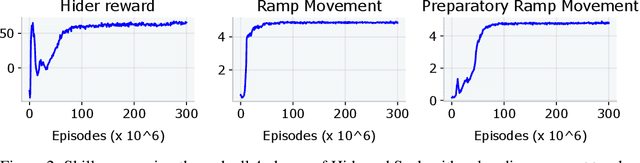

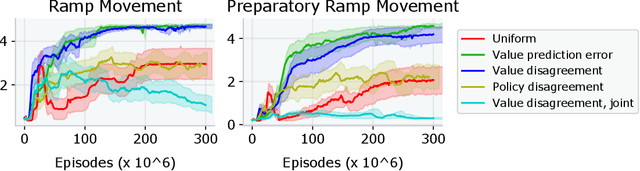

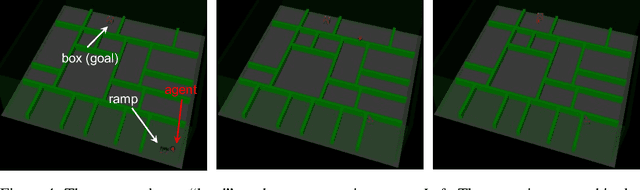

Designing a distribution of environments in which RL agents can learn interesting and useful skills is a challenging and poorly understood task; in multi-agent environments the difficulties are only exacerbated. One approach is to train a second RL agent, called a teacher, that samples environments conducive to the learning of student agents. However, most previous proposals for teacher rewards do not generalize straightforwardly to the multi-agent setting. We examine a set of intrinsic teacher rewards derived from prediction problems that can be applied in multi-agent settings, and evaluate them in MuJoCo tasks such as multi-agent Hide and Seek as well as a diagnostic single-agent maze task. Of the intrinsic rewards considered, we found value disagreement to be the most consistent across tasks, leading to faster and more reliable emergence of advanced skills in both Hide and Seek and the maze task. Another candidate intrinsic reward, value prediction error, also worked well in Hide and Seek but was susceptible to noisy-TV style distractions in stochastic environments. Policy disagreement performed well in the maze task but did not speed up learning in Hide and Seek. Our results suggest that intrinsic teacher rewards, and in particular value disagreement, are a promising approach for automating both single-agent and multi-agent environment design.
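To make two of the teacher rewards named above concrete, here is a minimal sketch of how value disagreement and value prediction error could be computed for one episode. It is an illustration under stated assumptions, not the paper's implementation: it assumes value disagreement is measured as the spread (standard deviation) across an ensemble of student value predictions, averaged over time steps, and that value prediction error is the mean absolute gap between predicted values and observed returns. The function names and array shapes are hypothetical.

```python
import numpy as np


def value_disagreement(ensemble_values):
    """Assumed form of the reward: mean over time steps of the standard
    deviation across ensemble members.

    ensemble_values: array-like of shape (n_members, n_timesteps), one row of
    value predictions per (hypothetical) ensemble member.
    """
    values = np.asarray(ensemble_values, dtype=float)
    if values.ndim != 2 or values.shape[0] < 2:
        raise ValueError("expected shape (n_members >= 2, n_timesteps)")
    per_step_std = values.std(axis=0)  # spread across members at each step
    return float(per_step_std.mean())


def value_prediction_error(predicted_values, observed_returns):
    """Assumed form of the reward: mean absolute difference between a single
    value function's predictions and the returns actually observed.
    """
    predicted = np.asarray(predicted_values, dtype=float)
    observed = np.asarray(observed_returns, dtype=float)
    if predicted.shape != observed.shape:
        raise ValueError("predictions and returns must have the same shape")
    return float(np.abs(predicted - observed).mean())


if __name__ == "__main__":
    # Two ensemble members that agree exactly give zero disagreement.
    print(value_disagreement([[1.0, 1.0], [1.0, 1.0]]))
    # Members that differ by 2 at every step give a disagreement of 1.
    print(value_disagreement([[0.0, 0.0], [2.0, 2.0]]))
    # Prediction error compares predictions against observed returns.
    print(value_prediction_error([1.0, 2.0], [2.0, 4.0]))
```

Under these assumptions, the sketch also hints at why the two rewards can behave differently in stochastic environments: prediction error depends on the observed returns, so irreducible randomness in returns can keep it high, whereas disagreement depends only on how much the ensemble members differ from one another.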
