Attentive Prototypes for Source-free Unsupervised Domain Adaptive 3D Object Detection

Paper and Code

Dec 01, 2021

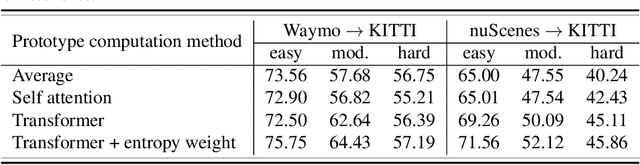

3D object detection networks tend to be biased towards the data they are trained on. Evaluating them on datasets captured in different locations, under different conditions, or with different sensors than the training (source) data degrades performance because of the distribution gap between the source data and the test (target) data. Current methods for domain adaptation either assume access to source data during training, which may not be available due to privacy or memory concerns, or require a sequence of lidar frames as input. We propose a single-frame approach for source-free, unsupervised domain adaptation of lidar-based 3D object detectors that uses class prototypes to mitigate the effect of pseudo-label noise. To address the limitations of traditional feature aggregation methods for prototype computation in the presence of noisy labels, we use a transformer module to identify outlier RoIs that correspond to incorrect, over-confident annotations, and to compute an attentive class prototype. Under an iterative training strategy, the losses associated with noisy pseudo-labels are down-weighted, and the pseudo-labels are thus refined during self-training. To validate the effectiveness of the proposed approach, we examine the domain shift for networks trained on large, label-rich datasets (such as the Waymo Open Dataset and nuScenes) and evaluated on smaller, label-poor datasets (such as KITTI), and vice versa. We demonstrate our approach on two recent object detectors and achieve results that outperform other domain adaptation works.
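The idea of an attentive class prototype with loss down-weighting can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: it substitutes a single parameter-free self-attention step for the transformer module, and the function names `attentive_prototype` and `loss_weights`, the `1/sqrt(d)` scaling, and the cosine-similarity weighting are all assumptions made for illustration.

```python
import numpy as np


def _softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def _l2_normalize(x, axis=-1):
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + 1e-8)


def attentive_prototype(roi_feats):
    """Aggregate RoI features of one class into a prototype.

    roi_feats: (N, D) array of pseudo-labelled RoI features (hypothetical input).
    Returns (prototype of shape (D,), per-RoI weights of shape (N,)).
    Assumption: plain dot-product self-attention stands in for the
    paper's transformer module.
    """
    f = _l2_normalize(roi_feats, axis=1)
    d = f.shape[1]
    attn = _softmax(f @ f.T / np.sqrt(d), axis=1)  # (N, N), rows sum to 1
    # Average attention each RoI receives from all RoIs; outliers that
    # disagree with the majority receive less. The weights sum to 1.
    weights = attn.mean(axis=0)
    prototype = weights @ f  # attention-weighted mean instead of a plain mean
    return prototype, weights


def loss_weights(roi_feats, prototype):
    """Per-RoI loss weight: cosine similarity to the prototype, clipped to [0, 1]."""
    f = _l2_normalize(roi_feats, axis=1)
    p = _l2_normalize(prototype, axis=0)
    return np.clip(f @ p, 0.0, 1.0)


# Toy example: five consistent RoIs and one outlier (index 5).
rng = np.random.default_rng(0)
inliers = np.array([1.0, 0.0, 0.0, 0.0]) + 0.01 * rng.standard_normal((5, 4))
outlier = np.array([[0.0, 1.0, 0.0, 0.0]])
feats = np.vstack([inliers, outlier])

proto, w = attentive_prototype(feats)
lw = loss_weights(feats, proto)
# A self-training step would then scale each RoI's detection loss by lw.
```

In this toy setting the outlier receives the smallest aggregation weight and the smallest loss weight, so a noisy pseudo-label of this kind would contribute less to both the prototype and the training loss.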
