Attention Is Not What You Need: Revisiting Multi-Instance Learning for Whole Slide Image Classification


Aug 18, 2024

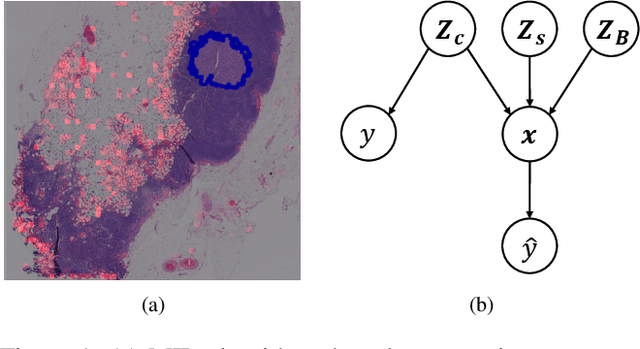

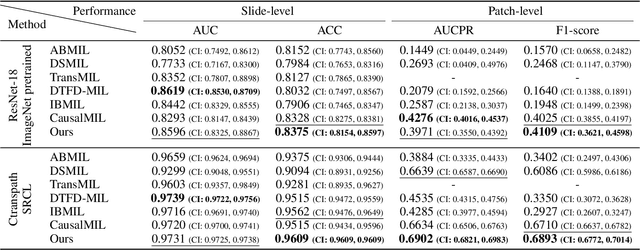

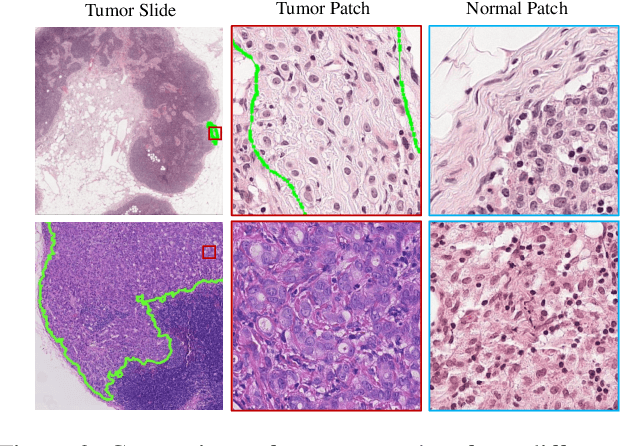

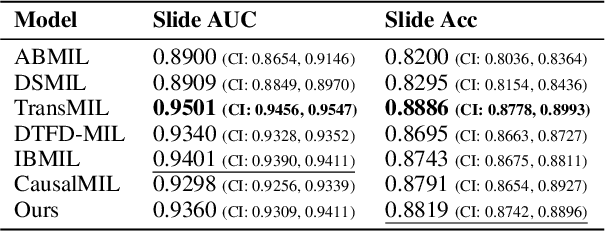

Although attention-based multi-instance learning (MIL) algorithms have achieved impressive performance on slide-level whole slide image (WSI) classification tasks, they are prone to focusing mistakenly on irrelevant patterns such as staining conditions and tissue morphology, which leads to incorrect patch-level predictions and unreliable interpretability. Moreover, these attention-based MIL algorithms tend to focus on salient instances and struggle to recognize hard-to-classify instances. In this paper, we first demonstrate that attention-based WSI classification methods do not adhere to the standard MIL assumptions. Starting from the standard MIL assumptions, we propose FocusMIL, a surprisingly simple yet effective instance-based MIL method for WSI classification built on max-pooling and forward amortized variational inference. We argue that combining the standard MIL assumption with variational inference encourages the model to focus on tumour morphology instead of spurious correlations. Our experimental evaluations show that FocusMIL significantly outperforms the baselines in patch-level classification tasks on the Camelyon16 and TCGA-NSCLC benchmarks. Visualization results show that our method also achieves better classification boundaries for identifying hard instances and mitigates the effect of spurious correlations between bags and labels.
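To make the standard MIL assumption concrete, the following is a minimal sketch of max-pooling over instance predictions: a bag (slide) is treated as positive if at least one instance (patch) is positive, so the bag score is the maximum instance score. This is an illustration only and not the FocusMIL implementation; the variational inference component is not shown, and the function names and example logits are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def bag_prediction(instance_logits):
    """Max-pool instance probabilities into a single bag probability.

    Under the standard MIL assumption a bag is positive if and only if
    at least one of its instances is positive, so the bag score is the
    score of the most confident instance.

    Returns (bag_probability, index_of_top_instance, instance_probabilities).
    """
    if not instance_logits:
        raise ValueError("a bag must contain at least one instance")
    probs = [sigmoid(z) for z in instance_logits]
    # Index of the instance that determines the bag-level prediction.
    top = max(range(len(probs)), key=probs.__getitem__)
    return probs[top], top, probs


# Hypothetical patch logits for one slide (assumed values, not real data).
bag_prob, top_idx, patch_probs = bag_prediction([-3.0, 0.5, 4.0])
```

Because the bag score is tied to a single instance, the same instance probabilities can be read directly as patch-level predictions, which is the setting in which the abstract reports its comparisons.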
