Attention-Based Planning with Active Perception


Nov 30, 2020

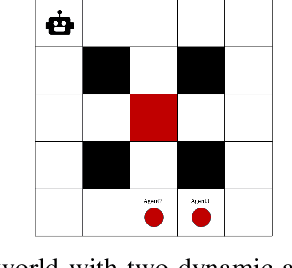

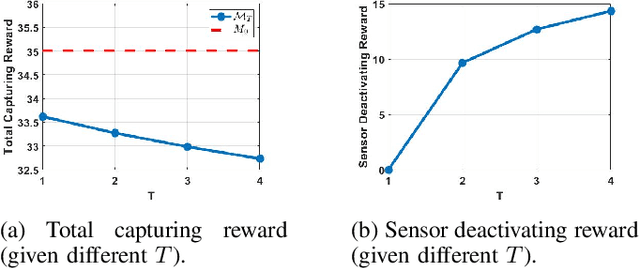

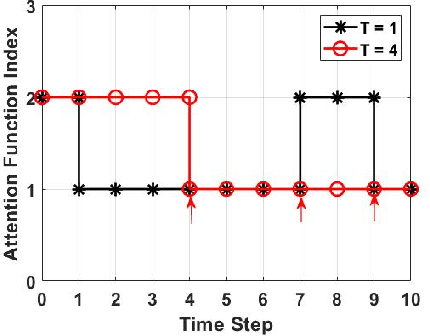

Attention control is a key cognitive ability that lets humans select the information relevant to the current task. This paper develops a computational model of attention and an algorithm for attention-based probabilistic planning in Markov decision processes (MDPs). In attention-based planning, the robot chooses among attention modes, where each attention mode corresponds to a subset of state variables that the robot monitors. By switching between attention modes, the robot actively perceives task-relevant information, reducing the cost of information acquisition and processing while achieving near-optimal task performance. Planning with attention-based active perception inevitably introduces partial observability, but formulating the problem as a partially observable MDP makes it computationally expensive to solve. Instead, the proposed method employs a hierarchical planning framework in which the robot determines what to pay attention to and how long to sustain that attention before shifting to other information sources. During the attention-sustaining phase, the robot carries out a sub-policy computed from an abstraction of the original MDP given the current attention mode. The method is demonstrated on an example in which a robot is tasked with capturing a set of intruders in a stochastic gridworld. The experimental results show that the proposed method enables information- and computation-efficient optimal planning in stochastic environments.
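To make the notion of an attention mode concrete, the following is a minimal sketch and not the paper's implementation. It assumes a factored state stored as a dictionary of named variables, treats an attention mode as the set of variable names being monitored, and charges a hypothetical per-variable monitoring cost. The names `observe`, `attention_cost`, and `schedule_cost`, the variable names, and the cost model are all illustrative assumptions.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Cell = Tuple[int, int]
State = Dict[str, Cell]          # factored state: variable name -> grid cell
Mode = FrozenSet[str]            # attention mode: names of monitored variables


def observe(state: State, mode: Mode) -> Dict[str, Optional[Cell]]:
    """Project the full state onto the monitored variables.

    Variables outside the attention mode are hidden (None), which is
    where the partial observability comes from.
    """
    return {name: (value if name in mode else None)
            for name, value in state.items()}


def attention_cost(mode: Mode, cost_per_variable: float = 1.0) -> float:
    """Assumed cost model: each monitored variable costs the same per step."""
    return cost_per_variable * len(mode)


def schedule_cost(schedule: List[Tuple[Mode, int]]) -> float:
    """Total perception cost of a list of (mode, sustain duration) pairs."""
    return sum(attention_cost(mode) * duration for mode, duration in schedule)


# Hypothetical gridworld state: one robot and two intruders.
state: State = {"robot": (0, 0), "intruder_1": (3, 4), "intruder_2": (1, 2)}

track_first: Mode = frozenset({"robot", "intruder_1"})
track_second: Mode = frozenset({"robot", "intruder_2"})

obs = observe(state, track_first)

# Sustain attention on intruder_1 for 3 steps, then shift to intruder_2 for 2.
schedule = [(track_first, 3), (track_second, 2)]
total = schedule_cost(schedule)
```

In this sketch, a high-level planner would choose the entries of `schedule`, while a sub-policy acting only on the output of `observe` would run during each sustained interval. How the paper computes those choices is not shown here.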
