Attention Based Feature Fusion For Multi-Agent Collaborative Perception


May 03, 2023

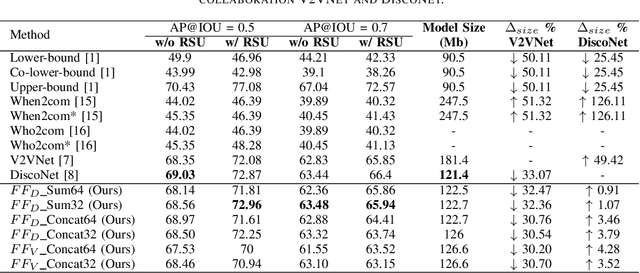

In intelligent transportation systems (ITS), collaborative perception has emerged as a promising way to overcome the limitations of individual perception: multiple agents exchange information to enhance their situational awareness, which lets connected agents perceive environments beyond their own line of sight and field of view. However, the reliability of collaborative perception depends heavily on the data aggregation strategy and on the communication bandwidth, both of which must cope with limited network resources. To improve object detection precision while easing the load on the network, we propose an intermediate collaborative perception solution in the form of a graph attention network (GAT). The proposed approach uses an attention-based aggregation strategy to fuse the intermediate representations exchanged among multiple connected agents. It adaptively highlights important regions in the intermediate feature maps at both the channel and spatial levels, resulting in improved object detection precision. We evaluate this attention-based feature fusion scheme quantitatively against other state-of-the-art collaborative perception approaches on the V2XSim dataset. The results demonstrate that the proposed approach improves object detection average precision for intermediate collaborative perception while reducing network resource usage.
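To make the idea of fusing intermediate feature maps at the channel and spatial levels more concrete, here is a minimal NumPy sketch. It is not the paper's graph attention network: the function name `attention_fuse`, the use of mean activations as attention scores, and the softmax across agents are all assumptions chosen for illustration, and a real model would learn these scores with trainable layers.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_fuse(feats):
    """Fuse per-agent feature maps with channel and spatial attention.

    feats: array of shape (N, C, H, W), one intermediate feature map
           per connected agent (hypothetical layout, assumed already
           aligned to a common coordinate frame).
    Returns the fused map of shape (C, H, W) and the per-agent
    weights of shape (N, C, H, W).
    """
    feats = np.asarray(feats, dtype=np.float64)

    # Channel-level scores: global average pool over H and W,
    # giving one score per agent and channel, shape (N, C, 1, 1).
    channel_scores = feats.mean(axis=(2, 3), keepdims=True)

    # Spatial-level scores: average over channels,
    # giving one score per agent and location, shape (N, 1, H, W).
    spatial_scores = feats.mean(axis=1, keepdims=True)

    # Softmax across agents (axis 0), so agents compete per channel
    # and per spatial location.
    channel_w = softmax(channel_scores, axis=0)
    spatial_w = softmax(spatial_scores, axis=0)

    # Combine both attention levels and renormalize across agents
    # so the weights at each (channel, location) sum to 1.
    weights = channel_w * spatial_w
    weights = weights / weights.sum(axis=0, keepdims=True)

    fused = (weights * feats).sum(axis=0)
    return fused, weights
```

Calling `attention_fuse` on an array of shape `(3, 4, 5, 5)` (three agents, four channels, a 5×5 grid) returns a fused map of shape `(4, 5, 5)`. Because the weights form a convex combination across agents, each fused value lies between the smallest and largest value that the agents report for that channel and location.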
