Assessing the State of Self-Supervised Human Activity Recognition using Wearables

Paper and Code

Feb 22, 2022

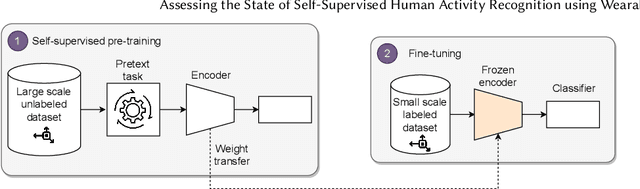

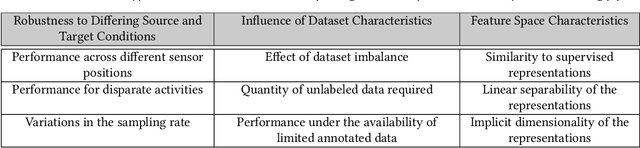

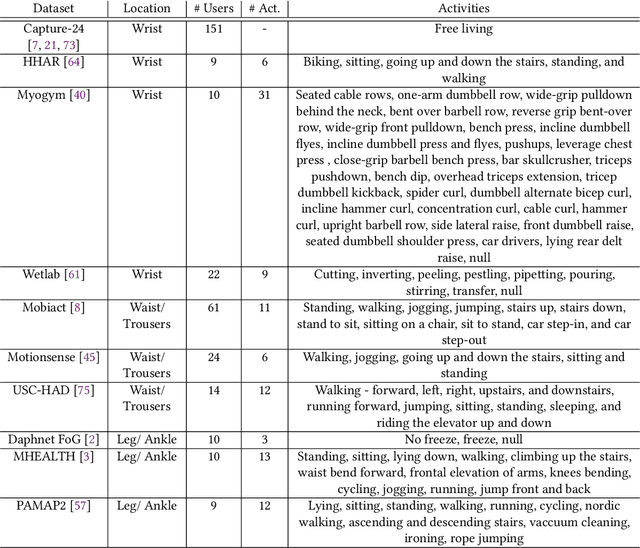

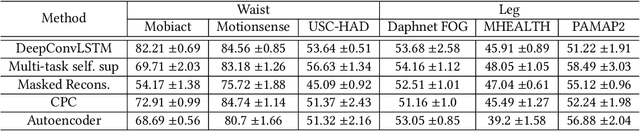

The emergence of self-supervised learning in the field of wearables-based human activity recognition (HAR) has opened up opportunities to tackle the most pressing challenges in the field, namely exploiting unlabeled data to derive reliable recognition systems from only small amounts of labeled training samples. Furthermore, self-supervised methods enable a host of new application domains, such as domain adaptation and transfer across sensor positions and activities. As such, self-supervision, i.e., the paradigm of 'pretrain-then-finetune', has the potential to become a strong alternative to the predominant end-to-end training approaches, let alone the classic activity recognition chain with hand-crafted features of sensor data. Recently, a number of contributions have introduced self-supervised learning into the field of HAR, including multi-task self-supervision, masked reconstruction, and contrastive predictive coding (CPC), to name but a few. With the initial success of these methods, the time has come for a systematic inventory and analysis of the potential that self-supervised learning has for the field. This paper provides exactly that. We assess the progress of self-supervised HAR research by introducing a framework that performs a multi-faceted exploration of model performance. We organize the framework into three dimensions, each containing three constituent criteria, and use it to assess state-of-the-art self-supervised learning methods in a large empirical study on a curated set of nine diverse benchmarks. This exploration allows us to formulate insights into the properties of these techniques and to establish their value for learning representations in diverse scenarios. Based on our findings, we call upon the community to join our efforts and to contribute towards shaping the evaluation of the ongoing paradigm change in modeling human activities from body-worn sensor data.
