Are Visual Explanations Useful? A Case Study in Model-in-the-Loop Prediction

Jul 23, 2020

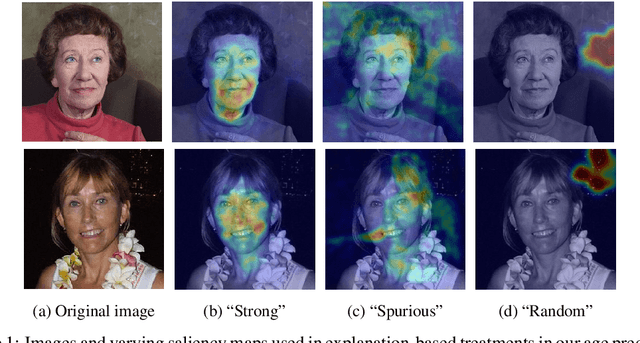

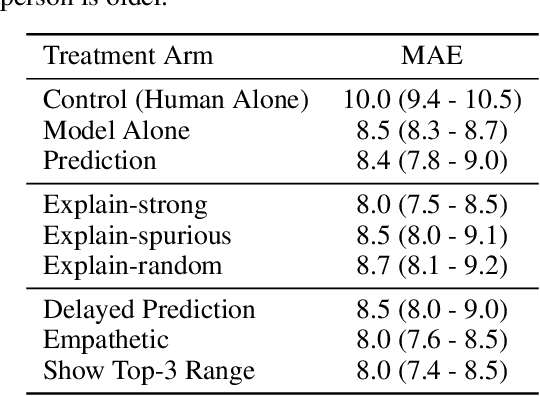

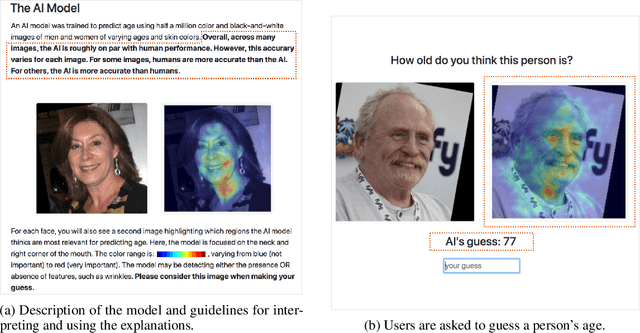

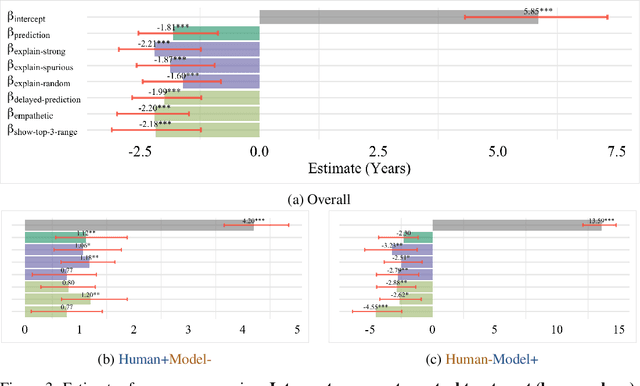

We present a randomized controlled trial for a model-in-the-loop regression task, with the goal of measuring the extent to which (1) good explanations of model predictions increase human accuracy, and (2) faulty explanations decrease human trust in the model. We study explanations based on visual saliency in an image-based age prediction task, one at which humans and learned models are each capable but not highly proficient, and on which they frequently disagree. Our experimental design separates model quality from explanation quality, and makes it possible to compare treatments involving explanations of varying levels of quality. We find that presenting model predictions improves human accuracy. However, visual explanations of various kinds fail to significantly alter human accuracy or trust in the model, regardless of whether the explanations characterize an accurate model, characterize an inaccurate one, or are generated randomly and independently of the input image. These findings suggest the need for greater evaluation of explanations in downstream decision-making tasks, better design-based tools for presenting explanations to users, and better approaches for generating explanations.
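To make the comparison between treatments concrete, the following is a minimal sketch of how human accuracy in two arms of such a trial could be compared on a regression task. It is not the authors' analysis: the abstract does not state which statistical procedure was used, so the permutation test here is an assumption, and the function names and the toy numbers are invented purely for illustration.

```python
import random
from statistics import mean


def absolute_errors(guesses, true_ages):
    """Per-response absolute error between guessed and true ages."""
    return [abs(g - t) for g, t in zip(guesses, true_ages)]


def permutation_test(errors_a, errors_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean absolute error.

    Returns (observed_difference, p_value), where the difference is
    mean(errors_a) - mean(errors_b).
    """
    rng = random.Random(seed)
    observed = mean(errors_a) - mean(errors_b)
    pooled = list(errors_a) + list(errors_b)
    n_a = len(errors_a)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign responses to arms at random, as if arm labels carried no effect.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return observed, (extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    # Invented toy data, NOT results from the study.
    true_ages = [25, 40, 33, 58, 47, 19]
    guesses_no_model = [31, 33, 40, 49, 55, 25]    # hypothetical control arm
    guesses_with_model = [27, 38, 36, 55, 50, 21]  # hypothetical prediction arm

    err_control = absolute_errors(guesses_no_model, true_ages)
    err_treated = absolute_errors(guesses_with_model, true_ages)
    diff, p_value = permutation_test(err_control, err_treated, n_permutations=2000)
    print(f"MAE difference (control - treated): {diff:.2f}, p = {p_value:.3f}")
```

The same comparison could be repeated for each explanation treatment against the prediction-only arm; a difference that is not statistically distinguishable from zero would correspond to the finding that explanations did not significantly alter accuracy.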
