Approximated Infomax Early Stopping: Revisiting Gaussian RBMs on Natural Images

Paper and Code

Jan 06, 2014

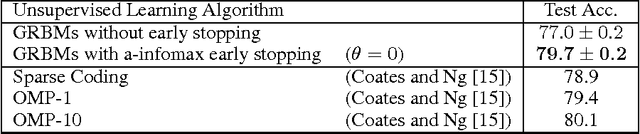

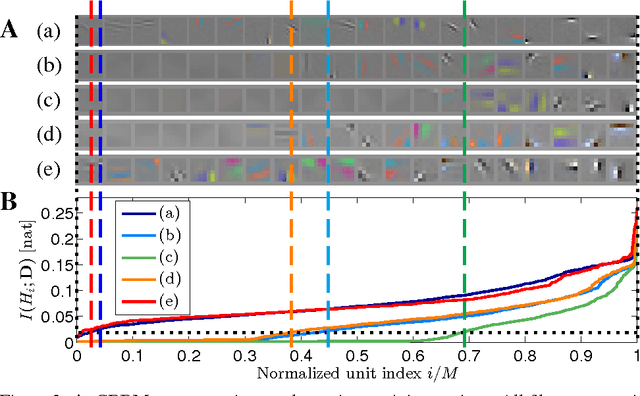

We pursue an early stopping technique that helps Gaussian Restricted Boltzmann Machines (GRBMs) to gain good natural image representations in terms of overcompleteness and data fitting. GRBMs are widely considered as an unsuitable model for natural images because they gain non-overcomplete representations which include uniform filters that do not represent useful image features. We have recently found that GRBMs once gain and subsequently lose useful filters during their training, contrary to this common perspective. We attribute this phenomenon to a tradeoff between overcompleteness of GRBM representations and data fitting. To gain GRBM representations that are overcomplete and fit data well, we propose a measure for GRBM representation quality, approximated mutual information, and an early stopping technique based on this measure. The proposed method boosts performance of classifiers trained on GRBM representations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge