Anticipating Oblivious Opponents in Stochastic Games

Paper and Code

Sep 18, 2024

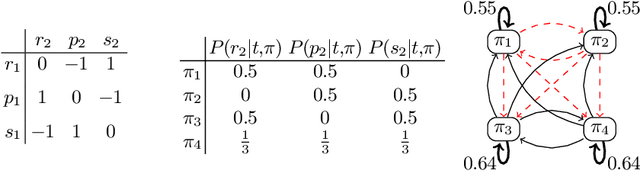

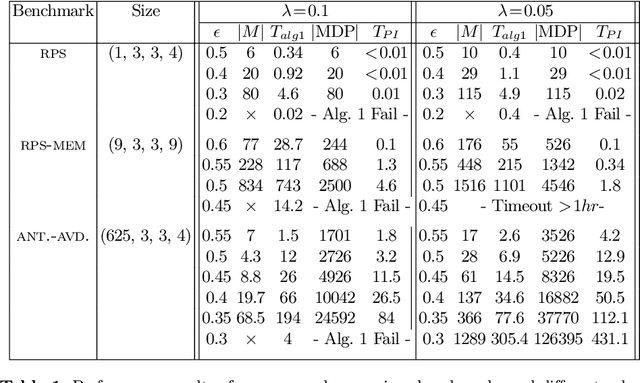

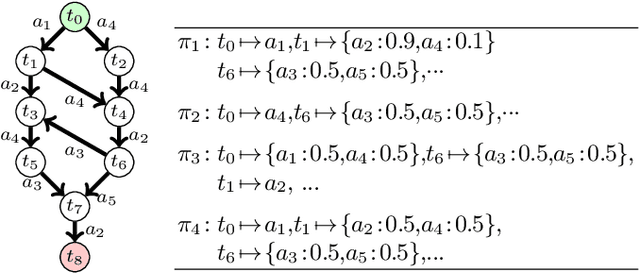

We present an approach for systematically anticipating the actions and policies employed by \emph{oblivious} environments in concurrent stochastic games, while maximizing a reward function. Our main contribution lies in the synthesis of a finite \emph{information state machine} whose alphabet ranges over the actions of the environment. Each state of the automaton is mapped to a belief state about the policy used by the environment. We introduce a notion of consistency that guarantees that the belief states tracked by our automaton stays within a fixed distance of the precise belief state obtained by knowledge of the full history. We provide methods for checking consistency of an automaton and a synthesis approach which upon successful termination yields such a machine. We show how the information state machine yields an MDP that serves as the starting point for computing optimal policies for maximizing a reward function defined over plays. We present an experimental evaluation over benchmark examples including human activity data for tasks such as cataract surgery and furniture assembly, wherein our approach successfully anticipates the policies and actions of the environment in order to maximize the reward.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge