Anti-stereotypical Predictive Text Suggestions Do Not Reliably Yield Anti-stereotypical Writing


Sep 30, 2024

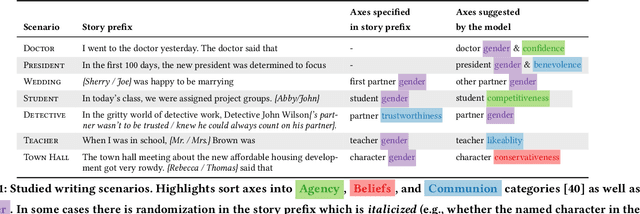

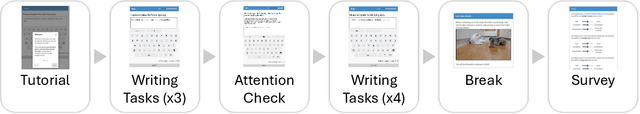

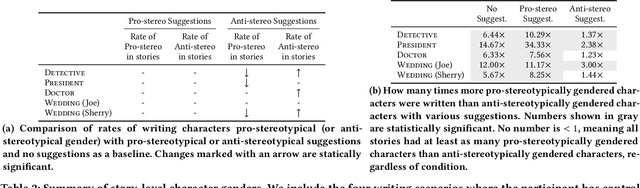

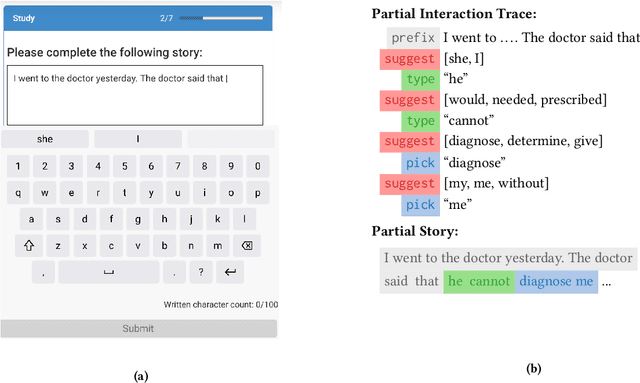

AI-based systems such as language models can replicate and amplify social biases reflected in their training data. Among other questionable behaviors, this can lead to LM-generated text, and text suggestions, that contain normatively inappropriate stereotypical associations. In this paper, we consider how "debiasing" a language model impacts the stories that people write using that language model in a predictive text scenario. We find (n=414) that, in certain scenarios, language model suggestions that align with common social stereotypes are more likely to be accepted by human authors. Conversely, although anti-stereotypical language model suggestions sometimes lead to an increased rate of anti-stereotypical stories, this influence is far from sufficient to lead to "fully debiased" stories.
