Answering Questions in Stages: Prompt Chaining for Contract QA

Oct 09, 2024

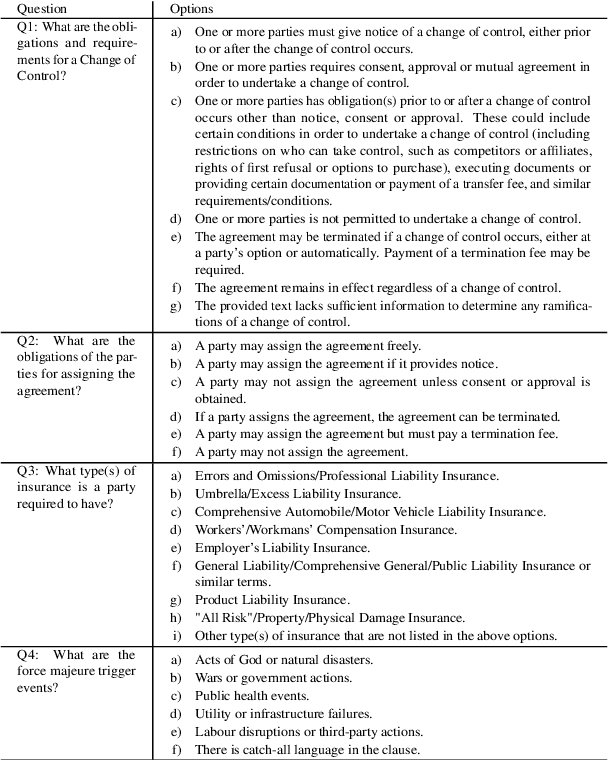

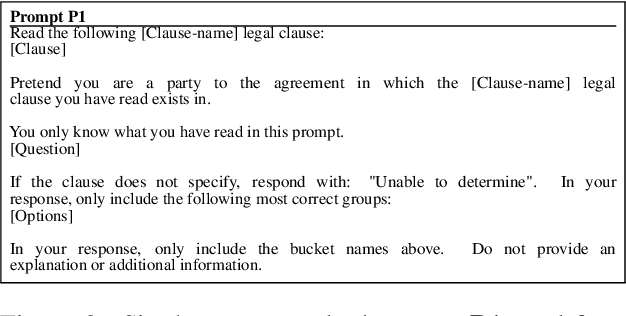

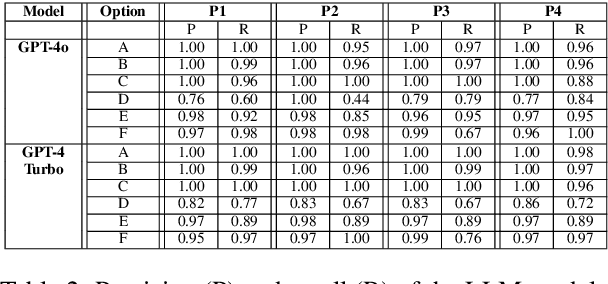

Answering legal questions about contract clauses is an important form of analysis in many legal workflows (e.g., understanding market trends, due diligence, risk mitigation), and being able to do so at scale matters even more. Prior work showed that large language models with simple zero-shot prompts can generate structured answers to such questions, which can later be incorporated into legal workflows. Such prompts, while effective on simple and straightforward clauses, perform poorly when the clauses are long and contain information not relevant to the question. In this paper, we propose two-stage prompt chaining to produce structured answers to multiple-choice and multiple-select questions and show that it is more effective than simple prompts on more nuanced legal text. We analyze situations where this technique works well and areas where further refinement is needed, especially when the underlying linguistic variation is greater than can be captured by simply specifying possible answers. Finally, we discuss future research that seeks to refine this work by making the stage-one results more question-specific.
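
As a rough illustration of what a two-stage chain for multiple-select questions might look like, the sketch below first condenses a clause and then asks the model to pick from a fixed list of options. The abstract does not spell out the prompts, so everything here is an assumption: the prompt wording, the choice of a summary as the stage-one output, the numbered-option reply format, and the names `stage_one_prompt`, `stage_two_prompt`, `parse_selection`, and `answer_question` are all hypothetical. The `llm` argument stands in for any function that maps a prompt string to model text.

```python
from typing import Callable, List

# Hypothetical interface: any function that takes a prompt and returns model text.
LLM = Callable[[str], str]


def stage_one_prompt(clause: str) -> str:
    """Stage one (assumed): condense the clause, independent of the question."""
    return (
        "Summarize the following contract clause in plain language, "
        "keeping every obligation, condition, and exception.\n\n"
        f"Clause:\n{clause}"
    )


def stage_two_prompt(summary: str, question: str, options: List[str]) -> str:
    """Stage two (assumed): map the summary onto a fixed set of answer options."""
    numbered = "\n".join(f"{i + 1}. {opt}" for i, opt in enumerate(options))
    return (
        f"Summary of clause:\n{summary}\n\n"
        f"Question: {question}\n"
        f"Options:\n{numbered}\n\n"
        "Reply with the numbers of all options that apply, separated by commas."
    )


def parse_selection(reply: str, options: List[str]) -> List[str]:
    """Turn a reply such as '1, 3' into the matching option strings.

    Out-of-range numbers, duplicates, and non-numeric tokens are ignored.
    """
    chosen: List[str] = []
    for token in reply.replace(",", " ").split():
        if token.isdecimal():
            idx = int(token) - 1
            if 0 <= idx < len(options) and options[idx] not in chosen:
                chosen.append(options[idx])
    return chosen


def answer_question(
    llm: LLM, clause: str, question: str, options: List[str]
) -> List[str]:
    """Chain the two prompts: the stage-one output feeds the stage-two prompt."""
    summary = llm(stage_one_prompt(clause))
    reply = llm(stage_two_prompt(summary, question, options))
    return parse_selection(reply, options)
```

Because the stage-one prompt in this sketch never sees the question, its summary could be reused across several questions about the same clause. It is also the part that the future work mentioned above would make more question-specific.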
