ANI-1: A data set of 20M off-equilibrium DFT calculations for organic molecules

Paper and Code

Dec 12, 2017

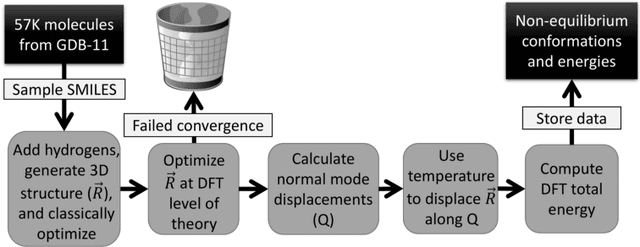

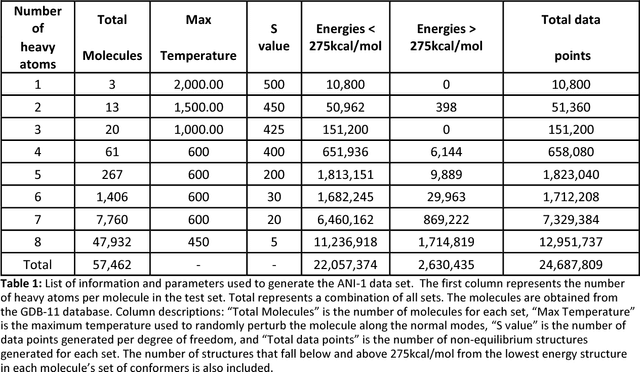

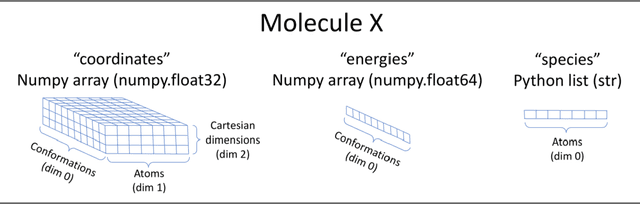

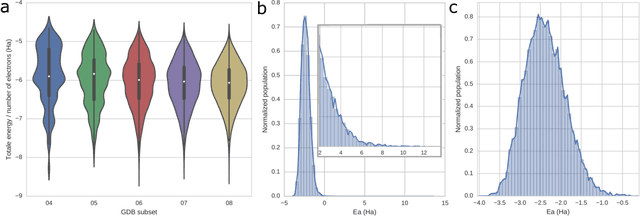

One of the grand challenges in modern theoretical chemistry is designing and implementing approximations that expedite ab initio methods without loss of accuracy. Machine learning (ML), in particular neural networks, is emerging as a powerful approach to constructing various forms of transferable atomistic potentials. Such potentials have been successfully applied in chemistry, biology, catalysis, and solid-state physics. However, these models depend heavily on the quality and quantity of the data used to fit them. Fitting highly flexible ML potentials comes at a cost: a vast amount of reference data is required to train them properly. We address this need by providing access to a large computational DFT database, which consists of 20M conformations for 57,454 small organic molecules. We believe it will become a new standard benchmark for comparing current and future methods in the ML potential community.
