An Ontology-Aware Framework for Audio Event Classification


Jan 27, 2020

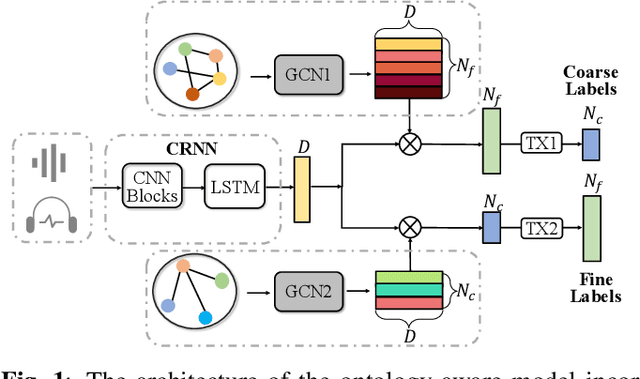

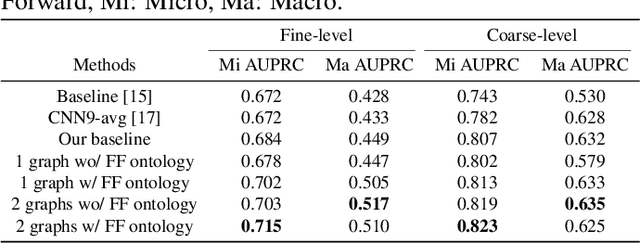

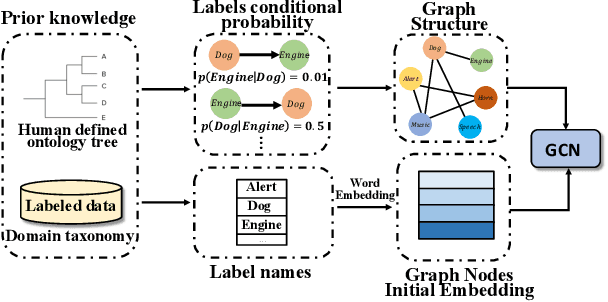

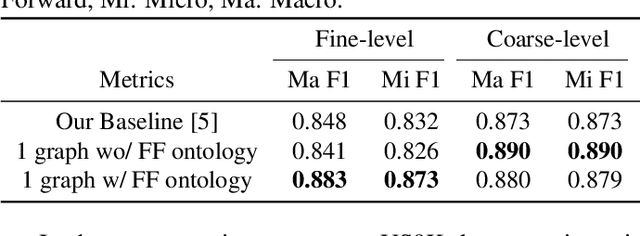

Recent advances in audio event classification often ignore the structure of, and relations between, the label classes, even though both are available as prior information. This structure can be defined by an ontology and incorporated into the classifier as a form of domain knowledge. To capture such dependencies between the labels, we propose an ontology-aware neural network containing two components: feed-forward ontology layers and graph convolutional networks (GCN). The feed-forward ontology layers capture the intra-dependencies of labels between different levels of the ontology, whereas the GCN mainly models the inter-dependency structure of labels within an ontology level. The framework is evaluated on two benchmark datasets for single-label and multi-label audio event classification tasks. The results demonstrate the proposed solution's efficacy in capturing and exploiting the ontology relations and in improving classification performance.
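The two components named in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the two-level ontology, the sibling-based label graph, the random weights, and all names (`parent`, `adj`, `label_emb`, `gcn_layer`) are assumptions made for illustration. The fixed child-to-parent aggregation is a simplified stand-in for a learned feed-forward ontology layer, and the graph convolution uses the standard symmetric normalization rather than any variant specific to the paper.

```python
import numpy as np

def normalize_adjacency(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 (standard GCN form)."""
    a_hat = adj + np.eye(adj.shape[0])          # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(a_norm, h, w):
    """One graph convolution: ReLU(A_norm @ H @ W)."""
    return np.maximum(a_norm @ h @ w, 0.0)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)

# Hypothetical two-level ontology: 4 fine labels, 2 coarse labels.
# parent[i, j] = 1 if fine label i is a child of coarse label j.
parent = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)

# Assumed label graph within the fine level: siblings are connected.
adj = parent @ parent.T - np.eye(4)
a_norm = normalize_adjacency(adj)

# GCN over label embeddings yields one classifier vector per fine label.
label_emb = rng.normal(size=(4, 8))             # random stand-in embeddings
w = rng.normal(size=(8, 16))                    # random stand-in weights
label_clf = gcn_layer(a_norm, label_emb, w)     # shape (4, 16)

# Score a batch of 3 (random) audio embeddings against the fine labels.
audio_feat = rng.normal(size=(3, 16))
fine_prob = softmax(audio_feat @ label_clf.T)   # shape (3, 4)

# Simplified ontology step: sum child probabilities into each parent.
coarse_prob = fine_prob @ parent                # shape (3, 2)
```

In this single-label setting each row of `coarse_prob` sums to one, because every fine label has exactly one parent. A multi-label variant would replace the softmax with per-label sigmoids and would need a different aggregation rule.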
