An L1 Representer Theorem for Multiple-Kernel Regression

Nov 02, 2018

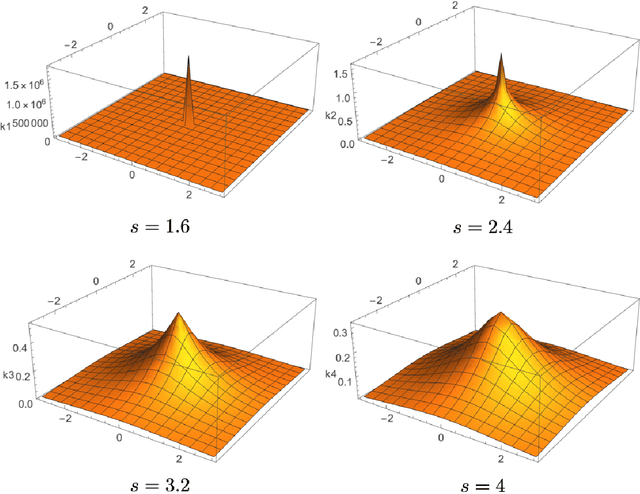

The theory of reproducing kernel Hilbert spaces (RKHS) provides an elegant framework for supervised learning and is the foundation of all kernel methods in machine learning. Implicit in its formulation is the use of a quadratic regularizer associated with the underlying inner product, which imposes smoothness constraints. In this paper, we instead consider the generalized total-variation (gTV) norm as a sparsity-promoting regularizer. This leads us to propose a new Banach-space framework that justifies the use of the generalized LASSO, albeit in a slightly modified version. We prove a representer theorem for multiple-kernel regression (MKR) with gTV regularization. The theorem states that the solutions of MKR have kernel expansions with adaptive positions, while the gTV norm enforces an $\ell_1$ penalty on the coefficients. We discuss the sparsity-promoting effect of the gTV norm, which prevents redundancy in the multiple-kernel scenario.
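To make the shape of such a solution concrete, here is a minimal sketch of an $\ell_1$-penalized multiple-kernel fit. It is an illustration under several assumptions and not the paper's method: the kernels are Gaussians of two hypothetical widths, the kernel positions are restricted to a fixed grid (the theorem allows adaptive positions), the objective is the plain LASSO $\tfrac{1}{2}\|Ha - y\|_2^2 + \lambda\|a\|_1$ rather than the modified version the abstract mentions, and the solver is ISTA (iterative soft thresholding), chosen only because it fits in a few lines. All function names are made up for this example.

```python
import math

def gaussian(x, center, width):
    """Gaussian kernel value at x (assumed kernel family, not from the paper)."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))

def build_dictionary(xs, centers, widths):
    """One column per (width, center) pair: a fixed-grid stand-in for adaptive positions."""
    atoms = [(w, c) for w in widths for c in centers]
    H = [[gaussian(x, c, w) for (w, c) in atoms] for x in xs]
    return H, atoms

def soft_threshold(v, t):
    """Proximal operator of t * |.|: shrink v toward zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)

def objective(H, y, a, lam):
    """0.5 * ||H a - y||^2 + lam * ||a||_1."""
    res = [sum(h * aj for h, aj in zip(row, a)) - yi for row, yi in zip(H, y)]
    return 0.5 * sum(r * r for r in res) + lam * sum(abs(aj) for aj in a)

def ista(H, y, lam, n_iter=300):
    """Iterative soft thresholding for the objective above."""
    n, p = len(H), len(H[0])
    # Step size 1/L; the squared Frobenius norm upper-bounds the squared spectral norm.
    L = sum(h * h for row in H for h in row)
    a = [0.0] * p
    for _ in range(n_iter):
        res = [sum(H[i][j] * a[j] for j in range(p)) - y[i] for i in range(n)]
        grad = [sum(H[i][j] * res[i] for i in range(n)) for j in range(p)]
        a = [soft_threshold(a[j] - grad[j] / L, lam / L) for j in range(p)]
    return a

# Toy data: a narrow bump plus a wide negative bump, sampled at 15 points.
xs = [i / 14 for i in range(15)]
y = [1.5 * gaussian(x, 0.3, 0.05) - gaussian(x, 0.7, 0.2) for x in xs]

centers = [i / 10 for i in range(11)]   # hypothetical grid of kernel positions
widths = (0.05, 0.2)                    # two kernels, one per width
H, atoms = build_dictionary(xs, centers, widths)

coeffs = ista(H, y, lam=0.01)
active = [(w, c, round(a, 3)) for (w, c), a in zip(atoms, coeffs) if a != 0.0]
print(len(active), "of", len(atoms), "atoms are active")
```

The coefficient vector spans both kernels at once, so a single $\ell_1$ penalty decides which kernel and which position explain each part of the data. A quadratic penalty would instead tend to spread weight across all atoms.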
