An Information-theoretic Progressive Framework for Interpretation


Jan 08, 2021

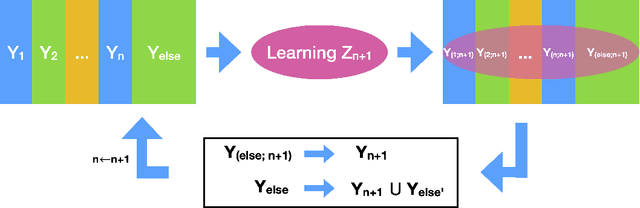

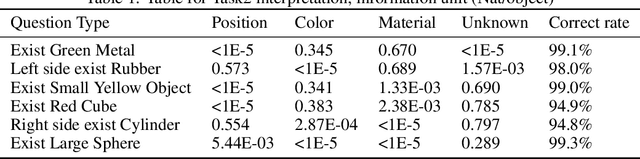

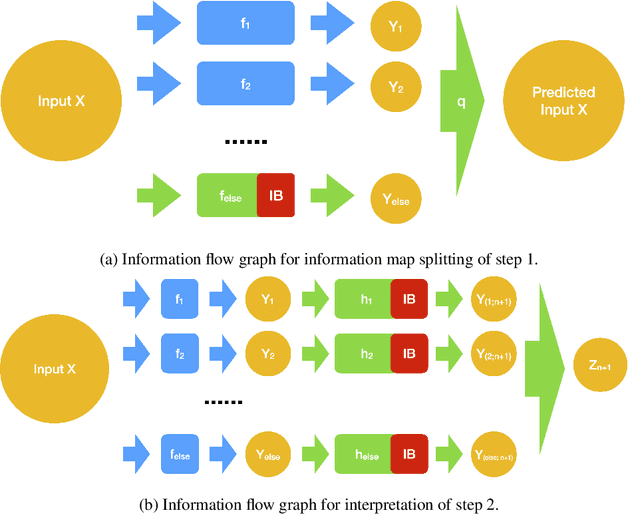

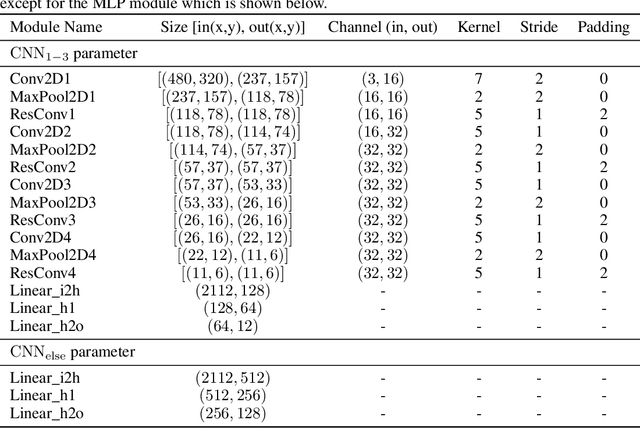

Both the brain science and deep learning communities face the problem of interpreting neural activity. In deep learning, even though the activity of every neuron is accessible, interpreting how a deep network solves a task remains challenging. Although a large amount of effort has been devoted to interpreting deep networks, there is still no consensus on what interpretation is. This paper tries to push the discussion in this direction and proposes an information-theoretic progressive framework to synthesize interpretation. First, we discuss intuitions about interpretation: interpretation is meta-information; interpretation should be at the right level; inducing independence is helpful to interpretation; interpretation is naturally progressive; interpretation doesn't have to involve a human. Then, we build the framework around an information map splitting idea and implement it with the variational information bottleneck technique. After that, we test the framework on the CLEVR dataset. The framework is shown to be able to split information maps and synthesize interpretation in the form of meta-information.
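
The abstract names the variational information bottleneck (VIB) as the implementation technique but does not spell out an objective. As a rough illustration only, the sketch below computes the generic VIB loss for a single example: a cross-entropy task term plus a `beta`-weighted KL term that penalizes how much information a diagonal-Gaussian latent code carries relative to a standard normal prior. The function names, the `beta` default, and the choice of prior are assumptions made for this example; the paper's information map splitting procedure is not reproduced here.

```python
import math


def gaussian_kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) for one latent vector."""
    return 0.5 * sum(
        m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var)
    )


def cross_entropy(logits, label):
    """Negative log-probability of `label` under softmax(logits)."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_norm = max_logit + math.log(
        sum(math.exp(x - max_logit) for x in logits)
    )
    return log_norm - logits[label]


def vib_loss(logits, label, mu, log_var, beta=1e-3):
    """Generic VIB objective: task loss plus beta-weighted compression term.

    `beta` (hypothetical default) trades prediction accuracy against how
    much information the latent code is allowed to keep about the input.
    """
    kl = gaussian_kl_to_standard_normal(mu, log_var)
    return cross_entropy(logits, label) + beta * kl
```

In this form, a larger `beta` pushes the latent code toward the prior and discards more input information, while `beta = 0` reduces the loss to plain cross-entropy.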
