An Exact Reformulation of Feature-Vector-based Radial-Basis-Function Networks for Graph-based Observations

Paper and Code

Jan 22, 2019

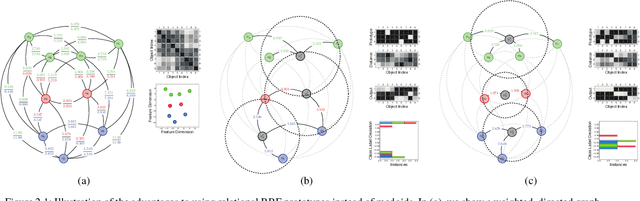

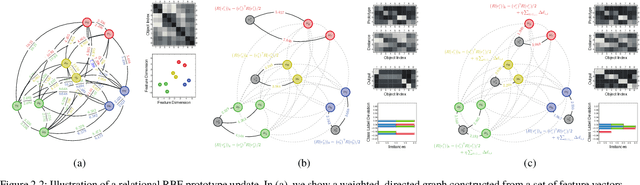

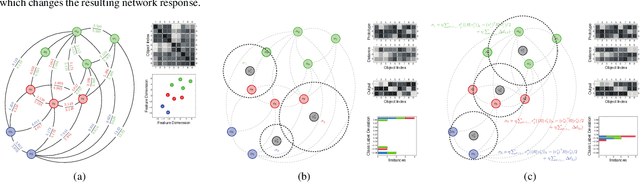

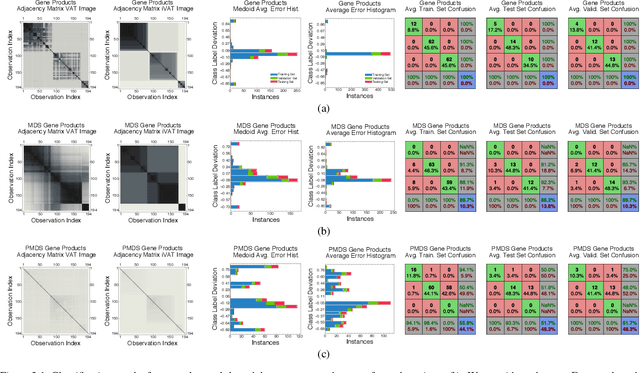

Radial-basis-function networks are traditionally defined for sets of vector-based observations. In this short paper, we reformulate such networks so that they can be applied to adjacency-matrix representations of weighted, directed graphs that encode the relationships between object pairs. We restate the sum-of-squares objective function so that it depends only on entries of the adjacency matrix. From this objective function, we derive a gradient-descent update for the network weights. We also derive a gradient update that simulates the repositioning of the radial-basis prototypes and changes in the prototype parameters. An important property of our radial-basis-function networks is that they are guaranteed to yield the same responses as conventional radial-basis-function networks trained on a corresponding vector realization of the relationships encoded by the adjacency matrix. Such a vector realization need only provably exist for this property to hold, which is the case whenever the relationships correspond to distances, under some metric, between the members of a latent set of vectors. We therefore never need to construct vector realizations via multi-dimensional scaling, which ensures that the underlying relationships are preserved exactly.
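The equivalence claim can be illustrated with a small numerical sketch. This is not the paper's formulation. It makes several simplifying assumptions: Gaussian basis functions, prototypes fixed at a few observed nodes rather than repositioned, a symmetric Euclidean distance matrix rather than a general weighted, directed graph, and a plain batch gradient-descent update on the mean squared error. The names `rbf_design_from_distances` and `train_weights` are hypothetical. The latent vectors `X` are used only to generate the distance matrix and to build the conventional network for comparison.

```python
import numpy as np

def rbf_design_from_distances(D, prototype_idx, sigma):
    """Gaussian activations computed only from entries of the distance matrix D.

    Assumption: each prototype coincides with an observed node, so the
    distance from node i to prototype j is simply D[i, prototype_idx[j]].
    """
    return np.exp(-D[:, prototype_idx] ** 2 / (2.0 * sigma ** 2))

def train_weights(Phi, y, lr=0.1, steps=2000):
    """Batch gradient descent on E(w) = ||Phi w - y||^2 / (2 n)."""
    n = Phi.shape[0]
    w = np.zeros(Phi.shape[1])
    for _ in range(steps):
        w -= lr * Phi.T @ (Phi @ w - y) / n
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 2))           # latent vectors (never seen by the graph-based network)
D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # pairwise distances
y = np.sin(X[:, 0])                    # illustrative regression targets
prototype_idx = [0, 5, 10, 15, 20]     # illustrative choice of prototype nodes
sigma = 1.0

# Graph-based network: uses D only.
Phi_graph = rbf_design_from_distances(D, prototype_idx, sigma)
w_graph = train_weights(Phi_graph, y)

# Conventional network: uses the latent vectors directly.
C = X[prototype_idx]
sq_dist = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=-1)
Phi_vec = np.exp(-sq_dist / (2.0 * sigma ** 2))
w_vec = train_weights(Phi_vec, y)

# Largest difference between the two networks' responses.
max_gap = np.max(np.abs(Phi_graph @ w_graph - Phi_vec @ w_vec))
```

Under these assumptions the two networks see identical activations, so their trained responses agree up to floating-point error, and no vector realization is constructed from `D` at any point.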
