An Evaluation of OCR on Egocentric Data


Jun 11, 2022

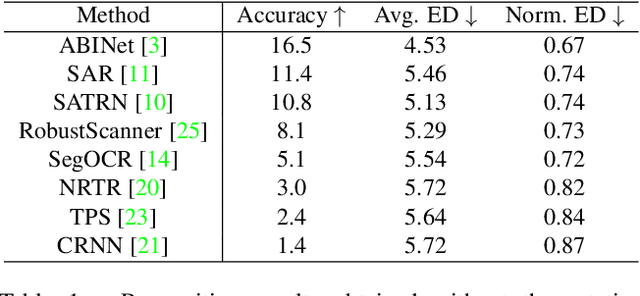

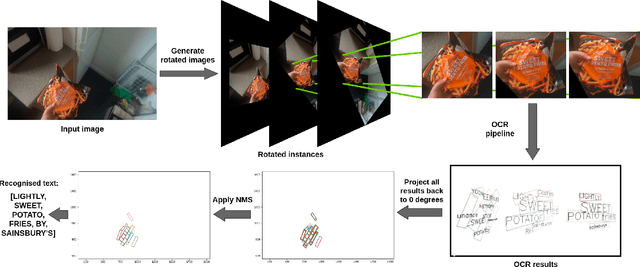

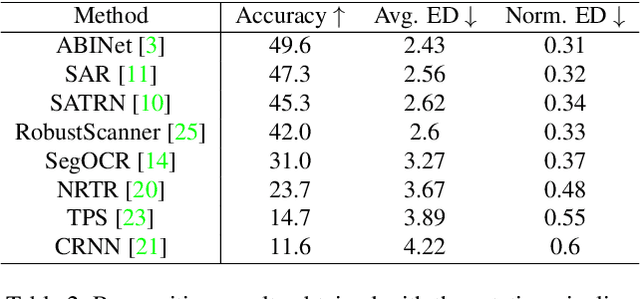

In this paper, we evaluate state-of-the-art OCR methods on egocentric data. We annotate text in EPIC-KITCHENS images and show that existing OCR methods struggle with rotated text, which is common on objects being handled. We introduce a simple rotate-and-merge procedure that can be applied to pre-trained OCR models and halves the normalized edit distance error. This suggests that future OCR approaches should incorporate rotation into model design and training.
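The abstract does not spell out how the rotate-and-merge procedure or the error metric is defined, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes a hypothetical recognizer `recognize(crop) -> (text, confidence)` applied to a single cropped text region, tries the four 90-degree rotations, and keeps the most confident reading. It also assumes the common definition of normalized edit distance: Levenshtein distance divided by the length of the longer string. Images are plain nested lists so the example has no dependencies.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]
# Hypothetical recognizer interface (assumption): crop -> (text, confidence).
Recognizer = Callable[[Image], Tuple[str, float]]


def rotate90(img: Image) -> Image:
    """Rotate a 2-D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def rotate_and_merge(crop: Image, recognize: Recognizer) -> Tuple[str, float]:
    """Run the recognizer at 0/90/180/270 degrees; keep the most confident reading.

    The 'merge' here is a simple max-confidence choice (an assumption);
    the paper's actual merging rule may differ.
    """
    best = recognize(crop)
    current = crop
    for _ in range(3):
        current = rotate90(current)
        text, conf = recognize(current)
        if conf > best[1]:
            best = (text, conf)
    return best


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance with unit insert/delete/substitute costs."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute
        prev = cur
    return prev[-1]


def normalized_edit_distance(pred: str, gt: str) -> float:
    """Edit distance divided by the longer string's length (assumed normalization)."""
    longest = max(len(pred), len(gt))
    return 0.0 if longest == 0 else levenshtein(pred, gt) / longest
```

Because the rotation wrapper only calls the recognizer, it can sit in front of any pre-trained model without retraining, at the cost of roughly four recognizer passes per region.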

* Extended Abstract, EPIC workshop at CVPR 2022
