An Evaluation of Change Point Detection Algorithms

Paper and Code

Mar 13, 2020

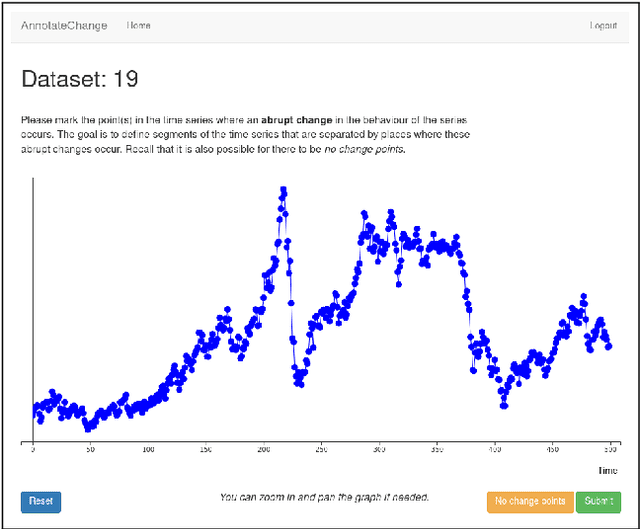

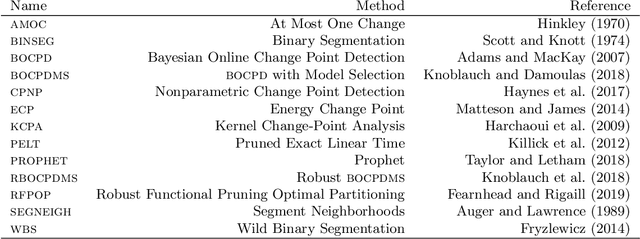

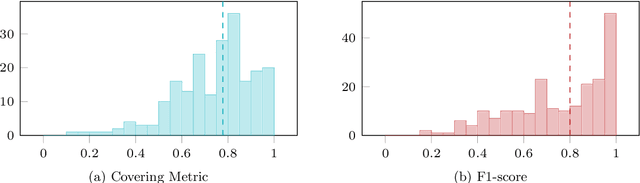

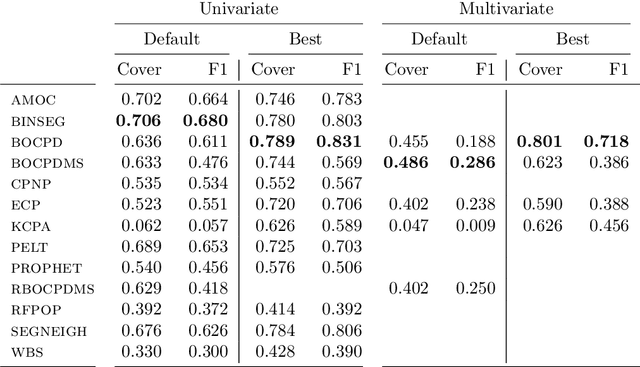

Change point detection is an important part of time series analysis, as the presence of a change point indicates an abrupt and significant change in the data-generating process. While many algorithms for change point detection exist, little attention has been paid to evaluating their performance on real-world time series. Algorithms are typically evaluated on simulated data and on a small number of commonly used series with unreliable ground truth, which does not provide sufficient insight into their comparative performance. Therefore, instead of developing yet another change point detection method, we consider it vastly more important to properly evaluate existing algorithms on real-world data. To achieve this, we present the first data set specifically designed for the evaluation of change point detection algorithms, consisting of 37 time series from various domains. Each time series was annotated by five expert human annotators to provide ground truth on the presence and location of change points. We analyze the consistency of the human annotators and describe evaluation metrics that can be used to measure algorithm performance in the presence of multiple ground truth annotations. Subsequently, we present a benchmark study in which 13 existing algorithms are evaluated on each of the time series in the data set. This study shows that binary segmentation (Scott and Knott, 1974) and Bayesian online change point detection (Adams and MacKay, 2007) are among the best-performing methods. Our aim is for this data set to serve as a proving ground in the development of novel change point detection algorithms.
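The abstract does not spell out the evaluation metrics, so the following is only a minimal sketch of one plausible way to score a detector against several annotators: an F1 score with a tolerance margin, averaged over annotators. The function names (`f1_with_margin`, `mean_f1_over_annotators`), the default margin of 5 time steps, the greedy matching rule, and the plain averaging are all assumptions made for illustration, not the paper's definitions.

```python
from typing import Iterable, List, Sequence


def f1_with_margin(predicted: Iterable[int], truth: Iterable[int], margin: int = 5) -> float:
    """F1 score where a predicted change point is a hit if it lies within
    `margin` time steps of a true change point that is not yet matched.

    Hypothetical helper: the margin and matching rule are assumptions.
    """
    pred: List[int] = sorted(set(predicted))
    unmatched: List[int] = sorted(set(truth))
    n_true = len(unmatched)

    # Detector and annotator agree that the series has no change point.
    if not pred and n_true == 0:
        return 1.0
    # One side reports change points and the other reports none.
    if not pred or n_true == 0:
        return 0.0

    hits = 0
    for p in pred:
        # Match each prediction to the closest still-unmatched true point,
        # so one true change point cannot be credited twice.
        candidates = [t for t in unmatched if abs(t - p) <= margin]
        if candidates:
            best = min(candidates, key=lambda t: abs(t - p))
            unmatched.remove(best)
            hits += 1

    if hits == 0:
        return 0.0
    precision = hits / len(pred)
    recall = hits / n_true
    return 2 * precision * recall / (precision + recall)


def mean_f1_over_annotators(
    predicted: Sequence[int],
    annotations: Sequence[Sequence[int]],
    margin: int = 5,
) -> float:
    """Average the margin-tolerant F1 over all annotators (an assumed
    way of combining multiple ground truth annotations)."""
    scores = [f1_with_margin(predicted, a, margin) for a in annotations]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Made-up example: three annotators, one of whom marked no change point.
    annotations = [[48, 118], [52], []]
    print(mean_f1_over_annotators([50, 120], annotations))
```

Averaging per-annotator scores treats every annotator as equally reliable; other choices, such as scoring against the union of all annotations, would weight disagreement between annotators differently.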
